Apr 12, 2016

Is the Universe a Simulation? Scientists Debate

Posted by Sean Brazell in categories: biotech/medical, information science, mobile phones, neuroscience, space

Hmm… That would explain Alzheimer’s disease: it’d be like some sort of unabashedly evil version of a smartphone data cap!

Or not.

Continue reading “Is the Universe a Simulation? Scientists Debate” »

Apr 12, 2016

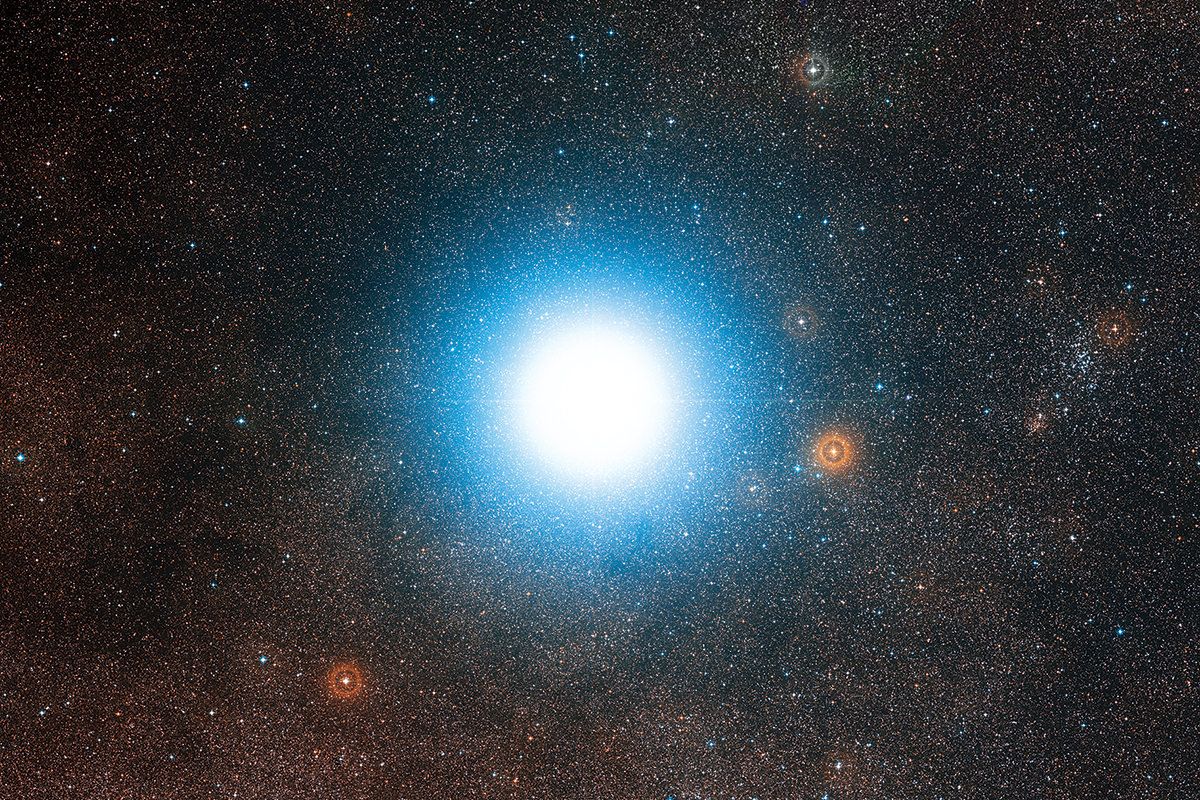

Billionaire pledges $100m to send spaceships to Alpha Centauri

Posted by Sean Brazell in category: space travel

Regardless of whether this particular project achieves its aims, hopefully it will encourage other obscenely wealthy philanthropists to funnel their donations into private (or governmental) outer-space-related endeavors!

Yuri Milner has backed a plan to research sending tiny, laser-powered spacecraft to the nearest star at 20 per cent of the speed of light.
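For a sense of scale, here is a back-of-the-envelope sketch of the cruise time at 20 per cent of the speed of light. The 4.37 light-year distance to Alpha Centauri is an assumption (the post does not state it), and the function name is purely illustrative. The estimate ignores the acceleration phase and gives the time as measured from Earth.

```python
# Rough cruise time to the nearest star at a constant fraction of light speed.
# Assumption: Alpha Centauri is about 4.37 light-years away (not stated in the post).
ALPHA_CENTAURI_LY = 4.37


def cruise_time_years(distance_ly: float, fraction_of_c: float) -> float:
    """Earth-frame travel time in years, ignoring acceleration and deceleration."""
    if not 0 < fraction_of_c <= 1:
        raise ValueError("fraction_of_c must be in the range (0, 1]")
    # A light-year is the distance light covers in one year, so the
    # travel time in years is simply distance divided by speed (in units of c).
    return distance_ly / fraction_of_c


if __name__ == "__main__":
    years = cruise_time_years(ALPHA_CENTAURI_LY, 0.20)
    print(f"Cruise time at 0.2c: about {years:.0f} years")
```

Under these assumptions the trip takes a little over two decades, plus roughly four more years for any signal to return to Earth.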

Apr 12, 2016

$100-Million Plan Will Send Probes to the Nearest Star

Posted by Andreas Matt in category: innovation

Funded by Russian entrepreneur Yuri Milner and with the blessing of Stephen Hawking, Breakthrough Starshot aims to send probes to Alpha Centauri in a generation.

By Lee Billings on April 12, 2016.

Apr 12, 2016

Inertia as a zero-point-field Lorentz force

Posted by Andreas Matt in categories: materials, particle physics

Older, but interesting…

Under the hypothesis that ordinary matter is ultimately made of subelementary constitutive primary charged entities or ‘‘partons’’ bound in the manner of traditional elementary Planck oscillators (a time-honored classical technique), it is shown that a heretofore uninvestigated Lorentz force (specifically, the magnetic component of the Lorentz force) arises in any accelerated reference frame from the interaction of the partons with the vacuum electromagnetic zero-point field (ZPF). Partons, though asymptotically free at the highest frequencies, are endowed with a sufficiently large ‘‘bare mass’’ to allow interactions with the ZPF at very high frequencies up to the Planck frequencies. This Lorentz force, though originating at the subelementary parton level, appears to produce an opposition to the acceleration of material objects at a macroscopic level having the correct characteristics to account for the property of inertia. We thus propose the interpretation that inertia is an electromagnetic resistance arising from the known spectral distortion of the ZPF in accelerated frames. The proposed concept also suggests a physically rigorous version of Mach’s principle. Moreover, some preliminary independent corroboration is suggested for ideas proposed by Sakharov (Dokl. Akad. Nauk SSSR 177, 70 (1968) [Sov. Phys. Dokl. 12, 1040 (1968)]) and further explored by one of us [H. E. Puthoff, Phys. Rev. A 39, 2333 (1989)] concerning a ZPF-based model of Newtonian gravity, and for the equivalence of inertial and gravitational mass as dictated by the principle of equivalence.

Apr 12, 2016

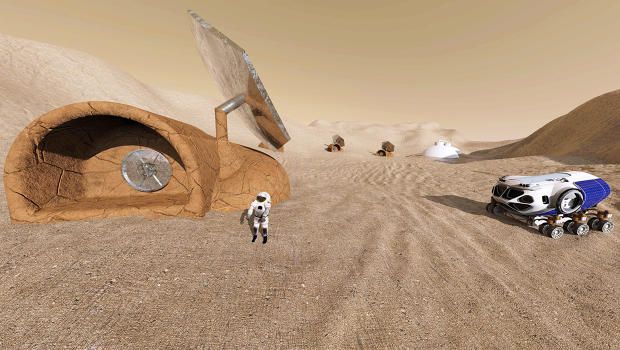

RedWorks Wants To Build Your First Home On Mars

Posted by Klaus Baldauf in categories: 3D printing, space

NASA projects we’ll be on Mars by the 2030s. RedWorks has created a 3D printing system to build your first home on the red planet.

Apr 12, 2016

Alejandro Aravena, Winner of This Year’s Pritzker Prize, Is Giving Away His Designs — By Margaret Rhodes | Wired

Posted by Odette Bohr Dienel in categories: architecture, open source

“This January, Alejandro Aravena received architecture’s highest honor. This week, the Chilean architect announced that his studio, Elemental, will open-source four of its affordable housing designs. The projects can be downloaded, for free, from Elemental’s website (here).”

Apr 12, 2016

Can optical technology solve the high performance computing energy conundrum?

Posted by Karen Hurst in categories: energy, quantum physics, supercomputing

Another interim, pre-quantum-computing solution for supercomputing. So we have this as well as Nvidia’s GPUs. I wonder who else is working on one?

In summer 2015, US president Barack Obama signed an order intended to provide the country with an exascale supercomputer by 2025. The machine would be 30 times more powerful than today’s leading system: China’s Tianhe-2. Based on extrapolations of existing electronic technology, such a machine would draw close to 0.5GW – the entire output of a typical nuclear plant. It brings into question the sustainability of continuing down the same path for gains in computing.
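The "30 times" and "0.5GW" figures can be roughly reproduced with a naive linear extrapolation. This is only a sketch: the Tianhe-2 performance and power numbers below are commonly cited values assumed for illustration (the excerpt gives neither), and real systems do not scale power linearly with performance.

```python
# Naive linear extrapolation of power draw from Tianhe-2 to an exascale machine.
# Assumptions (not stated in the excerpt): Tianhe-2 delivers about 33.86 PFLOP/s
# on Linpack and draws about 17.8 MW; "exascale" means 1000 PFLOP/s.
TIANHE2_PFLOPS = 33.86
TIANHE2_POWER_MW = 17.8
EXASCALE_PFLOPS = 1000.0


def extrapolated_power_mw(target_pflops: float,
                          base_pflops: float,
                          base_power_mw: float) -> float:
    """Scale power in proportion to performance (same energy per operation)."""
    return base_power_mw * (target_pflops / base_pflops)


speedup = EXASCALE_PFLOPS / TIANHE2_PFLOPS
power_mw = extrapolated_power_mw(EXASCALE_PFLOPS, TIANHE2_PFLOPS, TIANHE2_POWER_MW)

if __name__ == "__main__":
    print(f"Performance ratio: about {speedup:.0f}x")
    print(f"Extrapolated power: about {power_mw / 1000:.2f} GW")
```

With these assumed inputs the ratio comes out just under 30 and the power just over half a gigawatt, in line with the excerpt's figures.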

One way to reduce the energy cost would be to move to optical interconnect. In his keynote at OFC in March 2016, Professor Yasuhiko Arakawa of University of Tokyo said high performance computing (HPC) will need optical chip to chip communication to provide the data bandwidth for future supercomputers. But digital processing itself presents a problem as designers try to deal with issues such as dark silicon – the need to disable large portions of a multibillion transistor processor at any one time to prevent it from overheating. Photonics may have an answer there as well.

Continue reading “Can optical technology solve the high performance computing energy conundrum?” »

Apr 12, 2016

The Ultimate Debate – Interconnect Offloading Versus Onloading

Posted by Karen Hurst in categories: computing, quantum physics

When I read articles like this one, I wonder whether folks fully understand the impact of what quantum brings to all things in our daily lives.

The high performance computing market is going through a technology transition – the Co-Design transition. As has already been discussed in many articles, this transition has emerged in order to solve the performance bottlenecks of today’s infrastructures and applications, performance bottlenecks that were created by multi-core CPUs and the existing CPU-centric system architecture.

How are multi-core CPUs the source for today’s performance bottlenecks? In order to understand that, we need to go back in time to the era of single-core CPUs. Back then, performance gains came from increases in CPU frequency and from the reduction of networking functions (network adapter and switches). Each new generation of product brought faster CPUs and lower-latency network adapters and switches, and that combination was the main performance factor. But this could not continue forever. The CPU frequency could not be increased any more due to power limitations, and instead of increasing the speed of the application process, we began using more CPU cores in parallel, thereby executing more processes at the same time. This enabled us to continue improving application performance, not by running faster, but by running more at the same time.

Continue reading “The Ultimate Debate – Interconnect Offloading Versus Onloading” »

Apr 12, 2016

Can Artificial Intelligence Be Ethical?

Posted by Karen Hurst in categories: information science, robotics/AI

Good question. The answer to date: it depends on the “AI creator.” AI today depends entirely on the design and development work of architects, engineers, and others, and on the algorithms and information the AI is exposed to. Granted, we have advanced this technology; however, it is still based on logical design and principles, nothing more.

BTW, here is an example to consider in this argument. Suppose a bank buys a fully functional, autonomous AI, and an audit (such as SOX) uncovers that the AI solution embezzled funds (as in another report two weeks ago about an AI solution that stole money out of customer accounts). Who is at fault? Who gets prosecuted? Who gets sued? The bank, the AI technology company, or both? We must be ready to address these situations soon. Legislation and the courts are going to face some very interesting times in the near future, and consumers will probably take the brunt of the chaos.

A recent experiment in which an artificially intelligent chatbot became virulently racist highlights the challenges we could face if machines ever become superintelligent. As difficult as developing artificial intelligence might be, teaching our creations to be ethical is likely to be even more daunting.

Continue reading “Can Artificial Intelligence Be Ethical?” »