For the past decade, AI has been quietly seeping into daily life, from facial recognition to digital assistants like Siri or Alexa. These largely unregulated uses of AI are highly lucrative for those who control them but are already causing real-world harms to those who are subjected to them: false arrests; health care discrimination; and a rise in pervasive surveillance that, in the case of policing, can disproportionately affect Black people and disadvantaged socioeconomic groups.

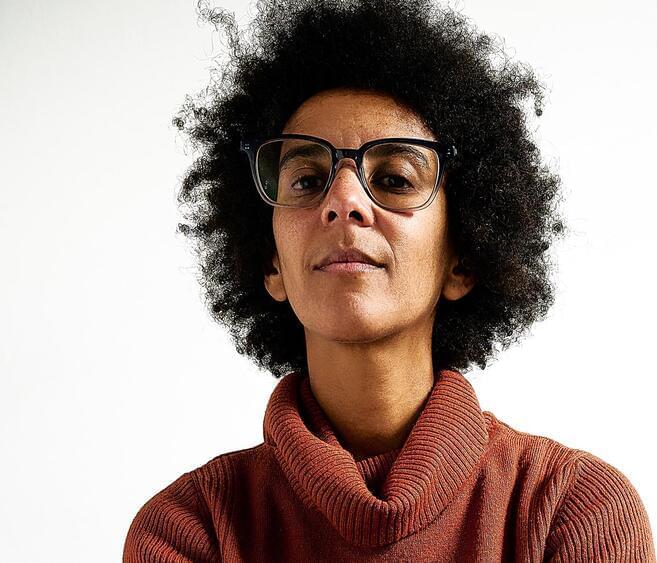

Gebru is a leading figure in a constellation of scholars, activists, regulators, and technologists collaborating to reshape ideas about what AI is and what it should be. Some of her fellow travelers remain in Big Tech, mobilizing those insights to push companies toward AI that is more ethical. Others, making policy on both sides of the Atlantic, are preparing new rules to set clearer limits on the companies benefiting most from automated abuses of power. Gebru herself is seeking to push the AI world beyond the binary of asking whether systems are biased and to instead focus on power: who’s building AI, who benefits from it, and who gets to decide what its future looks like.

The day after our Zoom call, on the anniversary of her departure from Google, Gebru launched the Distributed AI Research (DAIR) Institute, an independent research group she hopes will grapple with how to make AI work for everyone. “We need to let people who are harmed by technology imagine the future that they want,” she says.

When Gebru was a teenager, war broke out between Ethiopia, where she had lived all her life, and Eritrea, where both of her parents were born. It became unsafe for her to remain in Addis Ababa, the Ethiopian capital. After a “miserable” experience with the U.S. asylum system, Gebru finally made it to Massachusetts as a refugee. Immediately, she began experiencing racism in the American school system, where even as a high-achieving teenager she says some teachers discriminated against her, trying to prevent her from taking certain AP classes. Years later, it was a pivotal experience with the police that put her on the path toward ethical technology. She recalls calling the cops after her friend, a Black woman, was assaulted in a bar. When they arrived, the police handcuffed Gebru’s friend and later put her in a cell. The assault was never filed, she says. “It was a blatant example of systemic racism.”
