Everyone is in a big hurry to get the latest and greatest GPU accelerators to build generative AI platforms. Those who can’t get GPUs, or have custom devices that are better suited to their workloads than GPUs, deploy other kinds of accelerators.

The companies designing these AI compute engines have two things in common. First, they are all using Taiwan Semiconductor Manufacturing Co (TSMC) as their chip etching foundry, and many are using TSMC as their socket packager. And second, they have not lost their minds. With the devices launched so far this year, AI compute engine designers are hanging back a bit rather than trying to be on the bleeding edge of process and packaging technology, so they can make a little money on products and processes that were very expensive to develop.

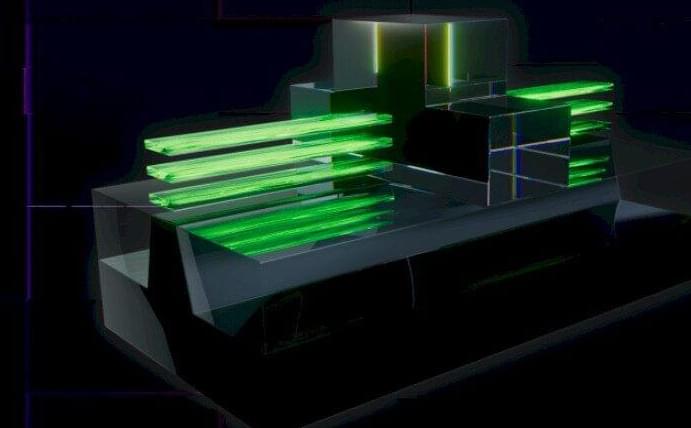

Nothing shows this better than the fact that the future “Blackwell” B100 and B200 GPU accelerators from Nvidia, which are not even going to start shipping until later this year, are based on the N4P process at TSMC. This is a refined variant of the N4 process that the prior generation of “Hopper” H100 and H200 GPUs used, which is also a 4 nanometer process.
