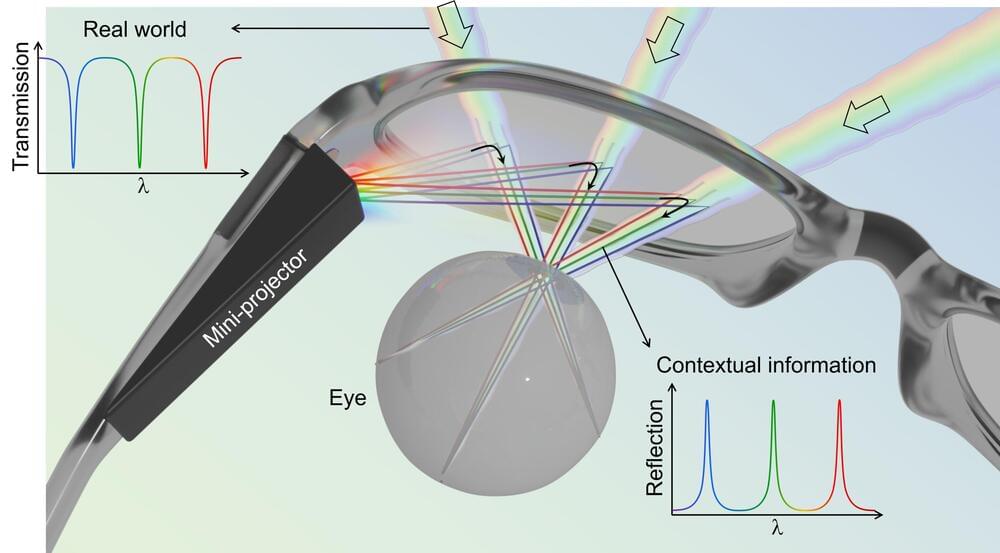

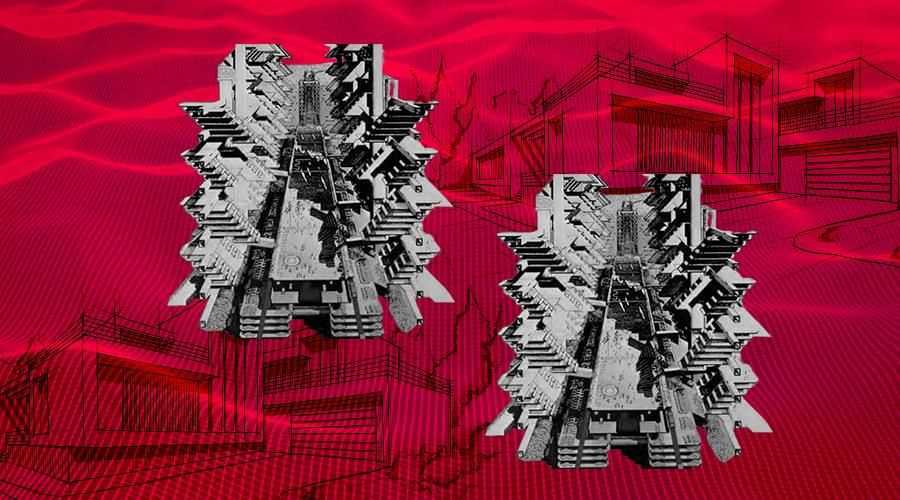

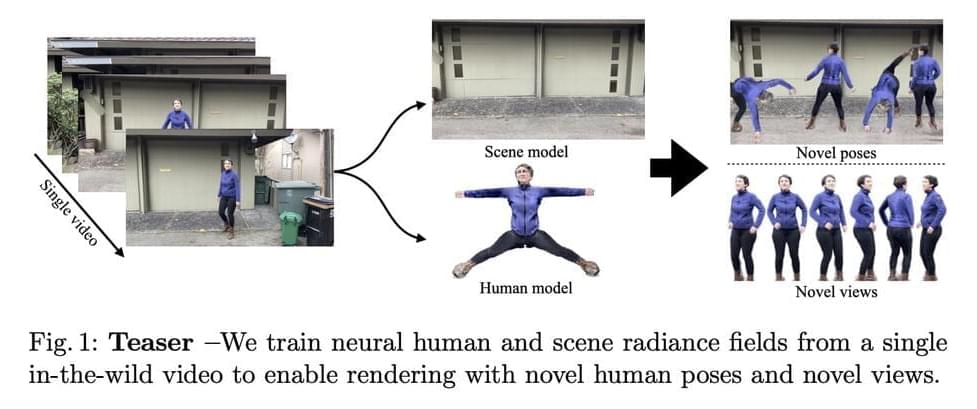

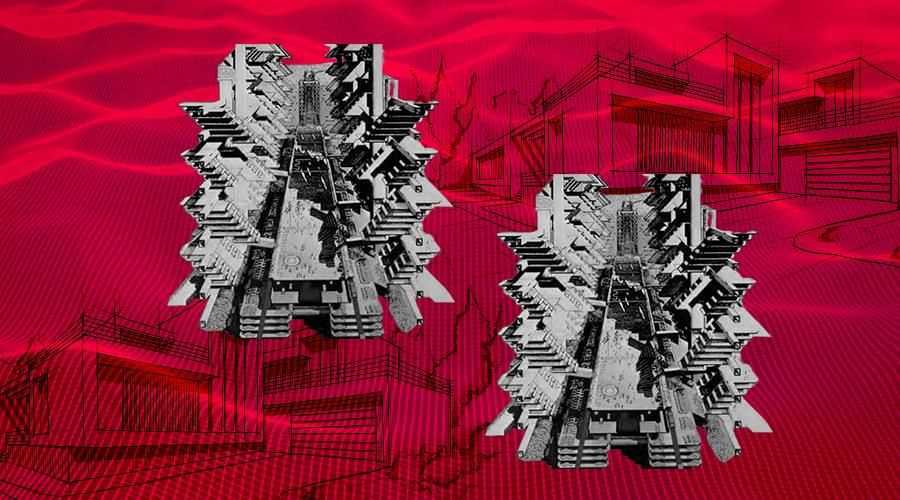

Those who are venturing into the architecture of the metaverse have already asked themselves this question. It is a playful environment where every formal dream is possible, and where factors that shape architecture, such as solar orientation, ventilation, and climate, no longer matter. To Louis Kahn's despair, there is no longer an interplay of light and shadow, just an open and infinite field. The metaverse is an extension of various technologies; some even describe it as a combination of several powerful ones: augmented reality, virtual reality, mixed reality, artificial intelligence, blockchain, and 3D worlds.

The technology is still being researched, yet the metaverse already seems poised to make a significant difference in education. Its ability to connect students around the world on a single platform may bring positive change. But the metaverse is not only about remote learning; it is much more than that.

Architecture emerged on the construction site, at a time when there was no drawing, only experimentation. Over time, thanks to Brunelleschi and the Florence dome in the 15th century, we witnessed the first detachment from masonry: a social division of labor from which the liberal arts and the mechanical arts emerged. This detachment created new challenges and placed architecture on a oneiric plane, tied to paper. In other words, architects do not build structures; they design them. Now, six centuries later, we seem ready to take another step away from the construction site, abruptly distancing ourselves from engineering and construction.