The hand could lead to advanced prosthetic hands for amputees or robots with the dexterity and strength to perform household tasks.

Category: robotics/AI

Wriggling robot worms team up to crawl up walls and cross obstacles

The slimy, segmented, bottom-dwelling California blackworm is about as unappealing as it gets—but get a few dozen or thousand together, and they form a massive, entangled blob that seems to take on a life of its own.

It may be the stuff of nightmares, but it is also the inspiration for a new kind of robot. “We look at the biological system, and we say, ‘Look how cool this is,’” said Senior Research Fellow Justin Werfel, who heads the Designing Emergence Laboratory at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS). Werfel is hooked on creating a robotic platform that’s inspired by a wriggling ball of blackworms and that, like the worms, can accomplish more as a group than as individuals.

Recently garnering a Best Paper on Mechanisms and Design award at the IEEE International Conference on Robotics and Automation, the Harvard team’s blackworm-inspired robotic platform consists of soft, thin, worm-like threads made out of synthetic polymer materials that can quickly tangle together and untangle.

What is the Church-Turing Thesis?

Modern-day computers have proved to be quite powerful in what they can do. The rise of AI has made things we previously only imagined possible. And the rate at which computers are increasing their computational power certainly makes it seem like we will be able to do almost anything with them. But as we’ve seen before, there are fundamental limits to what computers can do regardless of the processors or algorithms they use. This naturally leads us to ask what computers are capable of doing at their best and what their limits are, and answering that requires formalizing various definitions in computing.

This is exactly what happened in the early 20th century. Logicians and mathematicians were trying to formalize the foundations of mathematics through logic. One famous challenge that grew out of this effort was the Entscheidungsproblem, posed by David Hilbert and Wilhelm Ackermann. The problem asked whether there exists an algorithm that can decide, for any mathematical statement, whether it follows from a given set of axioms. Such an algorithm could be used to verify whether any mathematical system is internally consistent. Kurt Gödel’s incompleteness theorems of 1931 showed that any consistent axiomatic system powerful enough to describe arithmetic contains statements it can neither prove nor disprove, but they did not settle the Entscheidungsproblem itself.

In 1936, Alan Turing and Alonzo Church each proved, by separate and independent means, that no such algorithm exists. Turing did so by developing Turing machines (called automatic machines at the time) and the Halting problem. Church did so by developing lambda calculus. It was later proved that Turing machines and lambda calculus are equivalent in computational power. This led many mathematicians to theorize that computability could be defined by either of these systems. That in turn led to the thesis that bears Church’s and Turing’s names: every effectively calculable function is a computable function. In simpler terms, it states that any computation that can be carried out in any model of computation can also be carried out by a Turing machine or in lambda calculus. To better understand the implications of the Church-Turing thesis, we need to explore the different kinds of computational machines.
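To make the idea of lambda calculus as a model of computation a little more concrete, here is a minimal sketch in Python of Church numerals, the standard way of encoding natural numbers as pure functions. The helper names `to_int` and `from_int` are illustrative assumptions added only to convert between Church numerals and ordinary integers; they are not part of lambda calculus itself.

```python
# Church numerals: the number n is represented as a function that
# applies f to x exactly n times. Everything below is built only
# from single-argument functions, as in lambda calculus.
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))

# Addition: apply f n times, then m more times.
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))

# Multiplication: apply "f repeated n times" m times.
mul = lambda m: lambda n: lambda f: m(n(f))


def to_int(n):
    """Illustrative helper: decode a Church numeral into a Python int."""
    return n(lambda k: k + 1)(0)


def from_int(k):
    """Illustrative helper: encode a non-negative Python int as a Church numeral."""
    n = zero
    for _ in range(k):
        n = succ(n)
    return n


two = from_int(2)
three = from_int(3)
print(to_int(add(two)(three)))  # 2 + 3
print(to_int(mul(two)(three)))  # 2 * 3
```

The arithmetic here is done entirely by function application, yet it agrees with ordinary integer arithmetic. That agreement between very different formalisms is the kind of evidence the Church-Turing thesis rests on.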

AI Is Heading For an Energy Crisis That Has Tech Giants Scrambling

The artificial intelligence industry is scrambling to reduce its massive energy consumption through better cooling systems, more efficient computer chips, and smarter programming – all while AI usage explodes worldwide.

AI depends entirely on data centers, which could consume three percent of the world’s electricity by 2030, according to the International Energy Agency. That’s double what they use today.

Experts at McKinsey, a US consulting firm, describe a race to build enough data centers to keep up with AI’s rapid growth, while warning that the world is heading toward an electricity shortage.

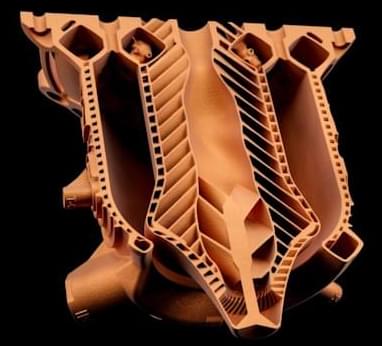

This aerospike rocket engine designed by generative AI just completed its first hot fire test

Aerospike-style engines haven’t been used more in the past because they’re difficult to design and make. While generally thought of as having the potential to be more efficient, they also require intricate cooling channels to help keep the spike cool. CEO and Co-Founder of LEAP 71, Josefine Lissner, credits the company’s computational AI, Noyron, with the ability to make these advancements.

“We were able to extend Noyron’s physics to deal with the unique complexity of this engine type. The spike is cooled by intricate cooling channels flooded by cryogenic oxygen, whereas the outside of the chamber is cooled by the kerosene fuel,” said Lissner. “I am very encouraged by the results of this test, as virtually everything on the engine was novel and untested. It’s a great validation of our physics-driven approach to computational AI.”

Lin Kayser, Co-Founder of LEAP 71, also believes the AI was paramount in achieving the complex design, explaining, “Despite their clear advantages, Aerospikes are not used in space access today. We want to change that. Noyron allows us to radically cut the time we need to re-engineer and iterate after a test and enables us to converge rapidly on an optimal design.”

NVIDIA CEO Jensen Huang Goes Against MIT Research & Says AI Makes Him Smarter

In an interview with CNN’s Fareed Zakaria, NVIDIA CEO Jensen Huang denied that using AI makes him less smart. Huang’s remarks came in response to an MIT study published last month, which found that users who relied on AI to write essays showed less activity across regions of the brain and were unable to think originally by the end. However, Huang, who said he had not come across the study, commented that using AI actually improved his cognitive skills.

MIT’s study indicated that users who relied on ChatGPT to write their essays ended up with lower brain activity after their third attempt. The researchers outlined that while ChatGPT essay writers did initially structure their essays and base their questions to the model on that structure, by their final attempt they were simply copying and pasting the model’s responses. Additionally, the ChatGPT users were unable to recall their work and “consistently underperformed at neural, linguistic, and behavioral levels.”

After CNN’s Fareed Zakaria questioned Huang about the study and asked him what he thought of it, the executive replied that he hadn’t “looked at that research yet.” However, Huang still disagreed with the research’s conclusions. “I have to admit, I’m using AI literally every single day. And, and, um, I think my cognitive skills are actually advancing,” he said. Commenting further, the NVIDIA CEO added that his skills were improving because he wasn’t asking the model to think for him. Instead, “I’m asking it to teach me things that I don’t know. Or help me solve problems that otherwise wouldn’t be able to solve reasonably,” he shared.

Tesla’s FSD Advantage Just Became Critical

Tesla’s technological advancements and strategic investments in autonomous driving, particularly in its Full Self-Driving technology, are giving the company a critical and potentially insurmountable lead in the industry.

Questions to inspire discussion.

Tesla’s AI and autonomous driving advancements.

🚗 Q: When will Tesla’s Dojo 2 supercomputer start mass production? A: Tesla’s Dojo 2 supercomputer is set to begin mass production by the end of 2025, providing a significant advantage in autonomous driving and AI development.

🧠 Q: How does Tesla’s AI system Grok compare to other AI? A: According to Jeff Lutz, Tesla’s AI system Grok is now the smartest AI in the world and will continue to improve with synthetic data training.

🚕 Q: What advantages does Tesla have in autonomous driving development? A: Tesla’s Full Self-Driving (FSD) technology allows the company to collect and use real-world data for AI model training, giving it a significant edge over competitors relying on simulated or internet data.

Tesla’s Operational Excellence.

Why I Am MUCH LESS Concerned About Tesla Robotaxi Now (Waymo Disasters)

Questions to inspire discussion.

📈 Q: How has Waymo’s crash rate changed over time? A: Waymo’s crash rate increased 8x from 10 to 80 per deployed vehicle between 2024 and 2025, despite only a 2-6x increase in fleet size, indicating a potential decrease in safety.

Operational Insights.

🤖 Q: What proportion of Waymo crashes involved fully autonomous vehicles? A: 521 out of 696 crashes (74.9%) involved fully autonomous vehicles without safety operators, while 167 had an onboard safety operator and 5 had a remote operator.

Market Expansion.

🌎 Q: How has Waymo’s expansion affected its safety record? A: Waymo’s aggressive scaling into new markets like Georgia and Austin, in response to Tesla’s growth, may be contributing to the higher crash rate beyond the increase in deployment rate.

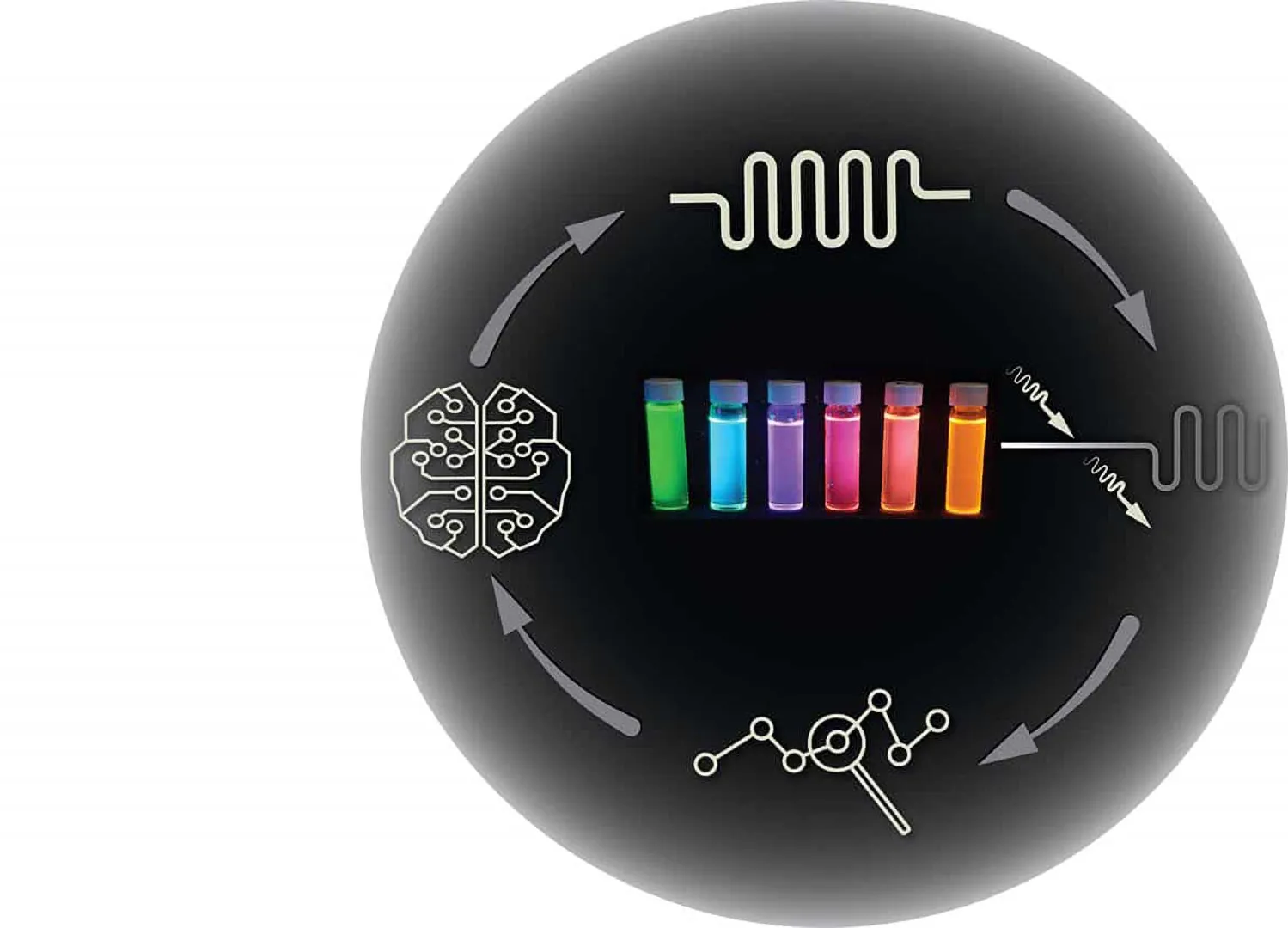

This AI-powered lab runs itself—and discovers new materials 10x faster

A new leap in lab automation is shaking up how scientists discover materials. By switching from slow, traditional methods to real-time, dynamic chemical experiments, researchers have created a self-driving lab that collects 10 times more data, drastically accelerating progress. This new system not only saves time and resources but also paves the way for faster breakthroughs in clean energy, electronics, and sustainability—bringing us closer to a future where lab discoveries happen in days, not years.