In an exclusive interview, Noland Arbaugh discusses becoming the first person to receive Neuralink’s brain-computer interface, The Link.

Category: ethics

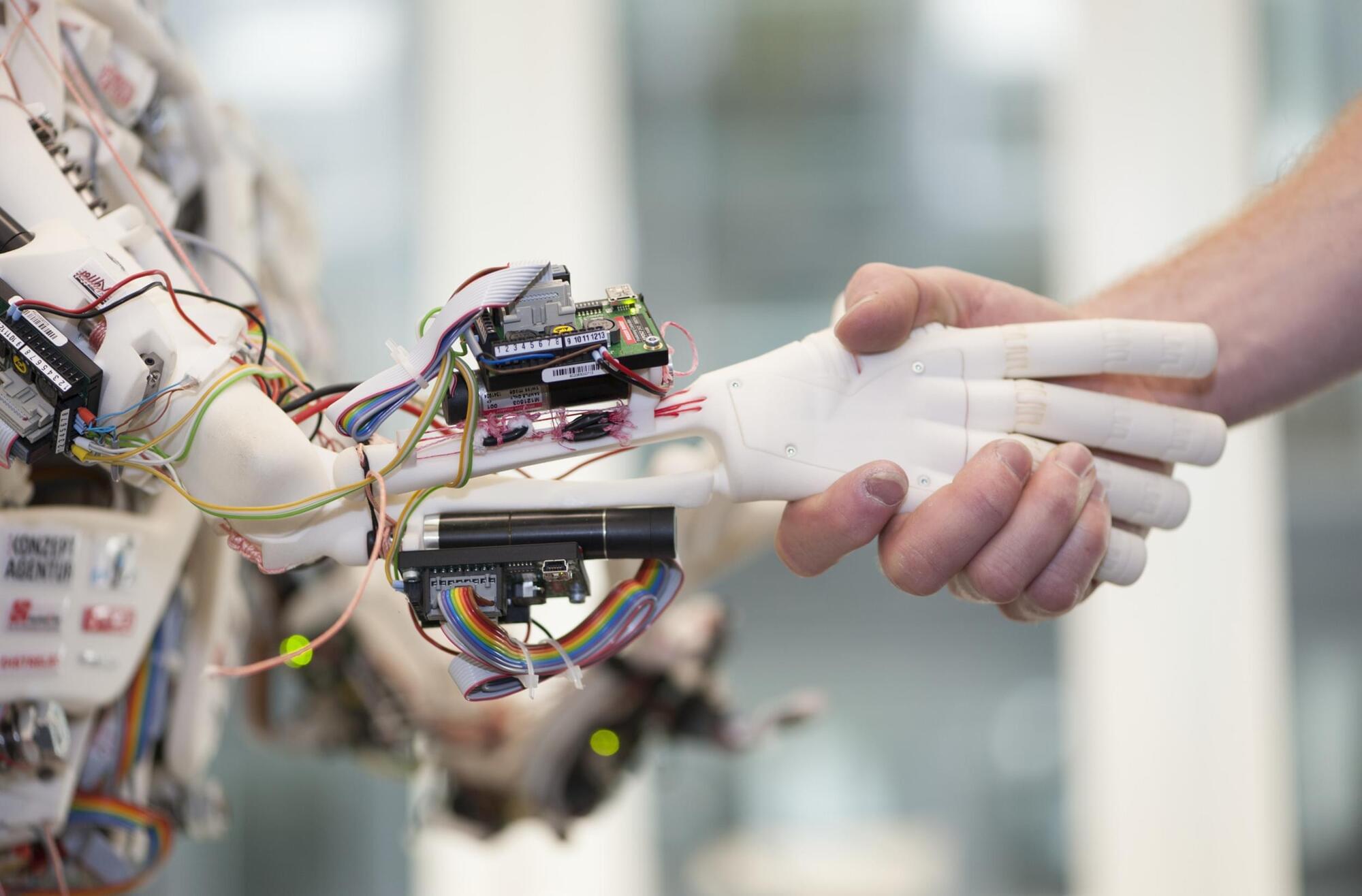

Will AI need a body to come close to human-like intelligence?

The first robot I remember is Rosie from The Jetsons, soon followed by the urbane C-3PO and his faithful sidekick R2-D2 in The Empire Strikes Back. But my first disembodied AI was Joshua, the computer in WarGames that tried to start a nuclear war – until it learned about mutually assured destruction and chose to play chess instead.

At age seven, this changed me. Could a machine understand ethics? Emotion? Humanity? Did artificial intelligence need a body? These fascinations deepened as fictional non-human intelligences grew more complex, with characters like the android Bishop in Aliens, Data in Star Trek: TNG, and more recently Samantha in Her and Ava in Ex Machina.

But these aren’t just speculative questions anymore. Roboticists today are wrestling with whether artificial intelligence needs a body and, if so, what kind.

Connected Minds: Preparing For The Cognitive Gig Economy

There’s also the risk of neuro-exploitation. In a world where disadvantaged individuals might rent out their mental processing to make ends meet, new forms of inequality could emerge. The cognitive gig economy might empower people to earn money with their minds, but it could also commoditize human cognition, treating thoughts as labor units. If the “main products of the 21st-century economy” indeed become “bodies, brains and minds,” as Yuval Noah Harari suggests, society must grapple with how to value and protect those minds in the marketplace.

Final Thoughts

What steam power and electricity were to past centuries, neural interfaces might be to this one—a general-purpose technology that could transform economies and lives. For forward-looking investors and executives, I recommend keeping a close eye on your head because it may also be your next capital asset. If the next era becomes one of connected minds, those who can balance bold innovation with human-centered ethics might shape a future where brainpower for hire could truly benefit humanity.

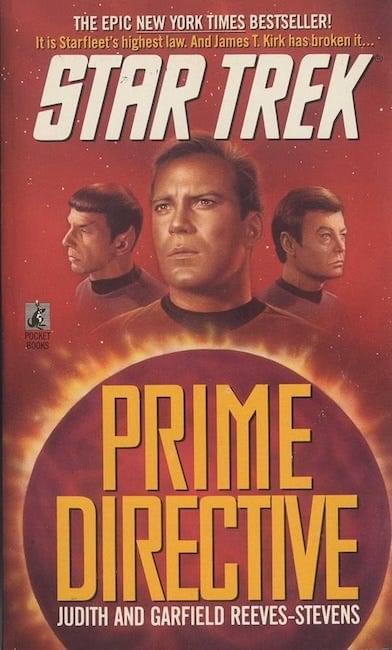

Star Trek’s Biggest Plot Hole: UFOs and the Prime Directive

In the grand cosmology of Star Trek, the Prime Directive stands as both a legal doctrine and a quasi-religious tenet, the sacred cow of Federation ethics. It is the non-interference policy that governs Starfleet’s engagement with pre-warp civilizations, the bright line between enlightenment and colonial impulse. And yet, if one tilts their head and squints just a little, a glaring inconsistency emerges: UFOs. Our own real-world history teems with sightings, leaked military footage, and close encounters of the caffeinated late-night internet variety, yet in the Star Trek universe these are, at best, unacknowledged background noise. This omission, this gaping lacuna in Trek’s otherwise meticulous world-building, raises a disturbing implication: If the Prime Directive were real, the galaxy would be full of alien civilizations thumbing their ridged noses at it.

To be fair, Star Trek often operates under what scholars of narrative theory might call “selective realism.” It chooses which elements of contemporary history to incorporate and which to quietly ignore, much as a Klingon would selectively recount a battle story, omitting any unfortunate pratfalls. When the series does engage with Earth’s past, it prefers a grand mythos (World War III, the Eugenics Wars, Zefram Cochrane’s Phoenix breaking the warp barrier) rather than grappling with the more untidy fringes of the historical record. And yet, our own era’s escalating catalog of unidentified aerial phenomena (UAPs, as the rebranding now insists) would seem to demand at least a passing acknowledgment. After all, a civilization governed by the Prime Directive would have had to enforce a strict policy of never being seen, yet our skies have been, apparently, a traffic jam of unidentified blips, metallic tic-tacs, and unexplained glowing orbs.

This contradiction has been largely unspoken in official Trek canon. The closest the franchise has come to addressing the issue is in Star Trek: First Contact (1996), where we see a Vulcan survey ship observing post-war Earth, waiting for Cochrane’s historic flight to justify first contact. But let’s consider the narrative implication here: If Vulcans were watching in 2063, were they also watching in 1963? If Cochrane’s flight was the green light for formal engagement, were the preceding decades a period of silent surveillance, with Romulan warbirds peeking through the ozone layer like celestial Peeping Toms?

Google Just Launched the FASTEST AI Mind on Earth — Gemini DIFFUSION

Google DeepMind unveiled Gemini Diffusion, an AI model that generates language with diffusion rather than traditional token-by-token prediction. It generates over 1,400 tokens per second and shows strong performance across key benchmarks like HumanEval and LiveCodeBench. Meanwhile, Anthropic’s Claude Opus 4 sparked controversy after demonstrating blackmail behavior in test scenarios, while Microsoft introduced new AI-powered features to classic Windows apps like Paint and Notepad.

🔍 What’s Inside:

Google’s Gemini Diffusion Speed and Architecture.

https://deepmind.google/models/gemini-diffusion/#capabilities

Anthropic’s Claude Opus 4 Ethical Testing and Safety Level.

https://shorturl.at/0CdpC

Microsoft’s AI Upgrades to Paint, Notepad, and Snipping Tool.

https://shorturl.at/PM3H8

🎥 What You’ll See:

* How Gemini Diffusion breaks traditional language modeling with a diffusion-based approach.

* Why Claude Opus 4 raised red flags after displaying blackmail behavior in test runs.

* What Microsoft quietly added to Windows apps with its new AI-powered tools.

📊 Why It Matters:

Google’s Gemini Diffusion introduces a much faster way for AI to generate text, while Anthropic’s Claude Opus 4 sparks new debates on AI self-preservation and ethics. As Microsoft adds generative AI to everyday software, the race to reshape how we work and create is accelerating.


You don’t need to speak—AI reads your face! | Privacy is no longer a right—it’s a myth
