Aug 17, 2024

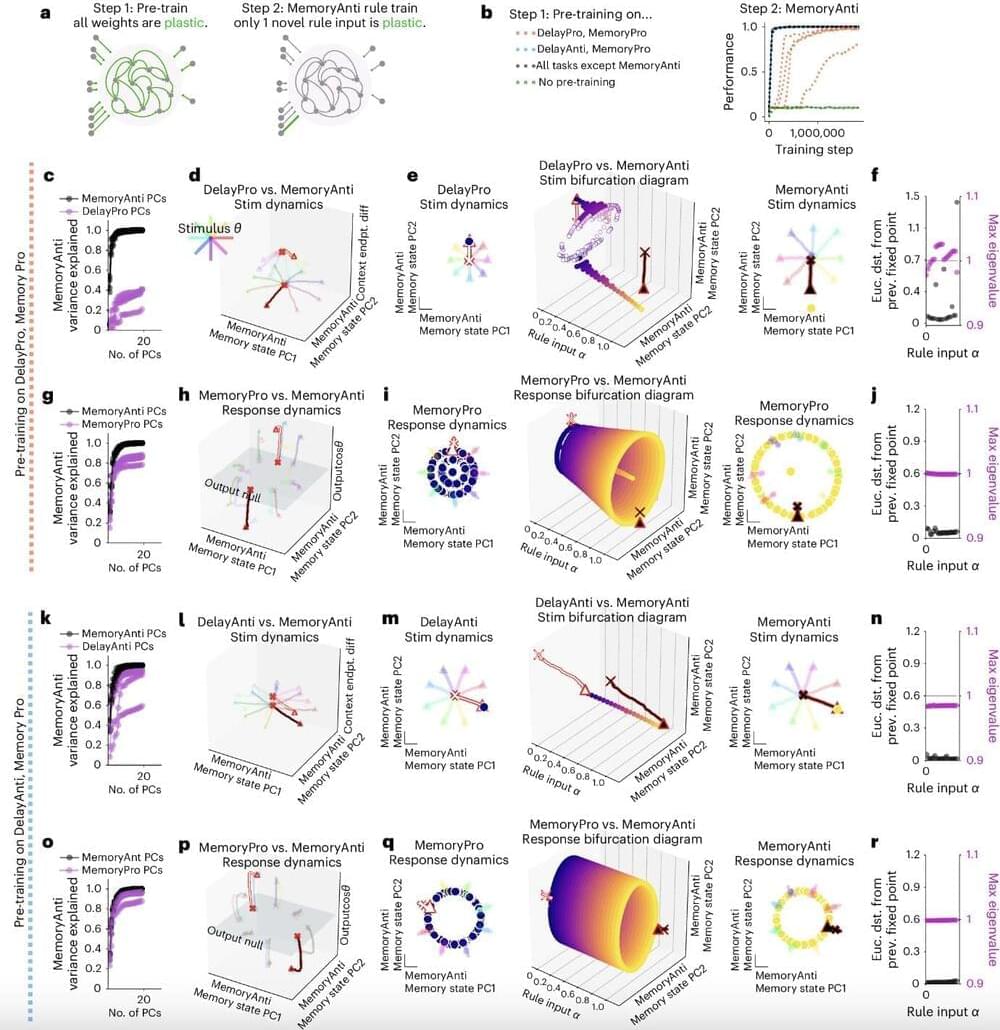

Flexible multi-task computation in recurrent neural networks relies on dynamical motifs, study shows

Posted by Dan Breeden in categories: biological, robotics/AI

Cognitive flexibility, the ability to rapidly switch between different thoughts and mental concepts, is a highly advantageous human capability. It supports multi-tasking, the rapid acquisition of new skills, and adaptation to new situations.

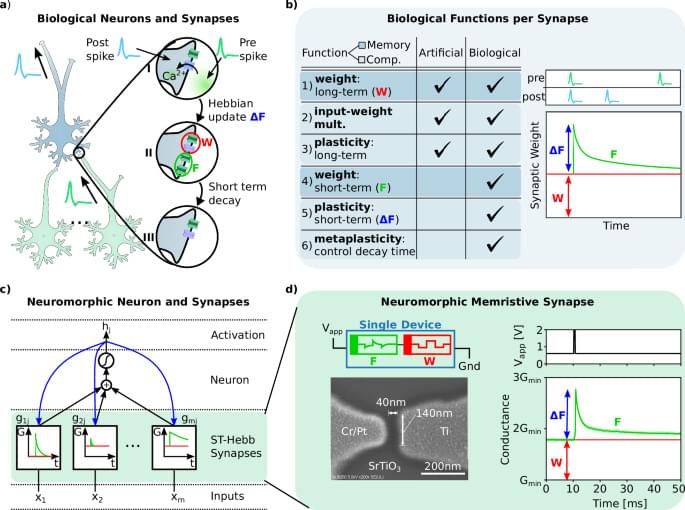

While artificial intelligence (AI) systems have become increasingly advanced over the past few decades, they currently do not exhibit the same flexibility as humans in learning new skills and switching between tasks. A better understanding of how biological neural circuits support cognitive flexibility, particularly how they support multi-tasking, could inform future efforts aimed at developing more flexible AI.

Recently, some computer scientists and neuroscientists have been studying neural computations using artificial neural networks. Most of these networks, however, were trained to tackle specific tasks individually rather than multiple tasks.