Apr 17, 2024

Quantum electronics: Charge travels like light in bilayer graphene

Posted by Shailesh Prasad in categories: computing, nanotechnology, particle physics, quantum physics

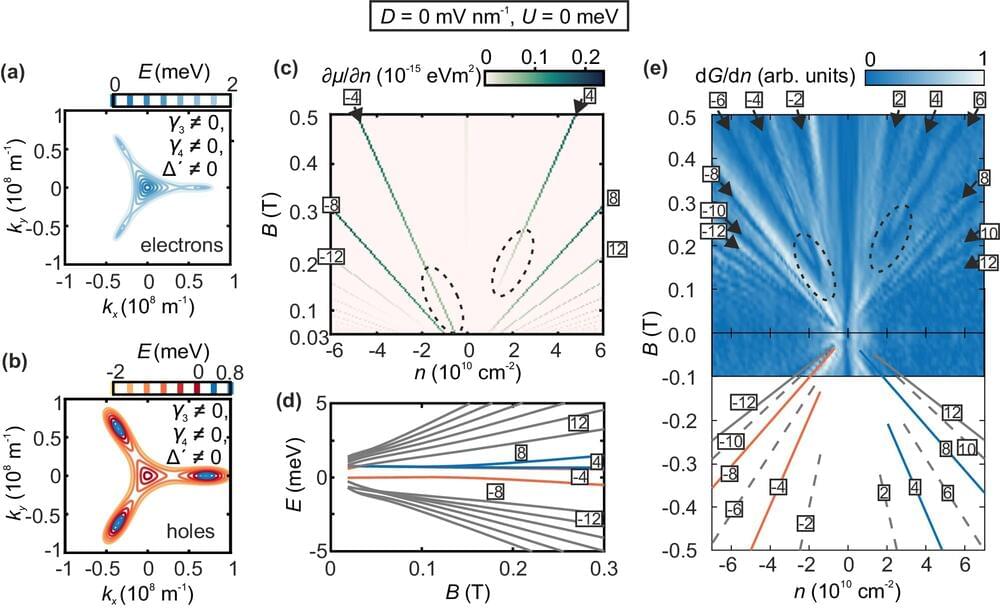

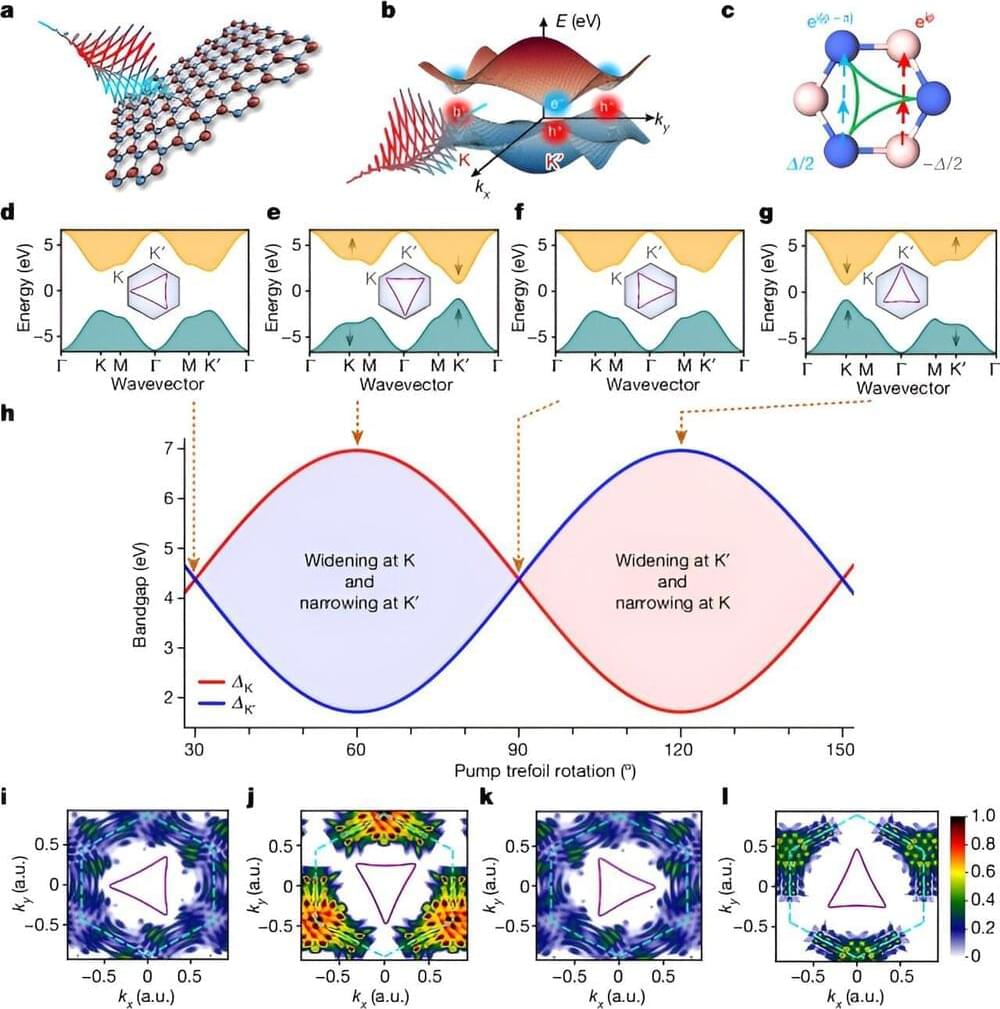

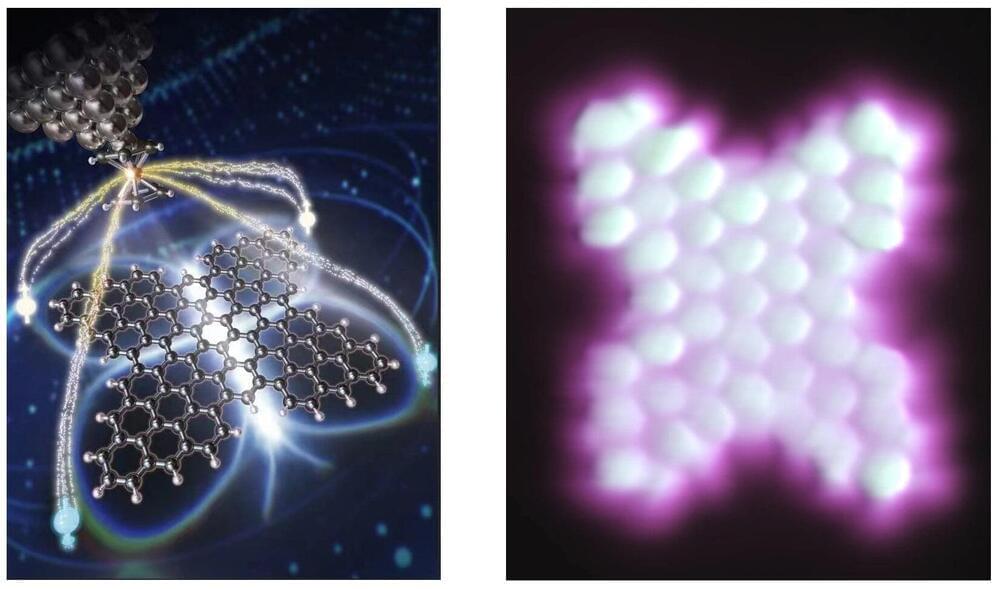

An international research team led by the University of Göttingen has demonstrated experimentally that electrons in naturally occurring double-layer (bilayer) graphene move like massless particles, in the same way that light travels. The team has also shown that this current can be "switched" on and off, which could help in developing tiny, energy-efficient transistors: a switch like the light switch in your house, but at the nanoscale.