Feb 18, 2016

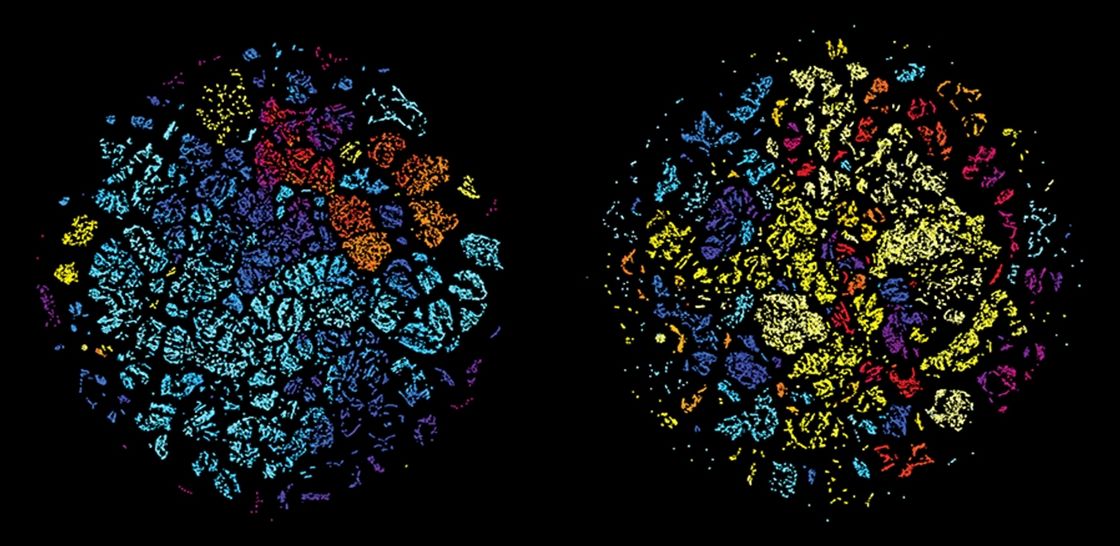

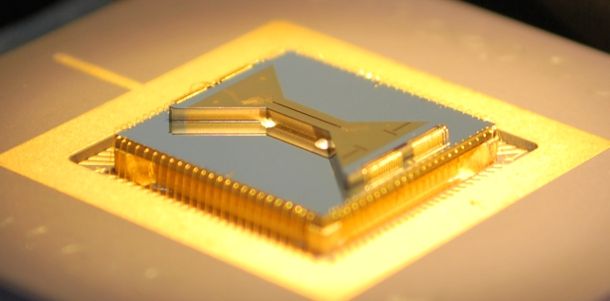

Brain scan for artificial intelligence shows how software thinks

Posted by Shailesh Prasad in categories: biotech/medical, computing, information science, robotics/AI

Neural networks have become enormously successful – but we often don’t know how or why they work. Now, computer scientists are starting to peer inside their artificial minds.

A PENNY for ’em? Knowing what someone is thinking is crucial for understanding their behaviour. It’s the same with artificial intelligences. A new technique for taking snapshots of neural networks as they crunch through a problem will help us fathom how they work, leading to AIs that work better – and are more trustworthy.

In the last few years, deep-learning algorithms built on neural networks – multiple layers of interconnected artificial neurons – have driven breakthroughs in many areas of artificial intelligence, including natural language processing, image recognition, medical diagnosis and beating a professional human player at the game of Go.
