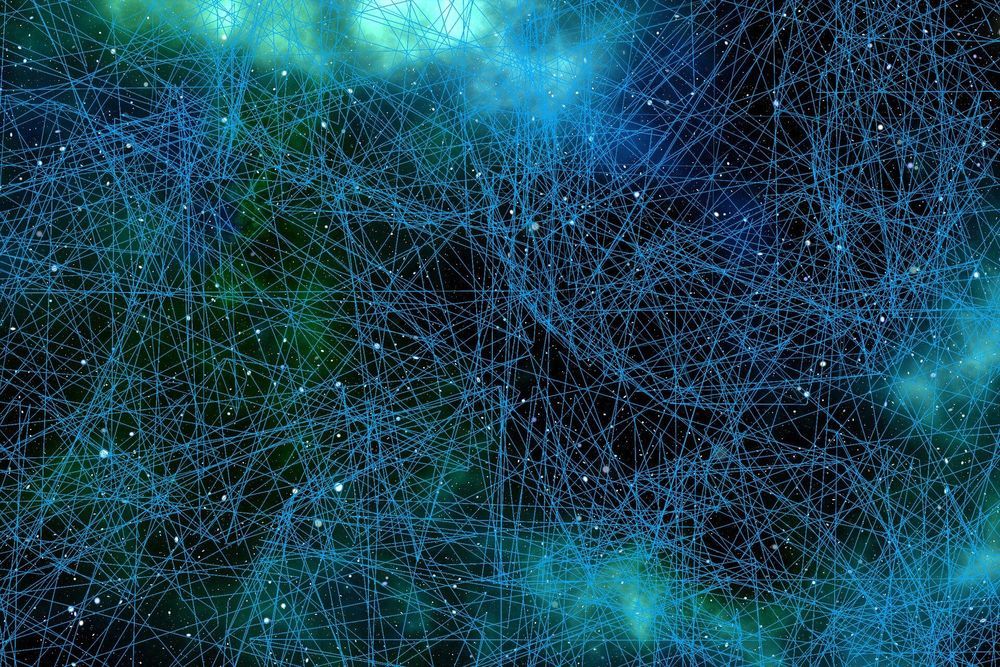

Accelerating progress in neuroscience is helping us understand both the big picture (how animals behave and which brain areas are involved in bringing about these behaviors) and the small picture (how molecules, neurons, and synapses interact). But there is a huge gap in knowledge between these two scales, from the whole brain down to the neuron.

A team led by Christos Papadimitriou, the Donovan Family Professor of Computer Science at Columbia Engineering, proposes a new computational system to expand the understanding of the brain at an intermediate level, between neurons and cognitive phenomena such as language. The group, which includes computer scientists from the Georgia Institute of Technology and a neuroscientist from the Graz University of Technology, has developed a brain architecture based on neuronal assemblies and demonstrates its use in syntactic processing during language production. Their model, published online June 9 in PNAS, is consistent with recent experimental results.

“For me, understanding the brain has always been a computational problem,” says Papadimitriou, who became fascinated by the brain five years ago. “Because if it isn’t, I don’t know where to start.”