On March 11, 2011, a 9.1-magnitude earthquake triggered a powerful tsunami, generating waves higher than 125 feet that ravaged the coast of Japan, particularly the Tohoku region of Honshu, the largest and most populous island in the country.

Nearly 16,000 people were killed, hundreds of thousands were displaced, and millions were left without electricity and water. Railways and roads were destroyed, and 383,000 buildings were damaged, including a nuclear power plant that suffered meltdowns in three reactors, prompting widespread evacuations.

One lesson for today's businesses, hit hard by the pandemic and by seismic cultural shifts, is that many of the Japanese companies in the Tohoku region continue to operate today, despite serious financial setbacks from the disaster. How did these businesses manage not only to survive but to thrive?

One reason, says Harvard Business School professor Hirotaka Takeuchi, was their dedication to meeting the needs of employees and the community first, guided by the moral purpose of serving the common good. Pursuing layoffs and other cost-cutting measures in the face of a crippled economy, he says, mattered less to these companies.

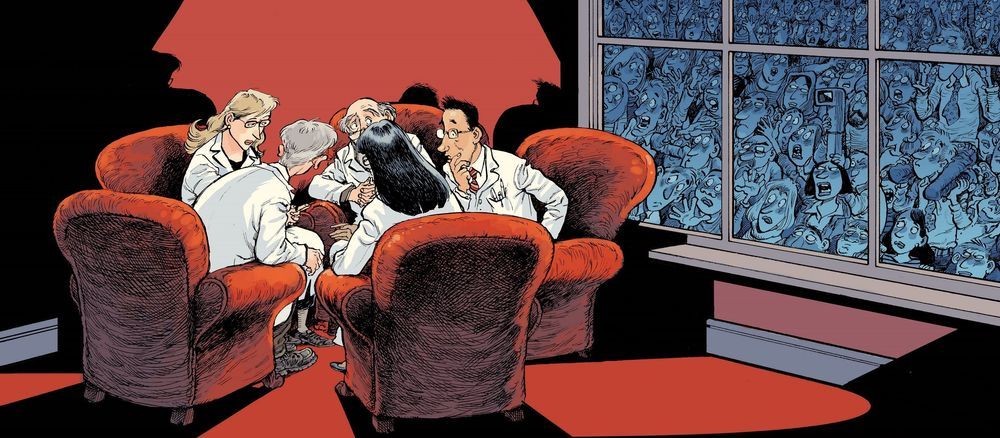

As demonstrated after the 2011 earthquake and tsunami, Japanese businesses have a unique capability for long-term survival. Hirotaka Takeuchi explains their strategy of investing in community over profits during turbulent times.