Frances Haugen’s testimony at the Senate hearing today raised serious questions about how Facebook’s algorithms work, and it echoes many findings from our previous investigation.

Proteins are structured like folded chains. These chains are composed of small units of 20 possible amino acids, each labeled by a letter of the alphabet. A protein chain can be represented as a string of these alphabetic letters, very much like a string of music notes in alphabetical notation.

Protein chains can also fold into wavy and curved patterns with ups, downs, turns, and loops. Likewise, music consists of sound waves of higher and lower pitches, with changing tempos and repeating motifs.

Protein-to-music algorithms can thus map the structural and physicochemical features of a string of amino acids onto the musical features of a string of notes.
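To make the idea concrete, here is a toy sketch of one possible mapping. It is an assumption for illustration, not any published protein-to-music algorithm. Each residue's Kyte-Doolittle hydropathy value (a standard physicochemical scale) is scaled linearly onto a MIDI pitch range, so hydrophobic residues sound high and hydrophilic ones sound low. Real methods also encode folding and structure, which this sketch ignores. The function `protein_to_midi` and the C3 to C6 pitch range are hypothetical choices.

```python
# Kyte-Doolittle hydropathy index for the 20 standard amino acids (one-letter codes)
KYTE_DOOLITTLE = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5,
    "Q": -3.5, "E": -3.5, "G": -0.4, "H": -3.2, "I": 4.5,
    "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8, "P": -1.6,
    "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}


def protein_to_midi(sequence: str, low_note: int = 48, high_note: int = 84) -> list[int]:
    """Map each residue to a MIDI note: most hydrophilic -> low_note, most hydrophobic -> high_note."""
    lo, hi = -4.5, 4.5  # range of the Kyte-Doolittle scale
    notes = []
    for residue in sequence.upper():
        if residue not in KYTE_DOOLITTLE:
            raise ValueError(f"not a standard amino acid: {residue!r}")
        frac = (KYTE_DOOLITTLE[residue] - lo) / (hi - lo)  # 0.0 .. 1.0
        notes.append(round(low_note + frac * (high_note - low_note)))
    return notes


# Usage: turn a short peptide into a melody of MIDI pitches
print(protein_to_midi("MKTAYIAKQR"))
```

A fuller mapping could also assign rhythm or note length from secondary structure (helices, sheets, loops), which the paragraph above hints at with its "turns and loops".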

When Dr. Robert Murphy first started researching biochemistry and drug development in the late 1970s, bringing a safe and effective pharmaceutical compound to market meant following a strict experimental pipeline, one that was only beginning to be enhanced by large-scale data collection and computer analysis.

Now head of the Murphy Lab for computational biology at Carnegie Mellon University (CMU), Murphy has watched over the years as data collection and artificial intelligence have revolutionized this process, making the drug creation pipeline faster, more efficient, and more effective.

Recently, that progress has come from applying machine learning (computer systems that learn and adapt by using algorithms and statistical models to analyze patterns in datasets) to drug development. This has been particularly important for reducing side effects, Murphy says.

About six years ago, the CEO of Toyota Research Institute published a seminal paper about whether a Cambrian explosion was coming for robotics. The term “Cambrian explosion” refers to an important event approximately half a billion years ago, when there was a rapid expansion of different forms of life on Earth. There are parallels with the field of robotics, as modern technological advances are fueling an analogous explosion in the diversity and applicability of robots. Today, we’re seeing this Cambrian explosion of robotics unfold, and many distinct patterns are emerging as a result. I’ll outline the top three trends that are rapidly evolving in the robotics space and are most likely to dominate for years to come.

1. The Democratization Of AI And The Convergence Of Technologies.

The birth and proliferation of AI-powered robots are being driven by the democratization of AI. For example, open-source machine learning frameworks are now broadly accessible; AI algorithms are openly available in cloud-based repositories like GitHub; and influential deep learning publications from top universities can now be downloaded. We now have access to more computing power and tools (e.g., Nvidia GPUs and the Omniverse platform), data, cloud-computing platforms (e.g., Amazon AWS), new hardware and advanced engineering. Many robotics startups are capitalizing on this “super evolution” of technology to build more intelligent and more capable machines.

November 12, 2020

RESEARCH TRIANGLE PARK, N.C. — A new machine learning algorithm, developed with Army funding, can isolate patterns in brain signals that relate to a specific behavior and then decode it, potentially providing Soldiers with behavioral-based feedback.

“The impact of this work is of great importance to Army and DOD in general, as it pursues a framework for decoding behaviors from brain signals that generate them,” said Dr. Hamid Krim, program manager, Army Research Office, an element of the U.S. Army Combat Capabilities Development Command, now known as DEVCOM, Army Research Laboratory. “As an example future application, the algorithms could provide Soldiers with needed feedback to take corrective action as a result of fatigue or stress.”

Brain signals contain dynamic neural patterns that reflect a combination of activities happening simultaneously. For example, a person can type a message on a keyboard while also registering that they are thirsty. A long-standing challenge has been isolating the patterns in brain signals that relate to a specific behavior, such as finger movements.

In her March 7 public lecture at Perimeter Institute, Emily Levesque discusses the history of stellar astronomy, present-day observing techniques and exciting new discoveries, and explores some of the most puzzling and bizarre objects being studied by astronomers today.

Perimeter Institute (charitable registration number 88981 4323 RR0001) is the world’s largest independent research hub devoted to theoretical physics, created to foster breakthroughs in the fundamental understanding of our universe, from the smallest particles to the entire cosmos. The Perimeter Institute Public Lecture Series is made possible in part by the support of donors like you. Be part of the equation: https://perimeterinstitute.ca/inspiring-and-educating-public.

The killer product of robotics is hidden in plain sight and may be just a few years away.

Summary

The robotics space is still waiting for a truly widespread, general-purpose product that deeply changes our everyday lives. I argue that such a product could be a low-cost collaborative manipulator powered by an ecosystem of AI-based applications. It will create value in novel ways and automate tasks at an unprecedented price point, affecting both our homes and businesses. As with smartphones, the real value lies in the apps sitting in the app store, which will be built by a new generation of startups and creators. I analyse where we stand, and I predict that we are just a few years away from seeing such a product go live.

Manipulators: State of the Art in Brief

When thinking about manipulators or robotic arms, the first thing that comes to mind is probably an industrial robot, like the one in the picture. These robots are precise, fast, durable and capable of lifting heavy weights. They are also very dangerous, so they need to be fenced off and kept separate from human workers.

A more recent development is the introduction of collaborative robots, or cobots. These robots are generally slower than industrial robots, but they can work safely in direct contact with humans thanks to sensors and algorithms that detect collisions and stop the robot’s motion just in time, enabling many new use cases. The market leader is Universal Robots (UR), a Danish company that recently sold its 50,000th robot. In 2021, the cheapest UR cobot can be purchased for about 20,000 USD, but more affordable competitors sell for less than 10,000 USD. The future looks bright for collaborative robots and for us consumers, since prices are expected to keep falling quickly following Wright’s Law: the price drops by a fixed percentage every time the cumulative number of units sold doubles.
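Wright’s Law can be written as C(n) = C(n0) · (1 − r)^log2(n / n0), where r is the fractional price drop per doubling of cumulative units. The sketch below applies it to the UR figures quoted above (about 20,000 USD at roughly 50,000 units). The 15% learning rate is a hypothetical value chosen for illustration, not a measured figure for cobots, and `wright_price` is an illustrative helper name.

```python
import math


def wright_price(ref_price: float, ref_units: float, units: float, learning_rate: float) -> float:
    """Price after `units` cumulative sales under Wright's Law.

    Every doubling of cumulative units multiplies the price by (1 - learning_rate).
    """
    doublings = math.log2(units / ref_units)
    return ref_price * (1.0 - learning_rate) ** doublings


# Reference point from the text: about 20,000 USD at roughly 50,000 units sold.
# The 15% drop per doubling is an assumed, illustrative learning rate.
REF_PRICE, REF_UNITS, RATE = 20_000.0, 50_000, 0.15

for units in (50_000, 100_000, 200_000, 400_000):
    price = wright_price(REF_PRICE, REF_UNITS, units, RATE)
    print(f"{units:>7} units -> {price:,.0f} USD")
```

Under this assumption, one more doubling (100,000 units) brings the price to about 17,000 USD. Changing `RATE` shows how sensitive the forecast is to the learning rate.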
Keep in mind that UR sold its 25,000th robot in 2018, so it doubled its all-time sales in just two years! Still, if you go through the tech specs of these arms, you will start to notice something: current robotic arms are overqualified for many of the mundane tasks we would like them to do, for instance in our kitchens and homes. Seriously, look at the UR5: when was the last time you loaded a dishwasher with 0.1 millimeter precision? Which kitchen appliance have you used for 35,000 hours at full capacity? What clothes weigh 5+ kilograms?

At the other end of the spec sheet, there is a market for toy and desktop robots, which are inexpensive but unfortunately suffer from limited reach, payload and overall reliability. Can a hybrid be built? I think so, and it may very well be the killer product of robotics.

What Does the iPhone of Robotics Look Like?

The killer product of robotics may be a collaborative robot that is intuitive to use and low cost, achieved by trading off speed and accuracy. Drawing on my research, this is what I believe it will look like:

Must have:
- Low cost: less than 1,000 USD for the robot hardware.
- Compatible with a wide range of end effectors, to cover multiple use cases.
- Lightweight and compliant: able to interact safely in environments with untrained staff and children, and easy to carry around.
- Self-contained and portable: it includes a computing platform with a form factor similar to a Raspberry Pi or Jetson Nano and a standard networking stack (Wi-Fi, Bluetooth). It should be easy to deploy third-party apps on it.

Nice to have:
- 6 degrees of freedom (7 DOF gives more flexibility, but 6 DOF can already reach any position and orientation within the workspace while being easier to control and more affordable)
- Reach: 700 mm
- Payload: 2 kg
- Repeatability: 1 mm
- Max speed: 0.5 m/s
- Operating lifetime: 10,000 hours at full capacity
- Open source and ROS compatible

Payload and reach are very use-case dependent.
If I had to pick a sweet spot for a general-purpose robot, I would say 3 kg and 750 mm.

One of the crucial points above is the ability to install third-party apps. Today, robotics companies are forced to curate the whole software-hardware stack to deliver a product, so the companies building the robot behaviours (say, the algorithms controlling the stirring movements of an omelette-making robot) are also the companies selling the robotic hardware to the end customer. Sometimes they are also the companies building the hardware, though it’s more common to see companies buy hardware off the shelf, say a UR cobot, and customise it. On top of this, the same companies today also build the user interface, say a mobile app, to actually control the robot.

When a popular general-purpose cobot becomes available on the market, a clear division will emerge between the company selling the general-purpose hardware (and the onboard app store) and the companies building apps for it, that is, the skills of the cobot. Translated into smartphone terms, the robot and app store manufacturer is Apple, while the app developers are Facebook, Netflix, etc. To push the analogy further, you can think of your smartphone as a very limited robot, with capabilities such as making sounds and coloring the screen, but no movement capabilities (apart from vibrations!). A cobot will have multiple apps installed, exactly like our smartphones, and will be able to perform different tasks as programmed by domain experts in each use case. These domain experts will be 100% software-based robotics companies. It is in this period that we will see exponential growth in cobot capabilities, as companies can iterate quickly in software. To sustain this demand for new use cases, companies will mainly take approaches based on computer vision, supervised learning and reinforcement learning.
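The split between the hardware vendor, which owns the low-level API and the app store, and the third-party skill developers can be sketched as a plugin interface. Everything here is hypothetical. `ArmAPI`, `Skill`, `SkillStore` and the `StirPan` skill are illustrative names, not an existing robot SDK. The arm simply logs commands instead of moving.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class ArmAPI:
    """Hypothetical low-level API a cobot manufacturer might expose to apps."""

    def __init__(self) -> None:
        self.log: list[str] = []  # record of commands, standing in for real motion

    def move_to(self, x: float, y: float, z: float) -> None:
        self.log.append(f"move_to({x:.2f}, {y:.2f}, {z:.2f})")

    def set_gripper(self, closed: bool) -> None:
        self.log.append(f"gripper({'closed' if closed else 'open'})")


class Skill(ABC):
    """A third-party app ("skill") that talks to the arm only through ArmAPI."""

    name: str

    @abstractmethod
    def run(self, arm: ArmAPI) -> None: ...


class SkillStore:
    """Hypothetical onboard app store: installs skills and runs them by name."""

    def __init__(self, arm: ArmAPI) -> None:
        self.arm = arm
        self.installed: dict[str, Skill] = {}

    def install(self, skill: Skill) -> None:
        self.installed[skill.name] = skill

    def run(self, name: str) -> None:
        self.installed[name].run(self.arm)


class StirPan(Skill):
    """Example skill from a hypothetical kitchen-app developer."""

    name = "stir_pan"

    def run(self, arm: ArmAPI) -> None:
        arm.set_gripper(closed=True)            # hold the spatula
        for dx in (0.05, -0.05, 0.05, -0.05):   # a few strokes across the pan
            arm.move_to(0.40 + dx, 0.0, 0.10)


# Usage: the vendor ships ArmAPI + SkillStore; a developer ships StirPan.
arm = ArmAPI()
store = SkillStore(arm)
store.install(StirPan())
store.run("stir_pan")
print(arm.log)
```

The design choice mirrors the smartphone analogy. Skills never touch motors directly, so the vendor can change hardware behind `ArmAPI` without breaking apps.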
In the very long term, I see a further division between robotics companies building the low-level APIs to control the robot and companies specialising in the user interface for interacting with it, which will include mobile apps and voice and gesture commands.

Figure: Evolution of the robotic ecosystem over time (from 2026 onward, “robot manufacturers” refers only to manufacturers of cobots that allow third-party apps). The years indicate approximately when each model is predicted to first hit the market.

What Will We Use These Collaborative Robots For?

Pretty much everything. Let’s do a quick calculation to see why. Let’s assume that apps will have a subscription fee comparable to today’s smartphone apps, say the roughly 20 USD of a monthly Netflix subscription. By the way, given the success of the freemium model for mobile apps, I would not be surprised to see free-to-use robot skills. Let’s also say that each cobot will last about 3 years. This is much less than a cobot could last, but it is in line with the replacement cycle of high-end gadgets such as smartphones (we should strive to do better; the environment would be thankful). As a final assumption, let’s add another 30 USD per month for a subscription package that includes robot maintenance and support plus the cost of electricity. Over 3 years, that’s 50 × 36 + 1,000 = 2,800 USD, roughly 3,000 USD, to buy the robot and use it with a single app, or about 3 USD per day. Let’s take as a reference the lowest minimum wage in London, the one for apprentices, which is about 5 USD per hour. This means that for the robot investment to break even, it’s enough for the robot to automate just 36 minutes’ worth of tasks a day! With California’s 2022 minimum wage of 15 USD per hour, this goes down to 12 minutes!
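The back-of-the-envelope numbers above can be reproduced in a few lines. The sketch uses the article’s assumptions: 1,000 USD of hardware, 20 USD for an app plus 30 USD for support per month, and a 3-year life. The helper names are illustrative. It prints both the exact figures and the figures based on the article’s rounded 3 USD per day.

```python
def total_cost_usd(hardware: float, monthly_fees: float, months: int) -> float:
    """Hardware price plus recurring fees over the robot's lifetime."""
    return hardware + monthly_fees * months


def breakeven_minutes_per_day(daily_cost: float, hourly_wage: float) -> float:
    """Minutes of human work per day the robot must replace to pay for itself."""
    return daily_cost / hourly_wage * 60


MONTHS = 36                       # 3-year replacement cycle
total = total_cost_usd(1_000, 20 + 30, MONTHS)
daily_exact = total / (365 * 3)   # ignores leap days

print(f"total: {total:,.0f} USD, per day: {daily_exact:.2f} USD")
for label, wage in (("London apprentice", 5.0), ("California 2022", 15.0)):
    exact = breakeven_minutes_per_day(daily_exact, wage)
    rounded = breakeven_minutes_per_day(3.0, wage)   # the article's rounded 3 USD/day
    print(f"{label}: {exact:.1f} min/day exact, {rounded:.0f} min/day at 3 USD/day")
```

The total comes to 2,800 USD, or about 2.56 USD per day. With the exact daily cost, break-even is roughly 31 minutes a day at 5 USD/hour and 10 minutes at 15 USD/hour. Rounding up to 3 USD per day gives the 36 and 12 minutes quoted above.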
Personally, I’m very excited about the role they will play in removing repetitive tasks from our kitchens, lowering the cost of preparing food and increasing consistency without compromising on quality. The first deployments will be in commercial kitchens, including dark kitchens, cafeterias and restaurants; then they will start entering the home kitchen market. For commercial kitchens this will be the second wave of robotics, as more expensive collaborative robots are already entering kitchens as we speak, since their economics are viable today. I may cover this topic in a future blog post.

Inside our homes they will take on tasks like loading and unloading the washing machine, folding clothes, doing laundry and general home clean-up. There are multiple ways for manipulators to move between places, including being mounted on mobile bases and rails, but I believe very low-tech solutions will gain traction first: small fixed bases where the robot can clamp on and draw power after being physically moved there by the owner (a “robot charger”?).

Other areas that will be affected by low-cost robots include those with repetitive manual actions, like laboratories, hospitals, agriculture, packaging and light manufacturing, as well as remote sites that require infrequent manual intervention. We will also see completely novel applications in communication (“physical” Zoom calls!?), remote work and entertainment.

I want to stress that all these use cases are very price sensitive, so it’s really the ability of robot manufacturers to offer a very low cost that will enable this ecosystem to blossom. I see the low cost coming from four main sources:
- Lowering all the specs that make current collaborative robots overqualified.
- Decoupling the sale of hardware from the sale of the final use case.
- New revenue streams: app-store fees, data mining, new advertising channels.
- Robotics-as-a-Service business models, that is, further revenue from support, maintenance and upselling.

Where We Stand and What to Expect Next

Let’s start with the hardware: how far are we from good-enough specs at a good price point? There is work to do, but we are getting there. This is an early-stage prototype that we built last year at Nyrvan in 2 weeks (we didn’t tune the PID parameters much, which resulted in quite a lot of shaking!). The 6-DOF cobot in the video could perform basic kitchen tasks with a 1 kg payload. We used carbon fiber tubes for the links, 3D-printed the joint structures in PLA (something with higher heat resistance would probably have been better), and used an Arduino as the computing unit and a 48 V power supply. All these components can be bought cheaply on Amazon. The lion’s share of the bill of materials goes to the actuators: we chose brushless servo motors from Gyems, models RMD-X7, RMD-X8 and RMD-X8 PRO, for about 2,000 USD in total. Another option we considered was T-Robot. In total we spent less than 2,500 USD to assemble a cobot, buying all the components at retail price. Bulk discounts on these robotic components are actually substantial, up to 40%. As building hardware is not our focus, this was simply an excellent exercise in probing the future of robotics.

During my research, I discovered interesting projects and startups that look well equipped to offer or inspire these robotic platforms in the future. I’ll cover a few of them here, but I’m surely missing many good ones. Please let me know about other promising projects in the comments!

Project Blue, from Berkeley’s Robot Learning Lab, is a low-cost compliant robot arm described in the paper “Quasi-Direct Drive for Low-Cost Compliant Robotic Manipulation”, and it can be used for daily tasks such as folding clothes. The paper also includes a manufacturing BoM estimate showing that the cost of the robot arm can be brought down to 1,250 USD, assuming 10,000 units sold.
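The prototype’s shaking from barely tuned PID parameters is easy to reproduce in simulation. The sketch below is a textbook discrete PID loop driving a single idealised joint. The joint model (unit inertia, no friction or gravity) is an assumption for illustration only. The gains are hypothetical and unrelated to the Nyrvan prototype or to the Gyems motors’ built-in controllers. The integral gain is set to zero in the demo for simplicity.

```python
class PID:
    """Textbook discrete PID controller acting on the position error."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative kick on the first sample

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def step_response(kp, ki, kd, target=1.0, dt=0.001, duration=3.0):
    """Simulate a unit-inertia joint moving from 0 to `target` rad; return (final, peak)."""
    pid = PID(kp, ki, kd)
    angle = velocity = peak = 0.0
    for _ in range(int(duration / dt)):
        torque = pid.update(target - angle, dt)
        velocity += torque * dt      # unit inertia: acceleration equals torque
        angle += velocity * dt       # semi-implicit Euler integration
        peak = max(peak, angle)
    return angle, peak


# Well-damped gains versus the same stiffness with too little derivative action.
for name, gains in (("tuned", (100.0, 0.0, 20.0)), ("under-damped", (100.0, 0.0, 2.0))):
    final, peak = step_response(*gains)
    print(f"{name:>12}: overshoot {peak - 1.0:+.2f} rad, final {final:.3f} rad")
```

With kp = 100 and kd = 20, the idealised joint is critically damped and settles without overshoot. Cutting kd to 2 leaves it ringing with roughly 70% overshoot, which is the kind of oscillation that shows up as shaking on a real arm.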
Elephant Robotics sells myCobot, which introduces the very interesting concept of small cobots with an integrated computing unit (a Raspberry Pi). The cobots can integrate with ROS and overall tick many of the boxes of an iPhone of robotics.

Innfos is another Chinese company, with a very interesting modular robotic arm offering impressive specs for less than 1,000 USD. Unfortunately, after a successful Kickstarter campaign, they ran into internal issues and had to close the company.

A shout-out also to Skyentific, who runs a super thoughtful YouTube channel on robotics. I spoke with him some time ago; he is a really cool guy, and he is spreading a lot of knowledge about low-cost robotic arms.

Overall, the area is still very niche, and projects often have trouble going from early stage to growth stage: it’s hard to get funded, since angel investors and venture capitalists have a strong bias against hardware-heavy projects. Part of the problem is also a lack of demand, since in the past it was very hard to program collaborative robots, and therefore hard and expensive to build actual products out of them. I would not say that it’s easy to build robotic software today (the best backend, frontend and DevOps practices are very often ignored in robotics, where a good share of developers come from an academic or mechanical/electronics background), but things are becoming far more standardised and scalable thanks to standards like the Robot Operating System (ROS) and large pretrained computer vision models. Advances in AI models applied to control and manipulation are also opening up dynamic use cases like pick-and-place of arbitrary objects.

Regarding a proper app store, it’s a chicken-and-egg problem: we will not see one until an affordable general-purpose cobot platform pops up. Also, today only a very small number of developers can program these robots, and they are employed by the companies selling the use case vertically.
So, what should we expect next? The cost of the hardware should keep going down over the next few years, thanks to increasing sales and falling software development costs. Tech directions to lower the cost of cobot hardware also include:
- Modular designs, cutting the cost of each degree of freedom through economies of scale.
- Gearless motors and other latest-generation motors, to get rid of expensive force-torque sensors, brakes and harmonic gears.
- Vision-based control, to ease inverse kinematics and reduce the cost of position encoders and sensors.

In terms of geography, all indications point to Chinese companies taking the lead in development, at least for the hardware platform. The software situation is much more distributed.

I think it would be nice to conclude this blog post with an actual prediction, so here we go. If I had to guess the exact timeline, I would say that a collaborative robot with the specs and price point described above will be announced by the end of 2026, so within 5 years. Shortly after, say 1 to 2 years later, I believe we will see the first ecosystem approaches and the first cobots shipping with an app store. What do you think? Let me know in the comments!

China’s new rules on auto data require car companies to store important data locally.

Cars today offer high-tech features and gather troves of data to train algorithms. As China steps up controls over new technologies, WSJ looks at the risks for Tesla and other global brands that are now required to keep data within the country. Screenshot: Tesla China.

Quantum physics is directly linked to consciousness: observations do not just change what is measured, they create it… Here’s the next episode of my new documentary Consciousness: Evolution of the Mind (2021), Part II: CONSCIOUSNESS & INFORMATION

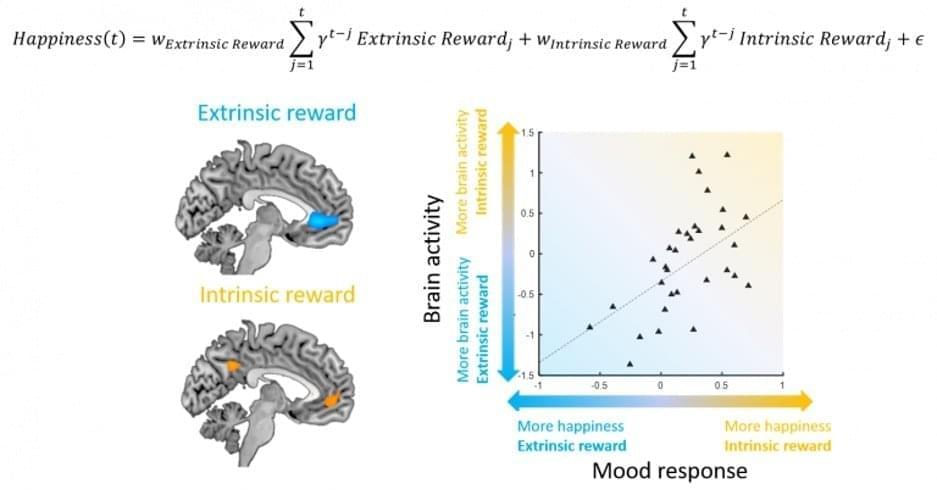

“Our mathematical equation lets us predict which individuals will have both more happiness and more brain activity for intrinsic compared to extrinsic rewards. The same approach can be used in principle to measure what people actually prefer without asking them explicitly, but simply by measuring their mood.”

Summary: A new mathematical equation predicts which individuals will have more happiness and increased brain activity for intrinsic rather than extrinsic rewards. The approach can be used to predict personal preferences based on mood and without asking the individual.

Source: UCL

A new study led by researchers at the Wellcome Centre for Human Neuroimaging shows that using mathematical equations with continuous mood sampling may be better at assessing what people prefer than asking them directly.

People can struggle to accurately assess how they feel about something, especially something they feel social pressure to enjoy, like waking up early for a yoga class.
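The excerpt does not give the study’s equation, so the sketch below is only a generic stand-in under stated assumptions. Mood at each sample is modelled as a baseline plus exponentially discounted sums of recent extrinsic and intrinsic reward events, with a separate weight for each. This structure is loosely in the spirit of earlier momentary-happiness models. Fitting those weights from mood ratings alone, by ordinary least squares, then indicates which kind of reward moves a person’s mood more, without asking them directly. The function names, the fixed discount `gamma` and the synthetic data are all hypothetical.

```python
def discounted(events, gamma):
    """Running sums s_t = sum over j <= t of gamma**(t - j) * events[j]."""
    out, s = [], 0.0
    for x in events:
        s = gamma * s + x
        out.append(s)
    return out


def predict_mood(extrinsic, intrinsic, w0, w_ext, w_int, gamma):
    """Assumed mood model: baseline + weighted, discounted recent rewards of each kind."""
    e, i = discounted(extrinsic, gamma), discounted(intrinsic, gamma)
    return [w0 + w_ext * a + w_int * b for a, b in zip(e, i)]


def fit_weights(mood, extrinsic, intrinsic, gamma):
    """Ordinary least squares for (w0, w_ext, w_int) via the 3x3 normal equations."""
    rows = [(1.0, a, b) for a, b in zip(discounted(extrinsic, gamma), discounted(intrinsic, gamma))]
    xtx = [[sum(r[p] * r[q] for r in rows) for q in range(3)] for p in range(3)]
    xty = [sum(r[p] * y for r, y in zip(rows, mood)) for p in range(3)]
    m = [xtx[p] + [xty[p]] for p in range(3)]  # augmented matrix [X^T X | X^T y]
    for col in range(3):  # Gaussian elimination with partial pivoting
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    w = [0.0] * 3
    for r in range(2, -1, -1):  # back substitution
        w[r] = (m[r][3] - sum(m[r][c] * w[c] for c in range(r + 1, 3))) / m[r][r]
    return tuple(w)


# Synthetic session: 1 marks a reward event on that trial (money vs. e.g. learning something).
ext = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]
intr = [0, 1, 0, 0, 1, 0, 1, 1, 0, 0]
mood = predict_mood(ext, intr, w0=0.2, w_ext=0.3, w_int=0.7, gamma=0.6)

w0, w_ext, w_int = fit_weights(mood, ext, intr, gamma=0.6)
print(f"baseline {w0:.2f}, extrinsic {w_ext:.2f}, intrinsic {w_int:.2f}")
print("mood tracks intrinsic rewards more" if w_int > w_ext else "mood tracks extrinsic rewards more")
```

With noise-free synthetic data, the fit recovers the weights used to generate it. Real mood ratings would be noisy, and `gamma` would usually be estimated as well.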