Two novel demonstrations bring the backbone of the quantum internet, quantum repeaters, a little closer.

Early Tuesday morning, large portions of the web sputtered out for about an hour. The downed sites shared no obvious theme or geography; the outages were global, and they hit everything from Reddit to Spotify to The New York Times. (And yes, also WIRED.) In fact, the only thing they have in common is Fastly, a content-delivery network (CDN) provider whose predawn hiccup reverberated across the internet.

You may not have heard of Fastly, but you likely interact with it in some fashion every time you go online. Along with Cloudflare and Akamai, it’s one of the biggest CDN providers in the world. And while Fastly has been vague about what specific glitch caused Tuesday’s worldwide disruptions, the incident offers a stark reminder of how fragile and interconnected internet infrastructure can be, especially when so much of it hinges on a handful of companies that operate largely outside of public awareness.

Hundreds of websites worldwide crashed this morning following a massive internet outage – with the UK government, Amazon and Spotify among those experiencing issues.

Millions of users across the globe reported problems trying to access web pages, with Netflix, Twitch and news websites including the BBC, Guardian, CNN and the New York Times hit by the problem.

Passengers desperately trying to fill out locator forms on GOV.UK to enter the UK from Portugal and elsewhere abroad were also affected by the outage.

Don’t worry, you haven’t stumbled onto that strange part of the internet again, but it is true that we never truly did sequence the entire human genome. What was completed in June 2000 was the so-called ‘first draft’, which constituted roughly 92% of the genome. The problem with the remaining 8% was that these were genomic ‘dead zones’, made up of vast regions of repeating patterns of nucleotide bases that made studying these regions effectively impossible with the technology available at the time.

However, recent breakthroughs in high-throughput nanopore sequencing technology have allowed these so-called dead zones to be sequenced. Analysing these zones revealed 80 different genes which had been missed during the initial draft of the human genome. Admittedly this is not many considering that the other 92% of the genome contains 19,889 genes, but it may turn out that these genes hold great significance, as there are still many biological pathways which we do not fully understand. It is likely that many of these genes will soon be linked with what are known as orphan enzymes, which are proteins created from an unidentified gene. That in turn opens the door to studying these enzymes more closely by controlling their expression.

So how does this discovery affect the field of regenerative medicine? The discovery of these hidden genes is potentially very significant for our general understanding of human biology, which in turn is important for our understanding of how we might go about fixing issues that arise. Possibly more important than the discovery of these hidden genes is the milestone this sequencing represents in our ability to study our genomes quickly and efficiently with an all-inclusive approach. The vast amount of data that will soon be produced via full genome analysis will go a long way towards understanding the role that genetics plays in keeping our bodies healthy, which in turn will allow us to replicate and improve upon natural regenerative and repair mechanisms. It might even allow us to come up with some novel approaches which have no basis in nature.

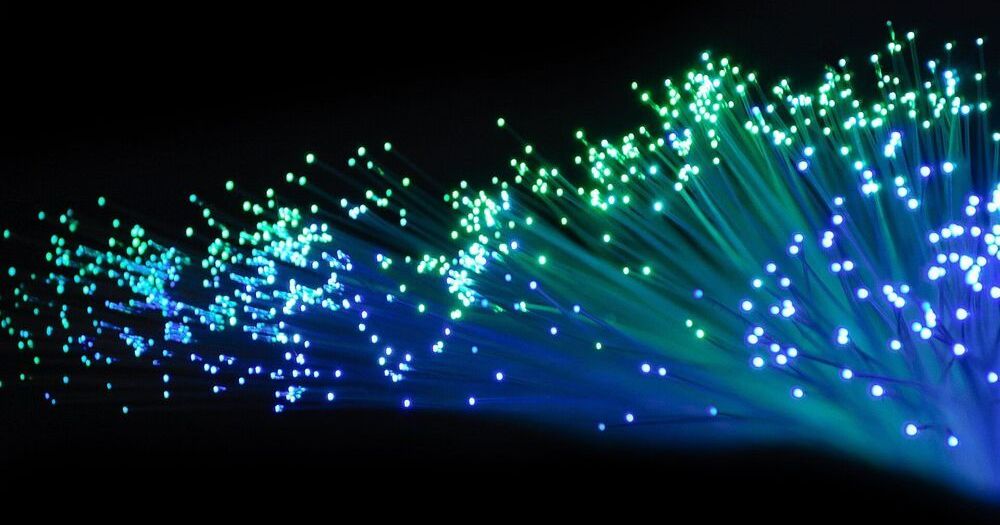

With the massive proliferation of data-heavy services, including high-resolution video streaming and conferencing, cloud services infrastructure growth in 2021 is expected to reach a 27% CAGR. Consequently, while 400 gigabit Ethernet (GbE) is currently enjoying widespread deployment, 800 GbE is poised to follow rapidly to address these bandwidth demands.

One approach to 800 GbE is to install eight 100 gigabit per second (Gbps) optical interfaces or lanes. As an alternative to reduce the hardware count, increase reliability, and lower cost, a team of researchers at Lumentum developed an optical solution that uses four 200 Gbps wavelength lanes to reach 800 GbE.
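The lane arithmetic behind the two approaches can be checked with a minimal sketch. The function name `lanes_needed` and the constant `TARGET_GBPS` are illustrative assumptions, not anything from Lumentum's design, and the calculation ignores real-world details such as encoding overhead.

```python
def lanes_needed(target_gbps: int, lane_gbps: int) -> int:
    """Return how many lanes of lane_gbps are needed to reach target_gbps.

    Hypothetical helper for illustration; uses ceiling division so a
    partial lane still counts as a whole one.
    """
    return -(-target_gbps // lane_gbps)


TARGET_GBPS = 800  # aggregate rate for 800 GbE, as described in the text

for lane_rate in (100, 200):
    count = lanes_needed(TARGET_GBPS, lane_rate)
    print(f"{lane_rate} Gbps lanes needed: {count}")
```

Doubling the per-lane rate halves the number of optical lanes, which is the hardware-count reduction the researchers are aiming for.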

Syunya Yamauchi, a principal optical engineer at Lumentum, will present the optimized design during a session at the Optical Fiber Communication Conference and Exhibition (OFC), being held virtually from 6 to 11 June 2021.

TAMPA, Fla. — Satellite operators have cleared a portion of C-band in a key step toward giving the spectrum to U.S. wireless companies in December.

Work has now started on installing filters on ground antennas across the United States, so wireless operators can use the lower 120 MHz of C-band for 5G without interfering with satellite broadcast customers.

Intelsat and SES, the satellite operators with the largest share of the 500 MHz C-band in the U.S., will get more than $2 billion from the Federal Communications Commission (FCC) if they can hand over the 120 MHz swath of frequencies by Dec. 5.

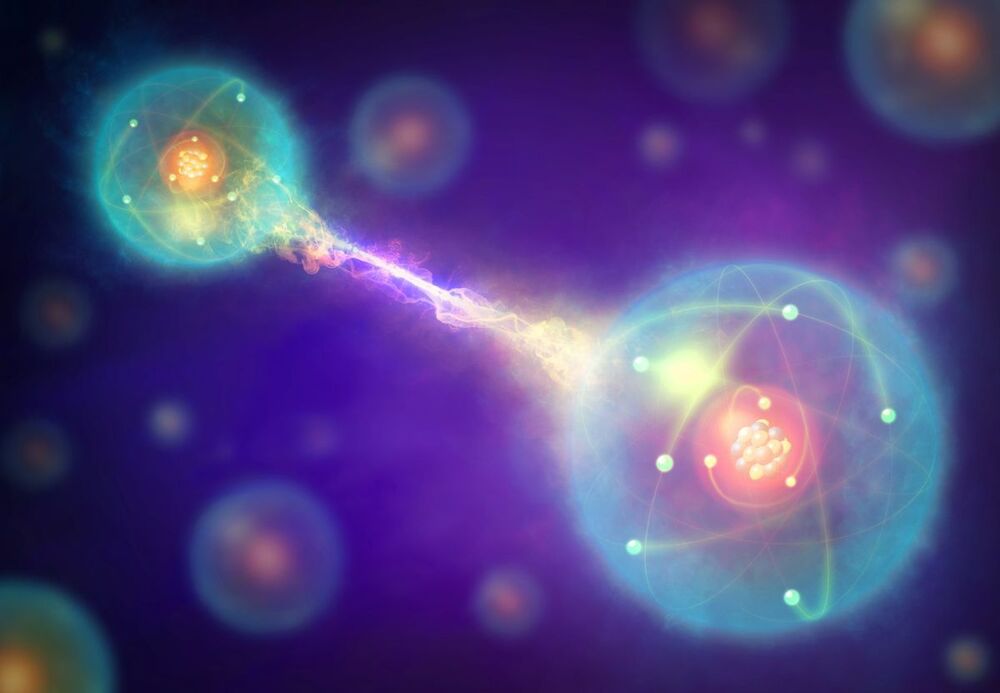

For that, they will need the quantum equivalent of optical repeaters, the components of today’s telecommunications networks that keep light signals strong across thousands of kilometers of optical fiber. Several teams have already demonstrated key elements of quantum repeaters and say they’re well on their way to building extended networks. “We’ve solved all the scientific problems,” says Mikhail Lukin, a physicist at Harvard University. “I’m extremely optimistic that on the scale of 5 to 10 years… we’ll have continental-scale network prototypes.”

Advance could precisely link telescopes, yield hypersecure banking and elections, and make quantum computing possible from anywhere.