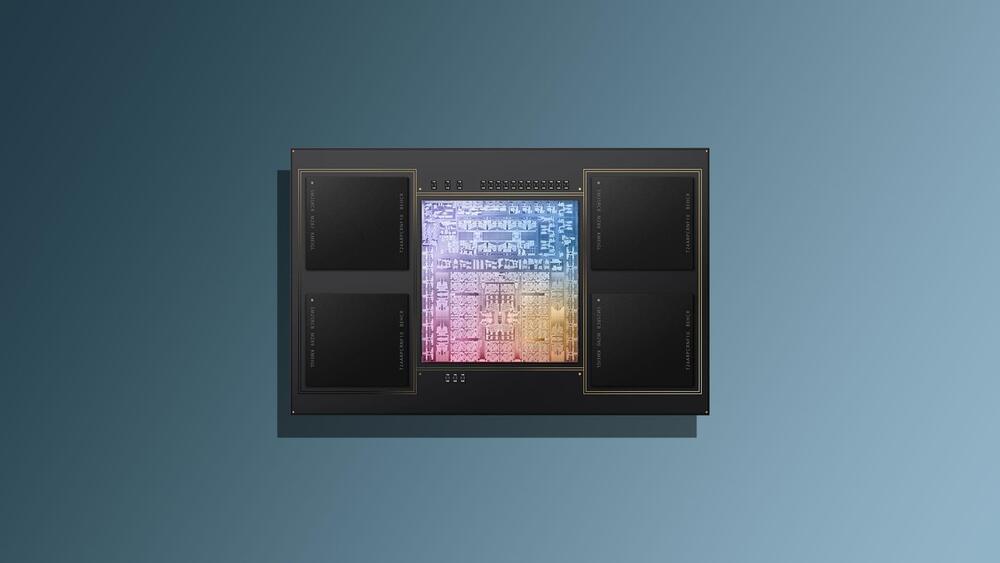

Legendary designer Jony Ive and OpenAI’s Sam Altman are enlisting an Apple Inc. veteran to work on a new artificial intelligence hardware project, aiming to create devices with the latest capabilities.

As part of the effort, outgoing Apple executive Tang Tan will join Ive’s design firm LoveFrom, which will shape the look and capabilities of the new products, according to people familiar with the matter. Altman, an executive who has become the face of modern AI, plans to provide the software underpinnings, said the people, who asked not to be identified because the endeavor isn’t public.

The work marks one of the most ambitious efforts undertaken by Ive since he left Apple in 2019 to create LoveFrom. The iconic designer is famous for the products he helped devise under Apple co-founder Steve Jobs, including the iMac, iPhone and iPad. His hope is to turn the AI device work into a new company, but development of the products remains at an early stage, according to the people. The efforts so far are focused on hiring talent and creating concepts.