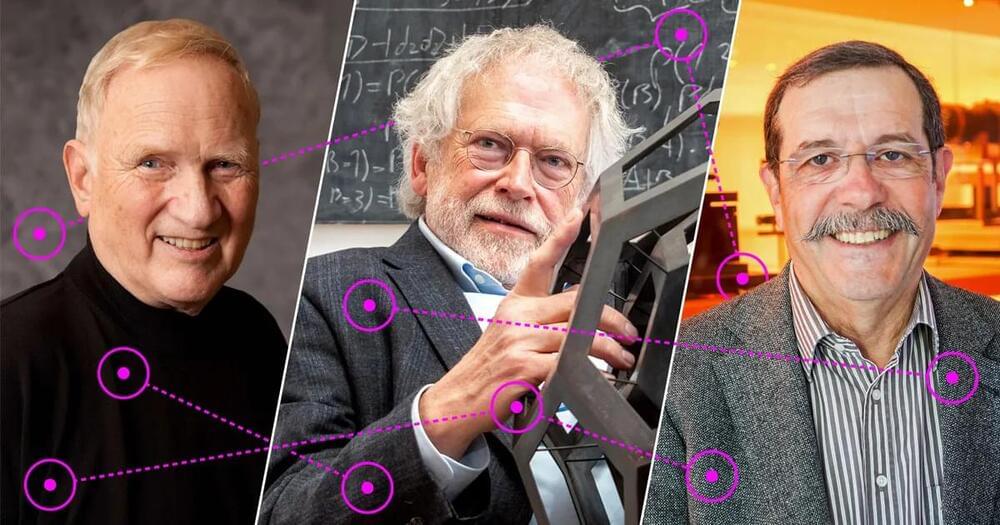

In recent years, a group of Hungarian researchers has made headlines with a bold claim. They say they’ve discovered a new particle, dubbed X17, that requires the existence of a fifth force of nature.

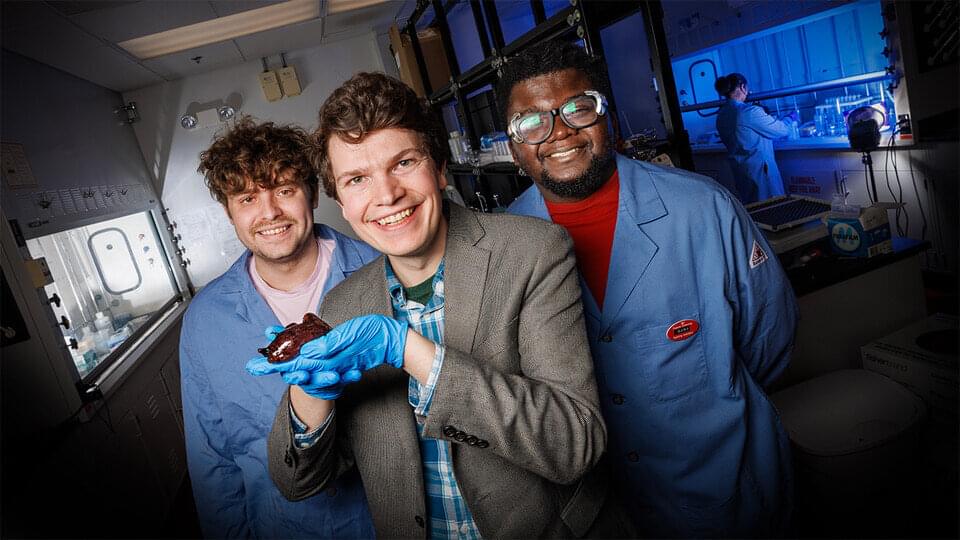

The researchers weren’t looking for the new particle, though. Instead, it popped up as an anomaly in their detector back in 2015 while they were searching for signs of dark matter. The oddity didn’t draw much attention at first. But eventually, a group of prominent particle physicists working at the University of California, Irvine, took a closer look and suggested that the Hungarians had stumbled onto a new type of particle — one that implies an entirely new force of nature.

Then, in late 2019, the Hungarian find hit the mainstream, including a story featured prominently on CNN, when the team released new results suggesting that its signal hadn’t gone away. The anomaly persisted even after the researchers changed the parameters of their experiment. They’ve now seen it pop up in the same way hundreds of times.