May 18, 2016

Space exploration will spur transhumanism and mitigate existential risk

Posted by Zoltan Istvan in categories: alien life, cyborgs, existential risks, geopolitics, policy, robotics/AI, solar power, space travel, sustainability, transhumanism

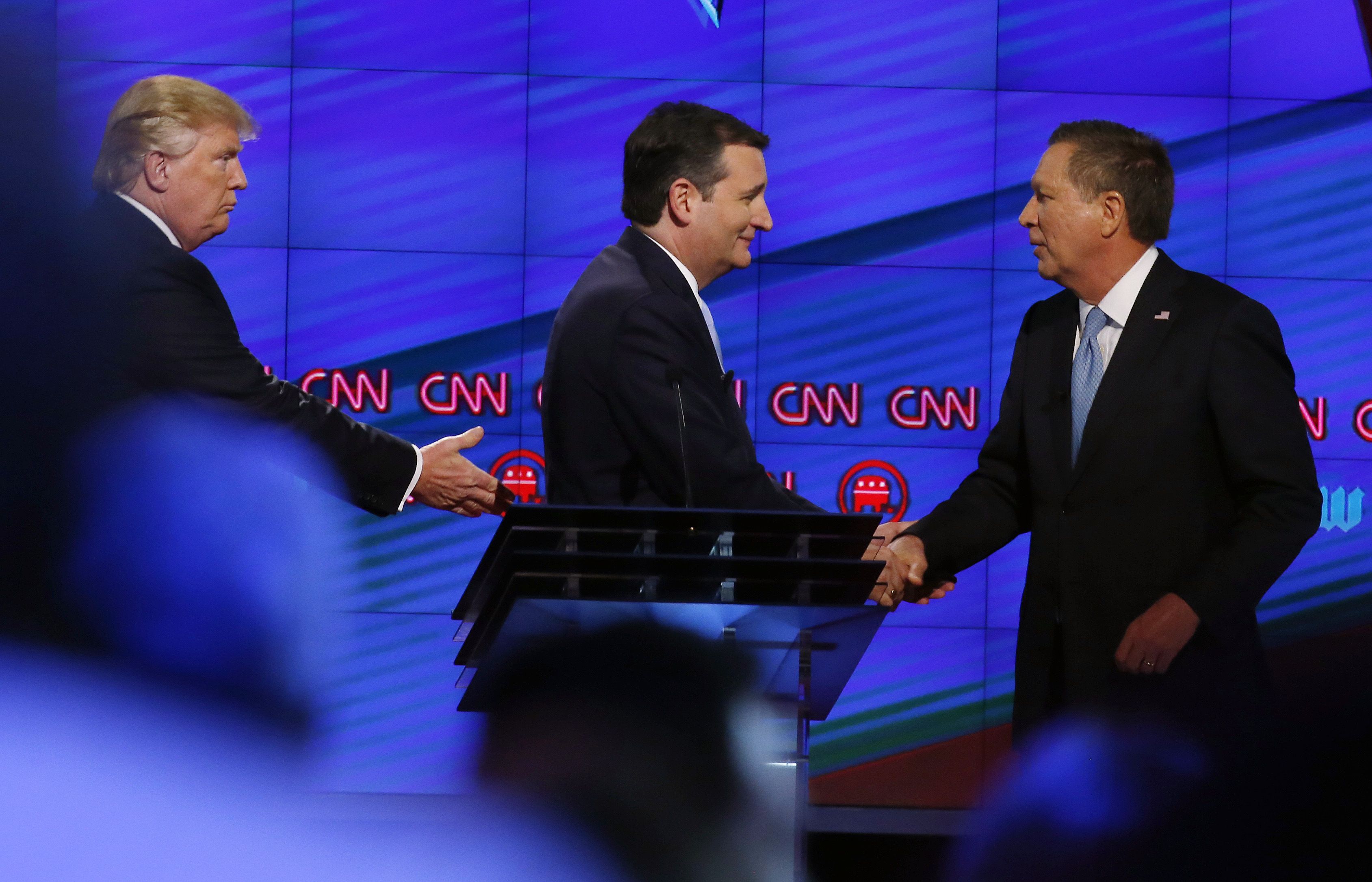

Friends have been asking me to write something on space exploration and my campaign's policy on it, so here it is, just published on TechCrunch:

When people think about rocket ships and space exploration, they often imagine traveling across the Milky Way, landing on mysterious planets and even meeting alien life forms.

In reality, humans’ drive to get off Planet Earth has led to tremendous technological advances in our mundane daily lives — ones we use right here at home on terra firma.

