Jun 5, 2024

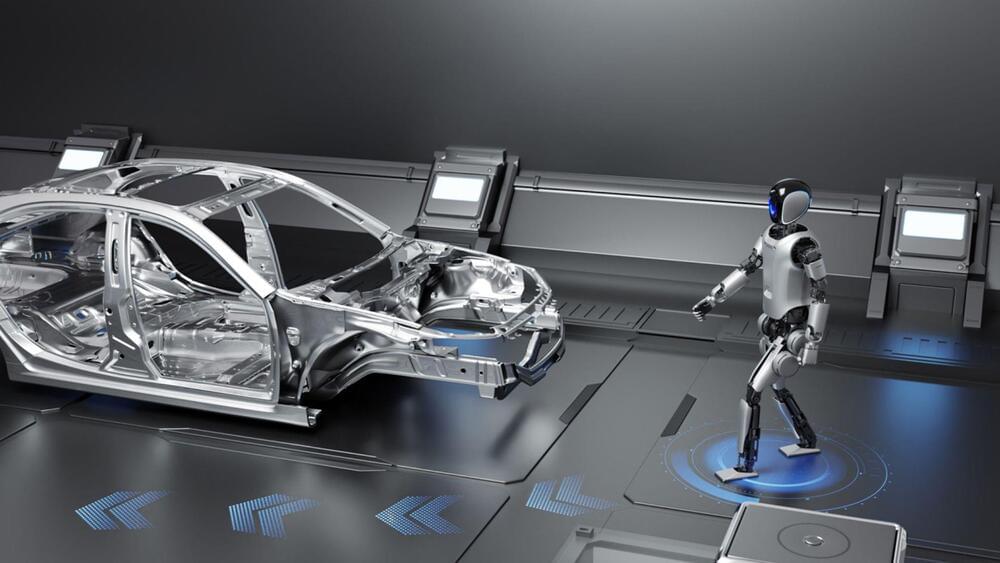

China’s humanoid robots to tackle tricky car chores at Dongfeng Motor

Posted by Gemechu Taye in categories: robotics/AI, transportation

Chinese state-owned automaker Dongfeng Motor is partnering with robotics firm UBTech to introduce the latter’s humanoid robot into its manufacturing process.

The industrial version of UBTech’s Walker S humanoid robot will be used on Dongfeng Motor’s production line to carry out various manufacturing tasks.

