Artificial intelligence (AI) once seemed like a fantastical construct of science fiction, enabling characters to deploy spacecraft to neighboring galaxies with a casual command. Humanoid AIs even served as companions to otherwise lonely characters. Now, in the very real 21st century, AI is becoming part of everyday life, with chatbots and similar tools helping with routine tasks such as answering questions, improving writing, and solving mathematical equations.

AI also has the potential to revolutionize scientific research, in ways that can feel like science fiction but are within reach.

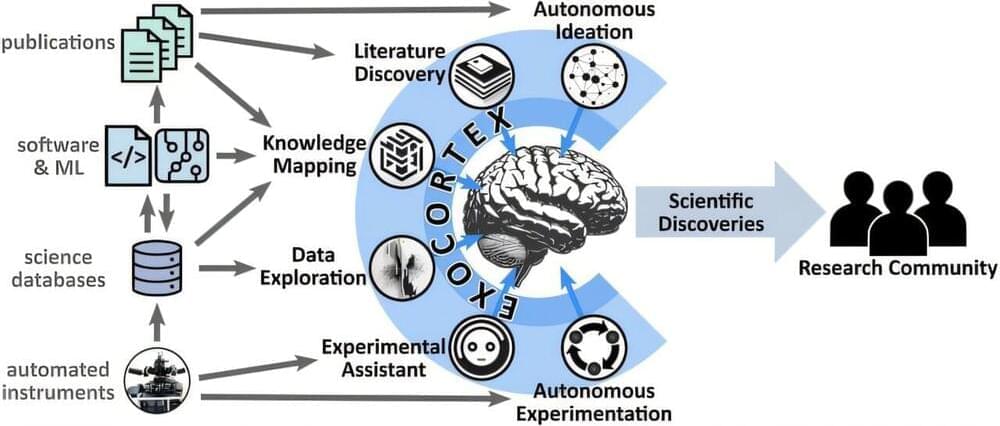

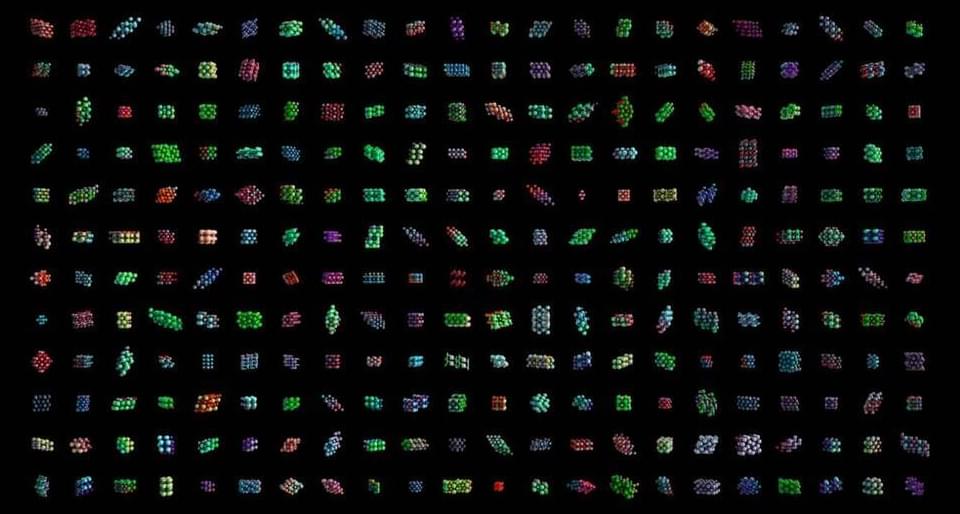

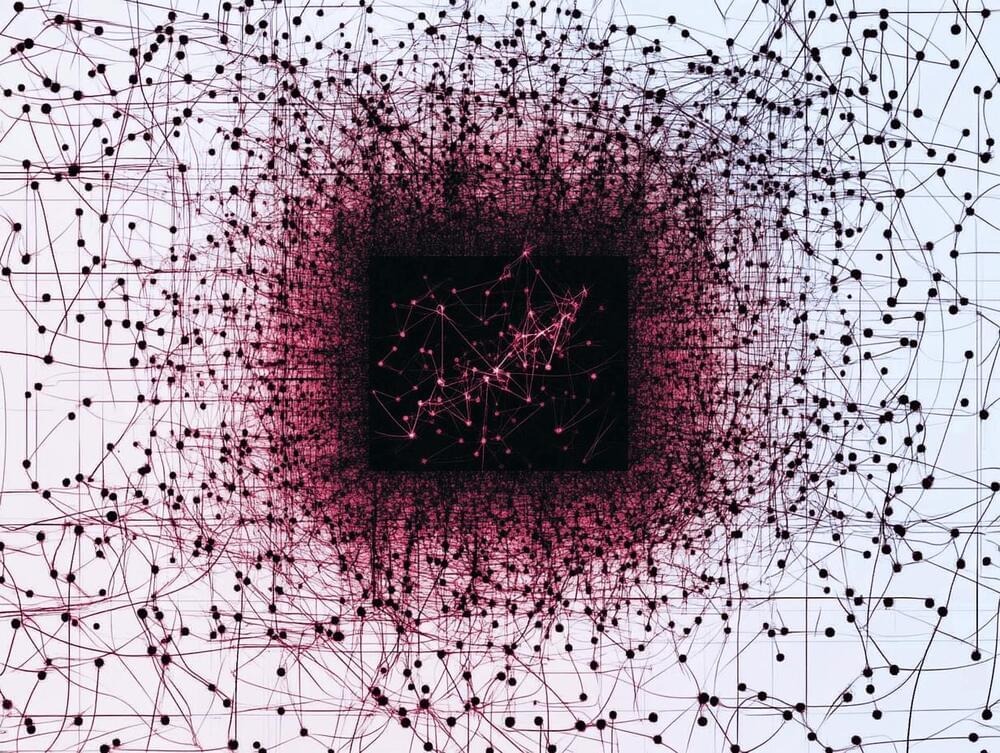

At the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory, scientists are already using AI to automate experiments and discover new materials. They’re even designing an AI scientific companion that communicates in ordinary language and helps conduct experiments. Kevin Yager, the Electronic Nanomaterials Group leader at the Center for Functional Nanomaterials (CFN), has articulated an overarching vision for the role of AI in scientific research.