Jun 24, 2010

Singularity Summit 2010 in San Francisco to Explore Intelligence Augmentation

Posted by Tom McCabe in category: robotics/AI

Continue reading “Singularity Summit 2010 in San Francisco to Explore Intelligence Augmentation” »


During the lunch break I am present virtually in the hall of the summit, as a face on a Skype account. I didn’t get a visa, so I stayed in Moscow. Ironically, my situation resembles what I am speaking about: the risk of a remote AI that is created by aliens millions of light years from Earth and sent via radio signals. The main difference is that they communicate one way, and I have duplex mode.

This is my video presentation on YouTube:

Risks of SETI, for Humanity+ 2010 summit

Tags: extinction, h+, SETI, summit

We can only see a short distance ahead, but we can see plenty there that needs to be done.

—Alan Turing

As a programmer, I look at events like the H+ Conference this weekend in a particular way. I see all of their problems as software: not just the code for AI and friendly AI, but also that for DNA manipulation. It seems that the biggest challenge for the futurist movement is to focus less on writing English and more on getting the programmers working together productively.

I start the AI chapter of my book with the following question: Imagine 1,000 people, broken up into groups of five, working on two hundred separate encyclopedias, versus that same number of people working on one encyclopedia. Which will be better? This sounds like a silly analogy when described in the context of an encyclopedia, but it is exactly what is going on in artificial intelligence (AI) research today.

Today, the research community has not adopted free software and shared codebases sufficiently. For example, I believe there are more than enough PhDs today working on computer vision, but there are 200+ different codebases plus countless proprietary ones. Simply put, there is no computer vision codebase with critical mass.

Continue reading “H+ Conference and the Singularity Faster” »

Friendly AI: What is it, and how can we foster it?

By Frank W. Sudia [1]

Originally written July 20, 2008

Edited and web published June 6, 2009

Copyright © 2008-09, All Rights Reserved.

Keywords: artificial intelligence, artificial intellect, friendly AI, human-robot ethics, science policy.

1. Introduction

Continue reading “Friendly AI: What is it, and how can we foster it?” »

I am a former Microsoft programmer who wrote a book (for a general audience) about the future of software called After the Software Wars. Eric Klien has invited me to post on this blog. Here are several more sections on AI topics. I hope you find these pages food for thought and I appreciate any feedback.

The future is open source everything.

—Linus Torvalds

That knowledge has become the resource, rather than a resource, is what makes our society post-capitalist.

I am a former Microsoft programmer who wrote a book (for a general audience) about the future of software called After the Software Wars. Eric Klien has invited me to post on this blog. Here is my section entitled “Software and the Singularity”. I hope you find this food for thought and I appreciate any feedback.

Futurists talk about the “Singularity”, the time when computational capacity will surpass the capacity of human intelligence. Ray Kurzweil predicts it will happen in 2045. Therefore, according to its proponents, the world will be amazing then. The flaw with such a date estimate, other than the fact that such estimates are always prone to extreme error, is that continuous learning is not yet a part of the foundation. Any AI code lives in the fringes of the software stack and is either proprietary or written by small teams of programmers.

I believe the benefits inherent in the singularity will happen as soon as our software becomes “smart” and we don’t need to wait for any further Moore’s law progress for that to happen. Computers today can do billions of operations per second, like add 123,456,789 and 987,654,321. If you could do that calculation in your head in one second, it would take you 30 years to do the billion that your computer can do in that second.
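The “30 years” figure can be checked with a few lines of arithmetic. This is only a sketch: the function name `years_for` and the choice of a 365.25-day year are my assumptions, not anything from the book.

```python
# Rough check of the "billion additions at one per second" comparison.
# Assumption: a year is 365.25 days (a Julian year).
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def years_for(operations: int, seconds_per_operation: float = 1.0) -> float:
    """Return how many years it takes to do `operations` steps by hand."""
    return operations * seconds_per_operation / SECONDS_PER_YEAR


years = years_for(1_000_000_000)
print(f"{years:.1f} years")  # a little over 30 years
```

At one addition per second, a billion additions comes out slightly above three decades, so the figure in the text is a fair round number.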

Even if you don’t think computers have the necessary hardware horsepower today, understand that in many scenarios, the size of the input is the primary factor driving the processing power required to do the analysis. In image recognition, for example, the amount of work required to interpret an image is mostly a function of the size of the image. Each step in the image recognition pipeline, like the corresponding processes that take place in our brain, dramatically reduces the amount of data from the previous step. At the beginning of the analysis might be a one million pixel image, requiring 3 million bytes of memory. At the end of the analysis is the conclusion that you are looking at your house, a concept that requires only tens of bytes to represent. The first step, working on the raw image, requires the most processing power, so it is the image resolution (and frame rate) that set the requirements, and those values are trivial to change. No one has shown robust vision recognition software running at any speed, on any sized image!
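The numbers in this argument are easy to reproduce. The sketch below assumes 3 bytes per pixel (one byte each for red, green, and blue), which is how a one million pixel image comes to 3 million bytes; the helper `raw_image_bytes` and the label text are hypothetical, chosen only for illustration.

```python
# Sketch of the data reduction described above.
# Assumption: 3 bytes per pixel (8-bit red, green, and blue channels).


def raw_image_bytes(width: int, height: int, bytes_per_pixel: int = 3) -> int:
    """Memory needed to hold an uncompressed image."""
    return width * height * bytes_per_pixel


full = raw_image_bytes(1000, 1000)  # one million pixels
half = raw_image_bytes(500, 500)    # same scene at half the resolution per side

# Hypothetical end result of the pipeline: a short label for the concept.
label = "my house".encode("utf-8")
reduction = full / len(label)

print(full, half, len(label), reduction)
```

Halving the resolution on each side cuts the raw input to a quarter, which is why resolution and frame rate, rather than the final concept, set the processing requirements.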

Tag: singularity

With our growing resources, the Lifeboat Foundation has teamed with the Singularity Hub as Media Sponsors for the 2010 Humanity+ Summit. If you have suggestions on future events that we should sponsor, please contact [email protected].

The summer 2010 “Humanity+ @ Harvard — The Rise Of The Citizen Scientist” conference is being held, after the inaugural conference in Los Angeles in December 2009, on the East Coast, at Harvard University’s prestigious Science Hall on June 12–13. Futurist, inventor, and author of the NYT bestselling book “The Singularity Is Near”, Ray Kurzweil will be the keynote speaker of the conference.


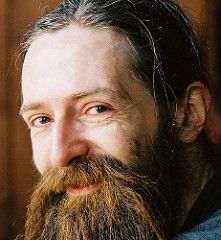

Also speaking at the H+ Summit @ Harvard is Aubrey de Grey, a biomedical gerontologist based in Cambridge, UK, and the Chief Science Officer of SENS Foundation, a California-based charity dedicated to combating the aging process. His talk, “Hype and anti-hype in academic biogerontology research: a call to action”, will analyze the interplay of over-pessimistic and over-optimistic positions with regard to research and development of cures, and propose solutions to alleviate the negative effects of both.


Tags: biotech, conference, culture, health, humanity, research, singularity, sustainability

AI is our best hope for long term survival. If we fail to create it, it will be for some reason. Here I suggest a complete list of possible causes of failure, though I do not believe in them. (I was inspired by V. Vinge’s article “What if singularity does not happen?”)

I think most of these points are wrong and AI will finally be created.

Technical reasons:

1) Moore’s Law will stop for physical reasons before sufficiently powerful and inexpensive hardware for artificial intelligence has been built.

2) Silicon processors are less efficient than neurons for creating artificial intelligence.

3) The AI problem cannot be parallelized algorithmically, and as a result AI will be extremely slow.

Philosophy:

4) Human beings use some method of processing information that is essentially inaccessible to algorithmic computers. This is what Penrose believes. (But we could still use this method by means of bioengineering techniques.) In general, a final admission that creating artificial intelligence is impossible would be tantamount to recognizing the existence of the soul.

5) A system cannot create a system more complex than itself, so people cannot create artificial intelligence, since all the proposed solutions are too simple. That is, AI is possible in principle, but people are too stupid to build it. In fact, one reason for past failures in creating artificial intelligence is that people underestimated the complexity of the problem.

6) AI is impossible, because any sufficiently complex system reveals the meaninglessness of existence and stops.

7) All possible ways to optimize are exhausted. AI does not have any fundamental advantage over the human-machine interface and has a limited scope of use.

8) A human being in a body has the maximum level of common sense, and any disembodied AIs are either ineffective or are models of people.

9) AI is created, but there are no problems that it could and should address. All problems have either been solved by conventional methods or proven uncomputable.

10) AI is created, but it is not capable of recursive self-optimization, since this would require some radically new ideas, and none have appeared. As a result, AI exists only as a curiosity or in limited specific applications, such as automatic drivers.

11) The idea of artificial intelligence is flawed, because it has no precise definition, or is even an oxymoron, like “artificial natural.” As a result, people develop systems for specific goals or create models of humans, but not universal artificial intelligence.

12) There is an upper limit on the complexity of systems, beyond which they become chaotic and unstable, and it only slightly exceeds the intellect of the most intelligent people. AI is slowly approaching this complexity threshold.

13) The bearer of intelligence is qualia. At our level of intelligence there must be many events that are indescribable and unknowable, but a superintellect should understand them by definition; otherwise it is not a superintellect but simply a fast intellect.

A detailed, functional artificial human brain can be built within the next 10 years, a leading scientist has claimed.

It will probably come as a surprise to those who are not well acquainted with the life and work of Alan Turing that in addition to his renowned pioneering work in computer science and mathematics, he also helped to lay the groundwork in the field of mathematical biology (1). Why would a renowned mathematician and computer scientist find himself drawn to the biosciences?

Interestingly, it appears that Turing’s fascination with this sub-discipline of biology most probably stemmed from the same source as the one that inspired his better known research: at that time all of these fields of knowledge were in a state of flux and development, and all posed challenging fundamental questions. Furthermore, in each of the three disciplines that engaged his interest, the matters to which he applied his uniquely creative vision were directly connected to central questions underlying these disciplines, and indeed to deeper and broader philosophical questions into the nature of humanity, intelligence and the role played by evolution in shaping who we are and how we shape our world.

Central to Turing’s biological work was his interest in mechanisms that shape the development of form and pattern in autonomous biological systems, and which underlie the patterns we see in nature (2), from animal coat markings to leaf arrangement patterns on plant stems (phyllotaxis). This topic of research, which he named “morphogenesis,” (3) had not been previously studied with modeling tools. This was a knowledge gap that beckoned Turing; particularly as such methods of research came naturally to him.

In addition to the diverse reasons that attracted him to the field of pattern formation, a major ulterior motive for his research had to do with a contentious subject which, astonishingly, is still highly controversial in some countries to this day. In studying pattern formation he was seeking to help invalidate the “argument from design” (4) concept, which we know today as the hypothesis of “Intelligent Design.”

Continue reading “Alan Turing: Biology, Evolution and Artificial Intelligence” »