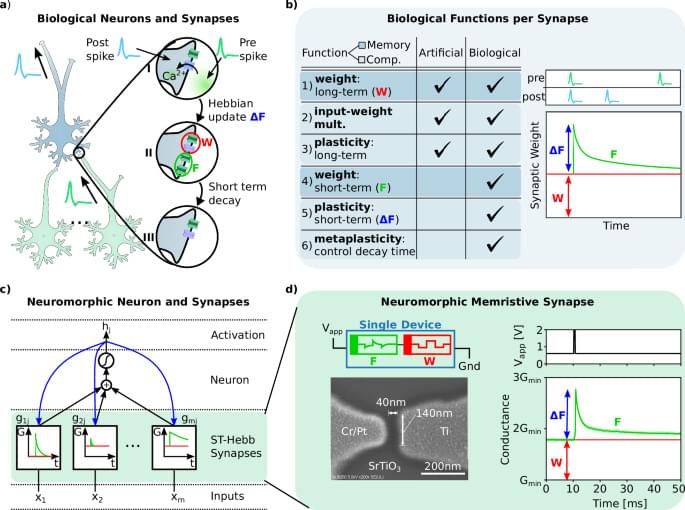

Biological neural networks demonstrate complex memory and plasticity functions. This work proposes a single memristor based on SrTiO₃ that emulates six synaptic functions for energy-efficient operation. A bio-inspired deep neural network is trained to play Atari Pong, a complex reinforcement learning task in a dynamic environment.

Category: robotics/AI

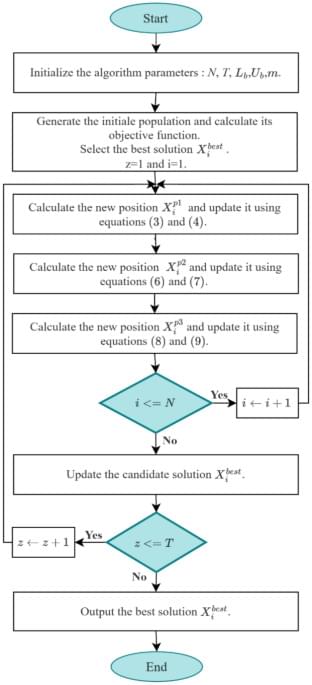

The Driving Training Based Optimization (DTBO) algorithm, proposed by Mohammad Dehghani in 2022, is one of the newer metaheuristic algorithms80. It is based on the process of learning to drive, which unfolds in three phases: selecting an instructor from among the learners, receiving instruction from the instructor on driving techniques, and practicing the newly learned techniques so that the learner improves their driving skills81,82. In this work, the DTBO algorithm is used because of its effectiveness, which was confirmed by a comparative study83 against other algorithms, including particle swarm optimization (PSO)84, the Gravitational Search Algorithm (GSA)85, teaching-learning-based optimization (TLBO), Gray Wolf Optimization (GWO)86, the Whale Optimization Algorithm (WOA)87 and the Reptile Search Algorithm (RSA)88. The comparative study used various kinds of benchmark functions, including constrained, nonlinear and non-convex functions.
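The three phases can be illustrated with a compact, standard-library-only Python sketch that minimizes a test function. It is a simplified reading of the phases, not a reproduction of the published update equations: the instructor count (10% of the population, shrinking over time), the patterning weight `p`, the practice radius `0.05` and the helper names `dtbo_sketch` and `sphere` are all assumptions chosen for illustration.

```python
import random

def sphere(x):
    """Benchmark function: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)

def dtbo_sketch(f, dim, lb, ub, pop_size=20, iters=200, seed=0):
    """Simplified DTBO-style minimizer (illustrative, not the published equations)."""
    rng = random.Random(seed)

    def clip(v):
        # Keep every coordinate inside the search bounds
        return min(max(v, lb), ub)

    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(pop_size)]
    fit = [f(x) for x in pop]

    def accept(i, cand):
        # Greedy replacement: a learner only adopts a candidate that lowers its cost
        fc = f(cand)
        if fc < fit[i]:
            pop[i], fit[i] = cand, fc

    for t in range(1, iters + 1):
        shrink = 1 - t / iters  # decays from about 1 to 0 over the run
        # Phase 1: the best members act as driving instructors; each learner picks one
        order = sorted(range(pop_size), key=lambda k: fit[k])
        n_instr = max(1, int(0.1 * pop_size * shrink))
        instructors = [(pop[k][:], fit[k]) for k in order[:n_instr]]
        for i in range(pop_size):
            di, f_di = instructors[rng.randrange(n_instr)]
            step = rng.choice((1, 2))
            if f_di < fit[i]:
                # Better instructor: learn from it (step in {1, 2} scales the learner's own position)
                cand = [clip(x + rng.random() * (d - step * x)) for x, d in zip(pop[i], di)]
            else:
                # Instructor is not better: step away from it
                cand = [clip(x + rng.random() * (x - d)) for x, d in zip(pop[i], di)]
            accept(i, cand)
        # Phase 2: the learner patterns its skills on the current best member
        best = pop[min(range(pop_size), key=lambda k: fit[k])][:]
        p = 0.01 + 0.9 * shrink
        for i in range(pop_size):
            accept(i, [clip(p * x + (1 - p) * b) for x, b in zip(pop[i], best)])
        # Phase 3: personal practice, a small relative perturbation that shrinks over time
        for i in range(pop_size):
            accept(i, [clip(x + (1 - 2 * rng.random()) * 0.05 * shrink * x) for x in pop[i]])

    b = min(range(pop_size), key=lambda k: fit[k])
    return pop[b], fit[b]
```

For example, `dtbo_sketch(sphere, dim=5, lb=-10.0, ub=10.0)` returns the best point found and its cost. Greedy acceptance in every phase means a learner's cost never increases. The sphere function has its optimum at the origin, so it is only a smoke test: several of these update rules pull candidates toward zero and look deceptively good on it.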

Lyapunov-based Model Predictive Control (LMPC) is a control approach that integrates a Lyapunov function as a constraint in the MPC optimization problem89,90. This technique characterizes the closed-loop stability region, which makes it possible to define the operating conditions under which the system remains stable91,92. Since its introduction, LMPC has been used extensively to control various nonlinear systems, such as robotic systems93, electrical systems94, chemical processes95 and wind power generation systems90. In contrast, regular MPC and nonlinear MPC (NMPC) have no explicit stability constraints: despite their greater flexibility, they cannot combine stability guarantees with interpretability.
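As an illustration, one common discrete-time way of writing an LMPC problem is shown below. The notation is assumed rather than taken from the cited works: $x_i$ is the predicted state, $f$ the prediction model, $Q$ and $R$ weighting matrices, $N$ the horizon, $u_{-1}$ the input applied at the previous sample, $\mathcal{U}$ the admissible input set, $V$ a Lyapunov function and $\rho \in (0,1)$ a contraction factor. Some LMPC formulations instead bound $\dot V$ by its value under an auxiliary stabilizing controller.

$$
\begin{aligned}
\min_{u_0,\dots,u_{N-1}} \quad & \sum_{i=0}^{N-1} \Big( \lVert x_{i+1} - x^{\mathrm{ref}} \rVert_Q^2 + \lVert u_i - u_{i-1} \rVert_R^2 \Big) \\
\text{s.t.} \quad & x_{i+1} = f(x_i, u_i), \qquad x_0 = x(k), \\
& u_i \in \mathcal{U}, \qquad i = 0,\dots,N-1, \\
& V(x_1) \le \rho\, V(x_0).
\end{aligned}
$$

The last line is the Lyapunov-based constraint: it forces the first predicted step to decrease $V$, which is what lets the closed-loop stability region be characterized.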

The proposed method, named Lyapunov-based neural network model predictive control using metaheuristic optimization approach (LNNMPC-MOA), adds a Lyapunov-based constraint to the optimization problem of neural network model predictive control (NNMPC) and solves that problem with the DTBO algorithm. The controller consists of two parts: the first computes predictions with a feedforward neural network model, and the second solves the constrained nonlinear optimization problem with the DTBO algorithm. The technique is intended to handle the nonlinear, non-convex optimization problem of conventional NMPC, to perform online optimization in reasonable time thanks to the metaheuristic's ease of implementation, and to guarantee stability through the Lyapunov function-based constraint. The controller's accuracy, response speed and robustness are assessed on the speed control of a three-phase induction motor, and its stability is ensured mathematically by the Lyapunov function-based constraint. The results are compared with those of NNMPC based on the DTBO algorithm (NNMPC-DTBO), NNMPC using the PSO algorithm (NNMPC-PSO), a fuzzy logic controller optimized by TLBO (FLC-TLBO) and a PID controller optimized with the PSO algorithm (PID-PSO)95.
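The structure described above (a predictor, a cost, a Lyapunov-based constraint and a derivative-free solver) can be sketched in Python. This is an illustration under loud assumptions: `toy_model` is a hypothetical first-order stand-in for the trained feedforward network, and the quadratic Lyapunov function on the speed error, the contraction factor `rho`, the penalty handling of the constraint and the weights are all chosen for illustration. Plain random search replaces DTBO to keep the block short.

```python
import random

def lyapunov(speed, ref):
    """Assumed quadratic Lyapunov candidate on the speed-tracking error."""
    return (speed - ref) ** 2

def mpc_cost(u_seq, speed0, ref, model, u_last=0.0, lam=0.01, rho=0.9, penalty=1e6):
    """Predicted tracking cost over the horizon, plus a large penalty when the
    first predicted step violates the constraint V(x1) <= rho * V(x0)."""
    speed, u_prev, cost = speed0, u_last, 0.0
    for k, u in enumerate(u_seq):
        speed = model(speed, u)  # one-step-ahead prediction
        cost += (ref - speed) ** 2 + lam * (u - u_prev) ** 2
        u_prev = u
        if k == 0 and lyapunov(speed, ref) > rho * lyapunov(speed0, ref):
            cost += penalty  # Lyapunov-based stability constraint violated
    return cost

def solve_random_search(speed0, ref, model, horizon=5, u_min=-10.0, u_max=10.0,
                        samples=3000, seed=1):
    """Stand-in for DTBO: sample control sequences and keep the cheapest one."""
    rng = random.Random(seed)
    best_u, best_j = None, float("inf")
    for _ in range(samples):
        cand = [rng.uniform(u_min, u_max) for _ in range(horizon)]
        j = mpc_cost(cand, speed0, ref, model)
        if j < best_j:
            best_u, best_j = cand, j
    return best_u, best_j

def toy_model(speed, u):
    """Hypothetical stand-in for the neural network: first-order speed response."""
    return 0.9 * speed + 0.1 * u
```

In a receding-horizon loop only the first input of the returned sequence is applied before the problem is solved again at the next sample; for instance, `solve_random_search(0.0, 5.0, toy_model)` yields a first move whose predicted step satisfies the Lyapunov contraction. Treating the constraint as a penalty is one common way to let an unconstrained metaheuristic handle it; it is not necessarily how the authors enforce it.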

Liquid water amounting to a 1-2 km-deep ocean may lie as much as 20 km below the surface, calculations suggest.

Sakana AI

Posted in humor, robotics/AI

The AI Scientist is designed to be compute-efficient. Each idea is implemented and developed into a full paper at a cost of approximately $15 per paper. While there are still occasional flaws in the papers produced by this first version (discussed below and in the report), this cost and the promise the system shows so far illustrate the potential of The AI Scientist to democratize research and significantly accelerate scientific progress.

We believe this work signifies the beginning of a new era in scientific discovery: bringing the transformative benefits of AI agents to the entire research process, including that of AI itself. The AI Scientist takes us closer to a world where endless affordable creativity and innovation can be unleashed on the world’s most challenging problems.

For decades following each major AI advance, it has been common for AI researchers to joke amongst themselves that “now all we need to do is figure out how to make the AI write the papers for us!” Our work demonstrates this idea has gone from a fantastical joke so unrealistic everyone thought it was funny to something that is currently possible.

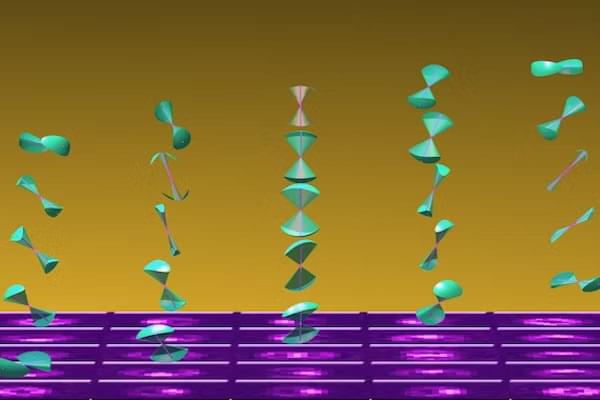

While new technologies, including those powered by artificial intelligence, provide innovative solutions to a steadily growing range of problems, these tools are only as good as the data they’re trained on. In the world of molecular biology, getting high-quality data from tiny biological systems while they’re in motion – a critical step for building next-gen tools – is something like trying to take a clear picture of a spinning propeller. Just as you need precise equipment and conditions to photograph the propeller clearly, researchers need advanced techniques and careful calculations to measure the movement of molecules accurately.

Matthew Lew, associate professor in the Preston M. Green Department of Electrical & Systems Engineering in the McKelvey School of Engineering at Washington University in St. Louis, builds new imaging technologies to unravel the intricate workings of life at the nanoscale. Though they’re incredibly tiny – 1,000 to 100,000 times smaller than a human hair – nanoscale biomolecules like proteins and DNA strands are fundamental to virtually all biological processes.

Scientists rely on ever-advancing microscopy methods to gain insight into how these systems work. Traditionally, these methods have relied on simplifying assumptions that overlook some complexities of molecular behavior, which can be wobbly and asymmetric. A new theoretical framework developed by Lew, however, is set to shake up how scientists measure and interpret wobbly molecular motion.

A blockchain entrepreneur, a cinematographer, a polar adventurer and a robotics researcher plan to fly around Earth’s poles aboard a SpaceX Crew Dragon capsule by the end of the year, becoming the first humans to observe the ice caps and extreme polar environments from orbit, SpaceX announced Monday.

The historic flight, which will launch from the Kennedy Space Center in Florida, will be commanded by Chun Wang, a wealthy Bitcoin pioneer who founded f2pool and stakefish, “which are among the largest Bitcoin mining pools and Ethereum staking providers,” the crew’s website says.

“Wang aims to use the mission to highlight the crew’s explorational spirit, bring a sense of wonder and curiosity to the larger public and highlight how technology can help push the boundaries of exploration of Earth and through the mission’s research,” SpaceX said on its web site.

A newly published study by Sheba Medical Center, Israel’s largest and internationally ranked hospital, shows that AI analysis of medical records as patients are admitted to the ER can accurately identify those at high risk of pulmonary embolism (PE).

A pulmonary embolism is a sudden blockage in an artery in the lung caused by a blood clot, most commonly a clot that has dislodged from the leg. It is normally diagnosed with a CT scan.

Using machine learning, the researchers trained an algorithm to detect a pulmonary embolism before a patient was hospitalized, based on existing medical records.

An automated computational approach to the optical lens design of imaging systems promises to provide optimal solutions without human intervention, slashing the time and cost usually required. The result could be mobile-phone cameras with better image quality or new functionality.