Mar 19, 2024

What Makes Black Holes Grow and New Stars Form? Machine Learning Helps Solve the Mystery

Posted by Natalie Chan in categories: cosmology, robotics/AI

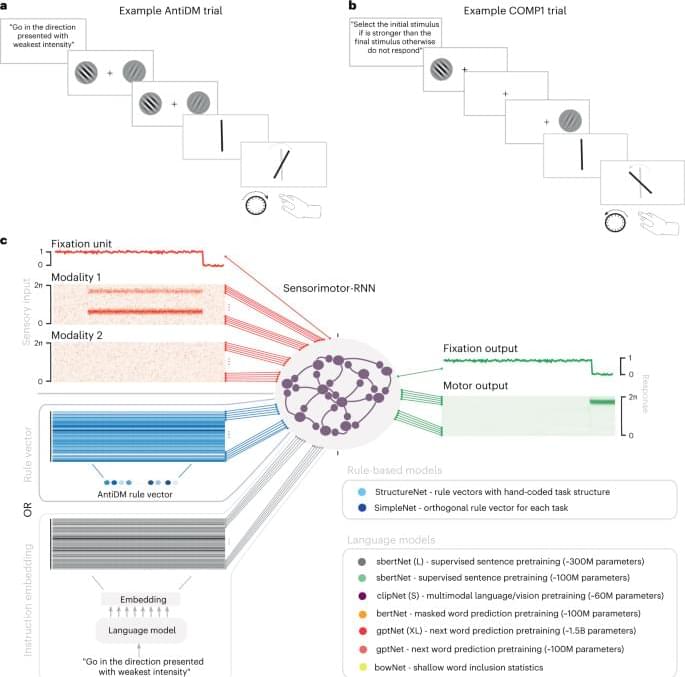

It takes more than a galaxy merger to make a black hole grow and new stars form: machine learning shows that cold gas is also needed to initiate rapid growth, new research finds.

When they are active, supermassive black holes play a crucial role in the way galaxies evolve. Until now, black hole growth was thought to be triggered by the violent collision of two galaxies followed by their merger. However, new research led by the University of Bath suggests that galaxy mergers alone are not enough to fuel a black hole: a reservoir of cold gas at the centre of the host galaxy is needed too.

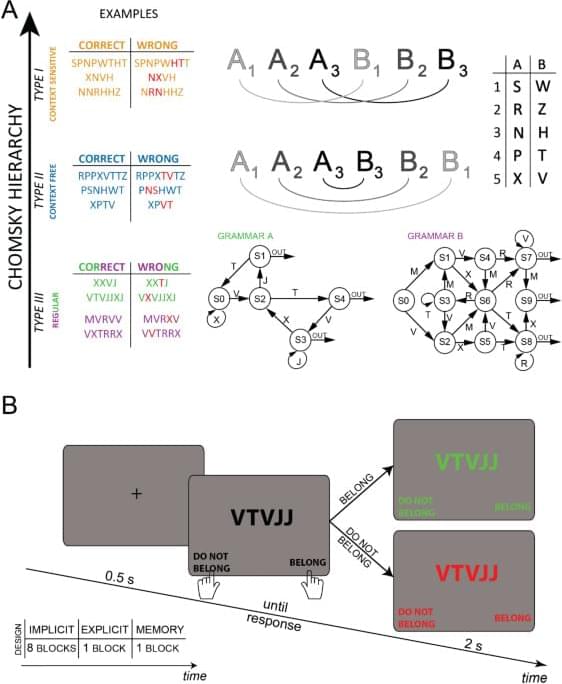

The new study, published this week in the journal Monthly Notices of the Royal Astronomical Society, is believed to be the first to use machine learning to classify galaxy mergers with the specific aim of exploring the relationship between galaxy mergers, supermassive black hole accretion and star formation. Until now, mergers were classified (often incorrectly) through human observation alone.