Mar 22, 2024

Top computer scientists say the future of artificial intelligence is similar to that of Star Trek

Posted by Cecile G. Tamura in categories: futurism, robotics/AI

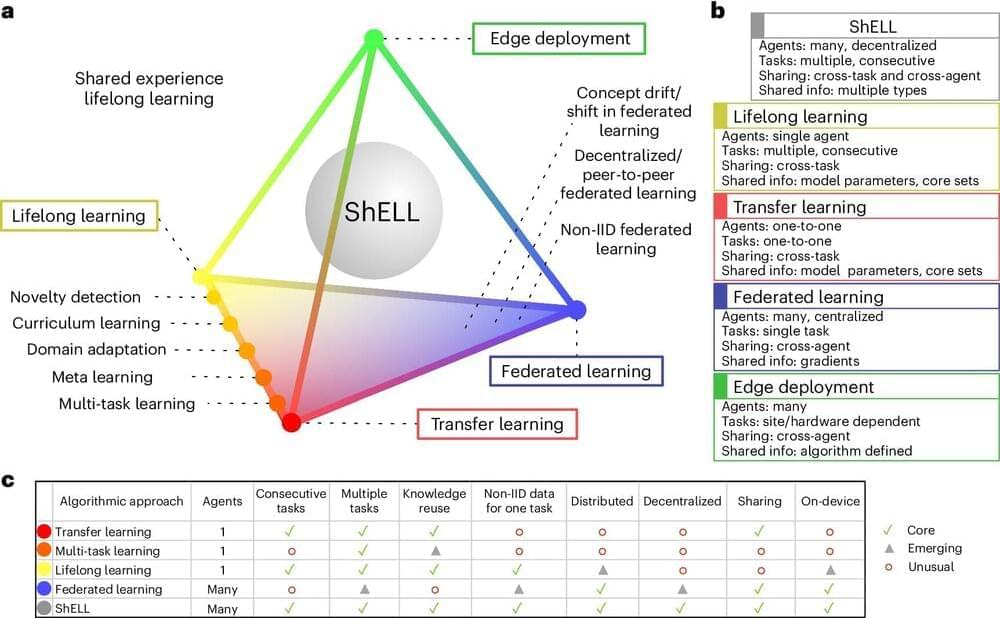

Leading computer scientists from around the world have shared their vision for the future of artificial intelligence, and it resembles the capabilities of the Borg, the cybernetic collective from Star Trek.