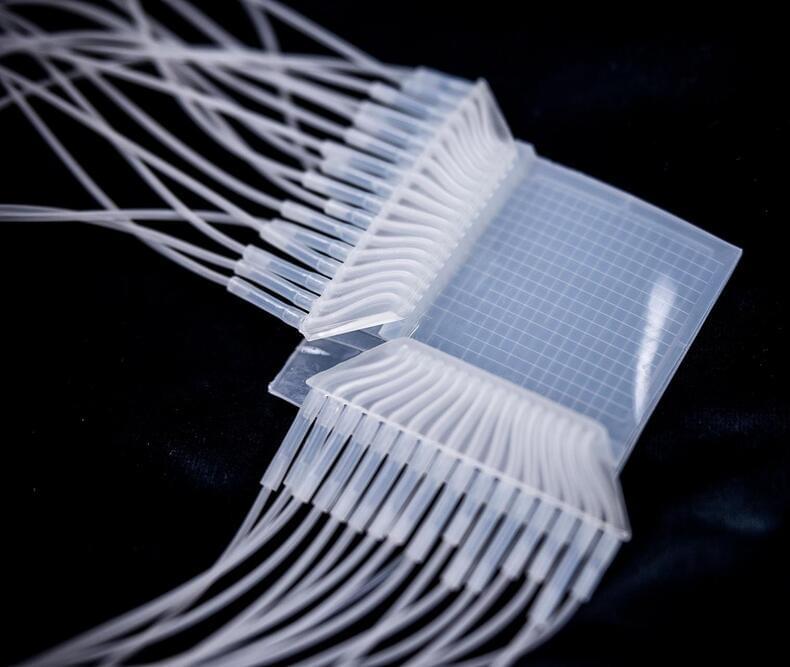

Researchers at Tampere University have created the world’s first soft touchpad capable of detecting the force, area, and location of contact without the need for electricity. This innovative device operates using pneumatic channels, making it suitable for environments like MRI machines and other settings where electronic devices are impractical. The technology could also be advantageous for applications in soft robotics and rehabilitation aids.

Researchers at Tampere University have developed the world’s first soft touchpad that can sense the force, area, and location of contact without electricity. Such sensing has traditionally required electronic sensors; the new touchpad instead uses pneumatic channels embedded in the device for detection.

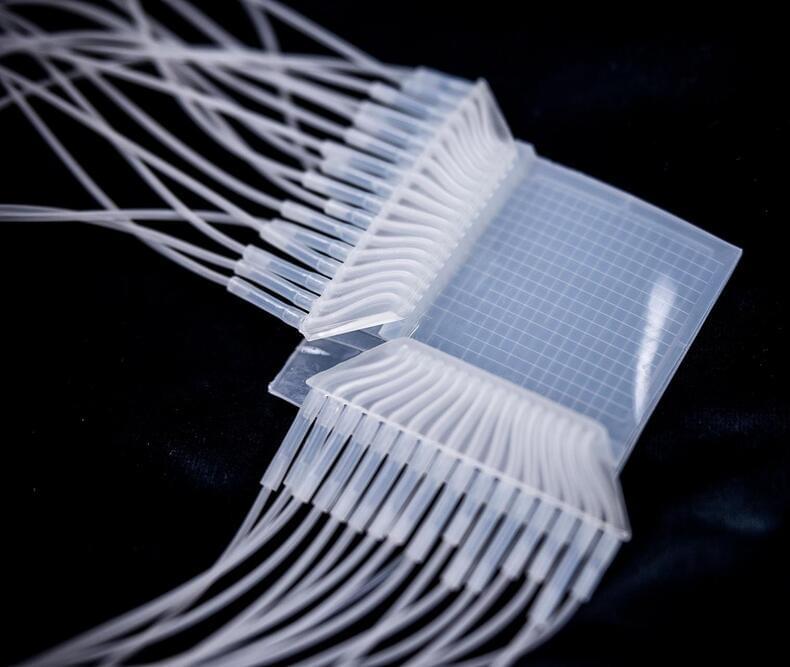

Made entirely of soft silicone, the device contains 32 channels that adapt to touch, each only a few hundred micrometers wide. In addition to detecting the force, area, and location of touch, the device is precise enough to recognize handwritten letters on its surface, and it can even distinguish multiple simultaneous touches.