Archive for the ‘robotics/AI’ category: Page 64

Oct 26, 2024

There’s a Humongous Problem With AI Models: They Need to Be Entirely Rebuilt Every Time They’re Updated

Posted by Zola Balazs Bekasi in category: robotics/AI

Each retraining may cost millions of dollars in computation.

New research shows that AI models need to be completely retrained to learn new concepts — which is an expensive problem for AI companies.

Oct 26, 2024

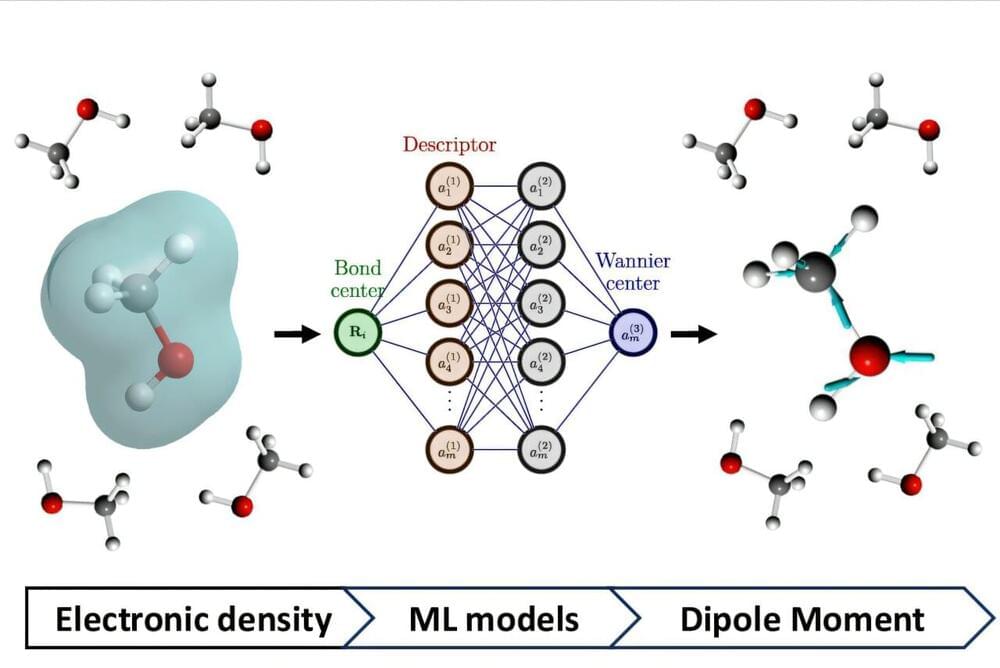

New machine learning model quickly and accurately predicts dielectric function

Posted by Saúl Morales Rodriguéz in categories: materials, robotics/AI

Researchers Tomohito Amano and Shinji Tsuneyuki of the University of Tokyo, with Tamio Yamazaki of CURIE (JSR-UTokyo Collaboration Hub), have developed a new machine learning model that predicts the dielectric function of materials rather than calculating it from first principles.

Oct 26, 2024

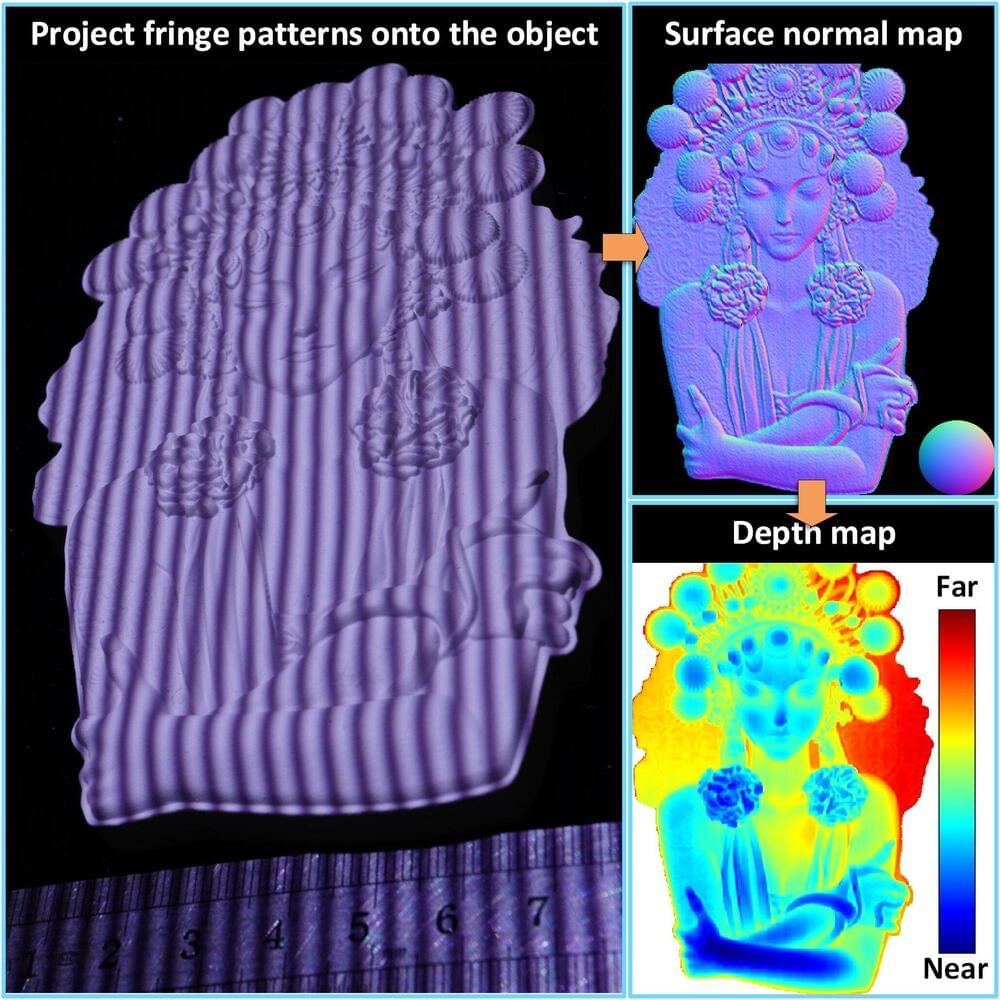

Fringe photometric stereo method improves speed and accuracy of 3D surface measurements

Posted by Saúl Morales Rodriguéz in categories: biotech/medical, robotics/AI

Researchers have developed a faster and more accurate method for acquiring and reconstructing high-quality 3D surface measurements. The approach could greatly improve the speed and accuracy of surface measurements used for industrial inspection, medical applications, robotic vision and more.

Oct 26, 2024

AI-Powered Insights Reveal the Universe’s Fundamental Settings

Posted by Saúl Morales Rodriguéz in categories: cosmology, robotics/AI

Using a novel AI-driven method to analyze galaxy distributions, researchers improved the precision with which critical cosmological parameters can be estimated.

This breakthrough allows for more refined studies of dark matter and dark energy, with implications for resolving the Hubble tension and other cosmic mysteries.


Oct 26, 2024

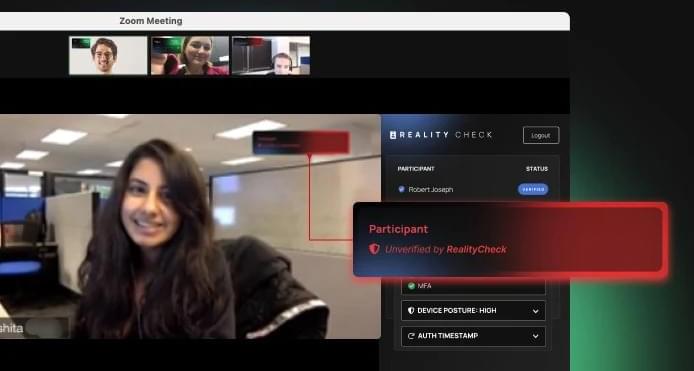

Eliminating AI Deepfake Threats: Is Your Identity Security AI-Proof?

Posted by Saúl Morales Rodriguéz in categories: robotics/AI, security

Combat AI impersonation fraud with Beyond Identity’s RealityCheck—your shield against deepfake attacks.

Oct 26, 2024

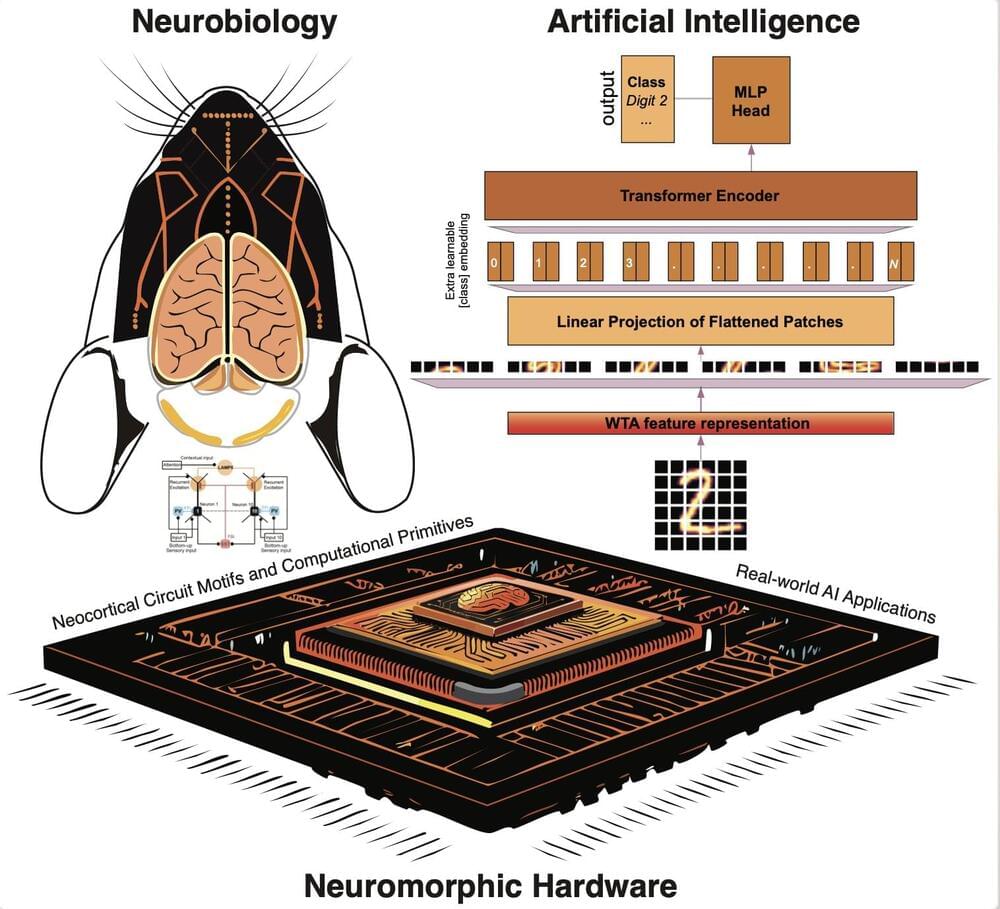

AI mimics neocortex computations with ‘winner-take-all’ approach

Posted by Dan Kummer in categories: biotech/medical, robotics/AI

Over the past decade or so, computer scientists have developed increasingly advanced computational techniques that can tackle real-world tasks with human-comparable accuracy. While many of these artificial intelligence (AI) models have achieved remarkable results, they often do not precisely replicate the computations performed by the human brain.

Researchers at Tibbling Technologies, Broad Institute at Harvard Medical School, The Australian National University and other institutes recently tried to use AI to mimic a specific type of computation performed by circuits in the neocortex, known as “winner-take-all” computations.

Their paper, published on the bioRxiv preprint server, reports the successful emulation of this computation and shows that adding it to transformer-based models could significantly improve their performance on image classification tasks.

Oct 26, 2024

Google plans to announce its next Gemini model soon

Posted by Dan Kummer in category: robotics/AI

Oct 25, 2024

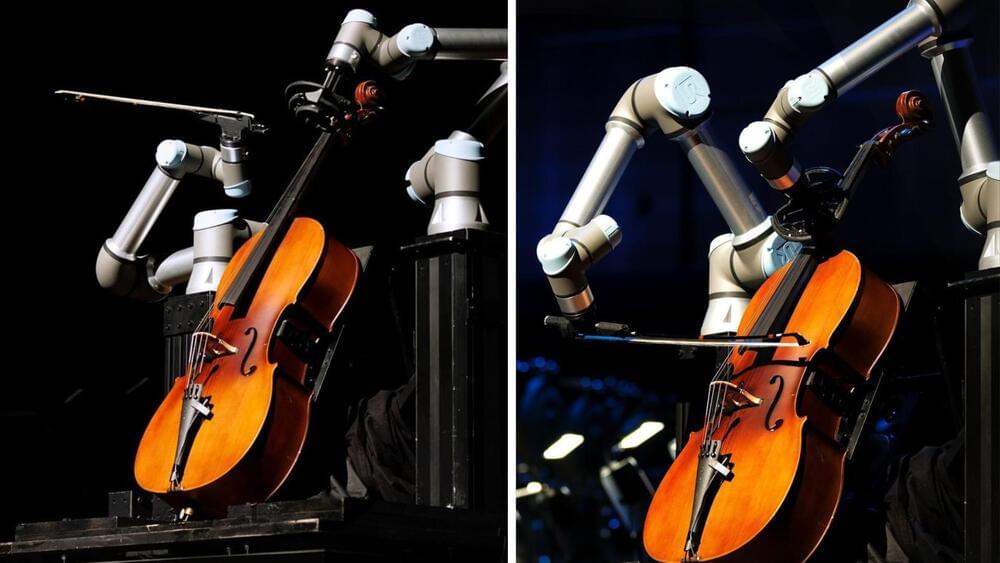

Robot plays cello delicately, makes history with Swedish orchestra

Posted by Shubham Ghosh Roy in categories: media & arts, robotics/AI

A robot played cello in a curated concert for the Malmö Symphony Orchestra in southern Sweden.

Robotics is driving innovation across many sectors, and a new robot has now entered the music arena. In a recent video, it is seen playing the cello.

The industrial robotic arms, fitted with 3D-printed parts, performed alongside members of the orchestra in Sweden.


Oct 25, 2024

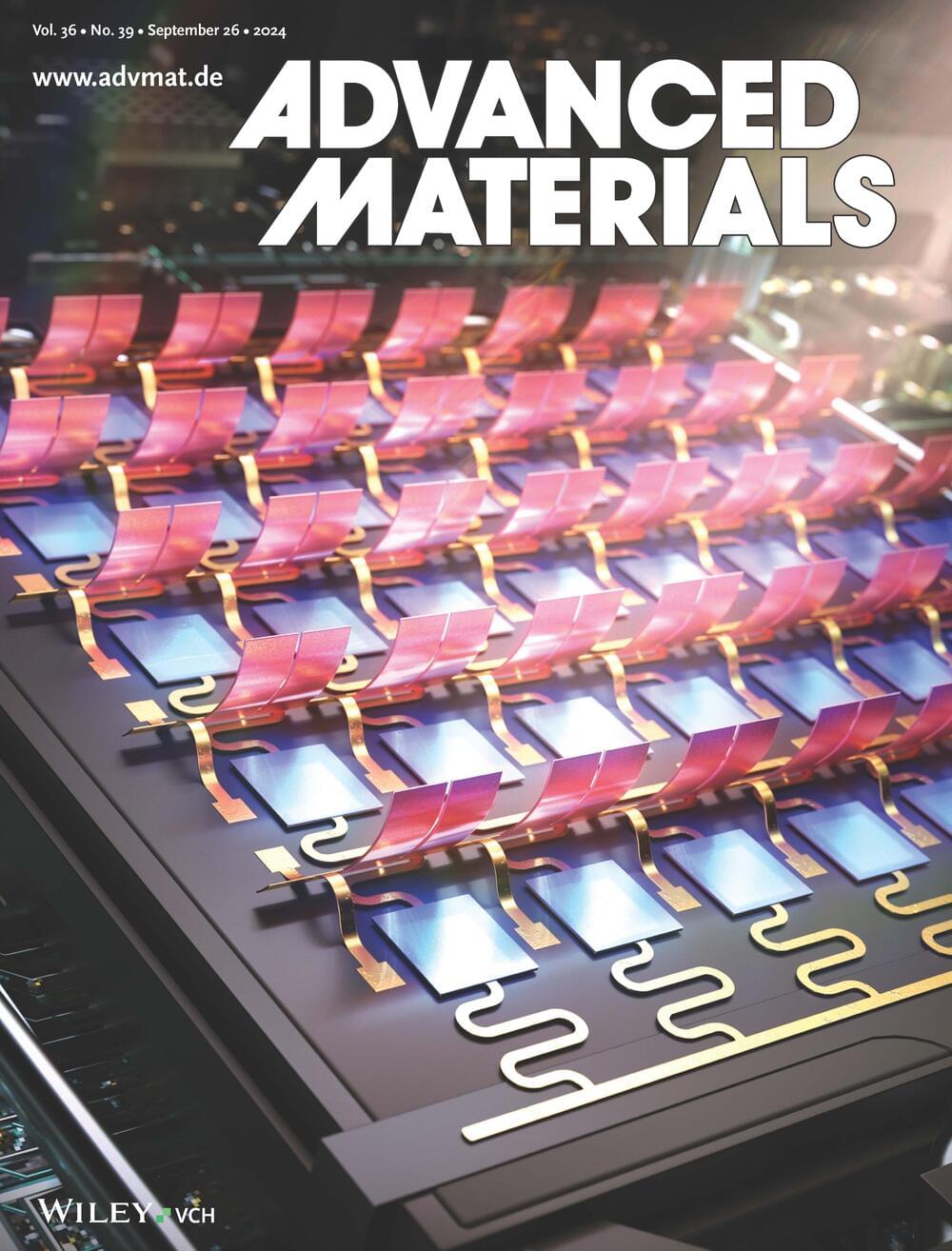

3D smart energy device integrates radiative cooling and solar absorption

Posted by Chima Wisdom in categories: robotics/AI, sustainability

A research team led by Professor Bonghoon Kim from DGIST’s Department of Robotics and Mechatronics Engineering has developed a “3D smart energy device” that can switch reversibly between heating and cooling. In recognition of its excellence and practicality, the work was selected as the cover article of the international journal Advanced Materials.

The team collaborated with Professor Bongjae Lee from KAIST’s Department of Mechanical Engineering and Professor Heon Lee from Korea University’s Department of Materials Science and Engineering.

Heating and cooling account for approximately 50% of global energy consumption, contributing significantly to environmental problems such as global warming and air pollution. In response, solar absorption and radiative cooling devices, which harness the sun and outdoor air as heat and cold sources, are gaining attention as eco-friendly and sustainable solutions.