Give people a barrier, and at some point they are bound to smash through. Chuck Yeager broke the sound barrier in 1947. Yuri Gagarin burst into orbit for the first manned spaceflight in 1961. The Human Genome Project finished sequencing the human genome in 2003. And we can add one more barrier to humanity’s trophy case: the exascale barrier.

The exascale barrier is the challenge of building a computer that operates at exascale, a level long considered the benchmark for high-performance computing. To reach that level, a computer needs to perform a quintillion calculations per second. You can think of a quintillion as a million trillion, a billion billion, or a million million million. Whichever you choose, it’s an incomprehensibly large number of calculations.
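For readers who like to check the arithmetic, here is a minimal Python sketch of those equivalences. The constant names are our own, and the "one billion calculations per second" machine is a hypothetical round figure chosen only for scale, not a measurement of any real device.

```python
# Short-scale number names, as used in the text above.
MILLION = 10**6
BILLION = 10**9
TRILLION = 10**12
QUINTILLION = 10**18

# Three ways of spelling out a quintillion.
equivalents = {
    "a million trillion": MILLION * TRILLION,
    "a billion billion": BILLION * BILLION,
    "a million million million": MILLION * MILLION * MILLION,
}
for name, value in equivalents.items():
    print(f"{name}: {value == QUINTILLION}")

# Assumption for illustration only: a hypothetical machine that performs
# one billion calculations per second. How long would it need to match
# one second of work at exascale?
HYPOTHETICAL_OPS_PER_SECOND = BILLION
SECONDS_PER_YEAR = 365.25 * 24 * 3600

seconds_needed = QUINTILLION / HYPOTHETICAL_OPS_PER_SECOND
years_needed = seconds_needed / SECONDS_PER_YEAR
print(f"years needed: {years_needed:.1f}")
```

Under that assumption, the slower machine would need a billion seconds, or roughly three decades, to do what an exascale computer does in one second.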

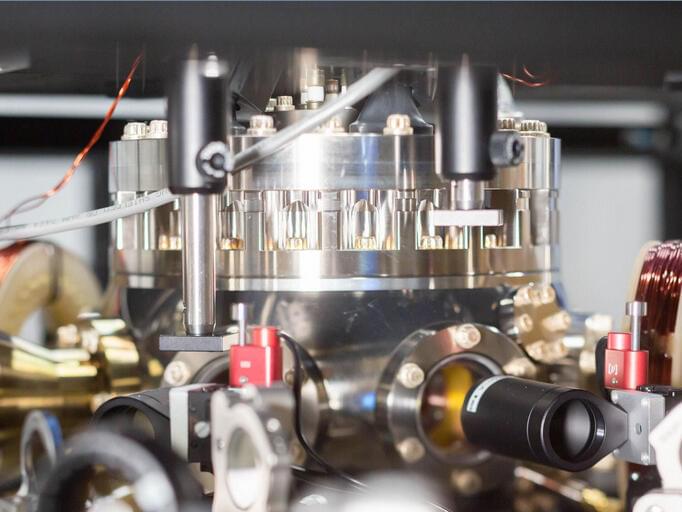

On May 27, 2022, Frontier, a supercomputer built for the Department of Energy’s Oak Ridge National Laboratory, managed the feat. It performed 1.1 quintillion calculations per second to become the fastest computer in the world.