Nov 29, 2018

Chaos Makes the Multiverse Unnecessary

Posted by Xavier Rosseel in categories: alien life, mathematics, supercomputing

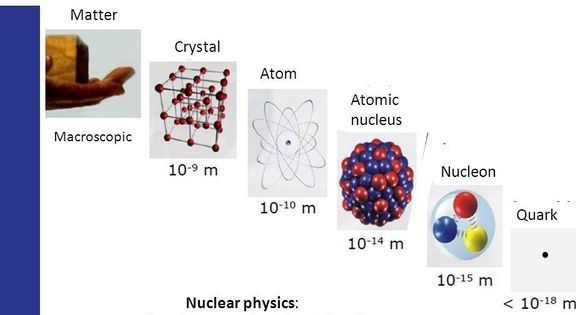

Scientists look around the universe and see amazing structure. There are objects and processes of fantastic complexity. Every action in our universe follows exact laws of nature that are perfectly expressed in mathematical language. These laws of nature appear fine-tuned to bring about life, and in particular, intelligent life. What exactly are these laws of nature, and how do we find them?

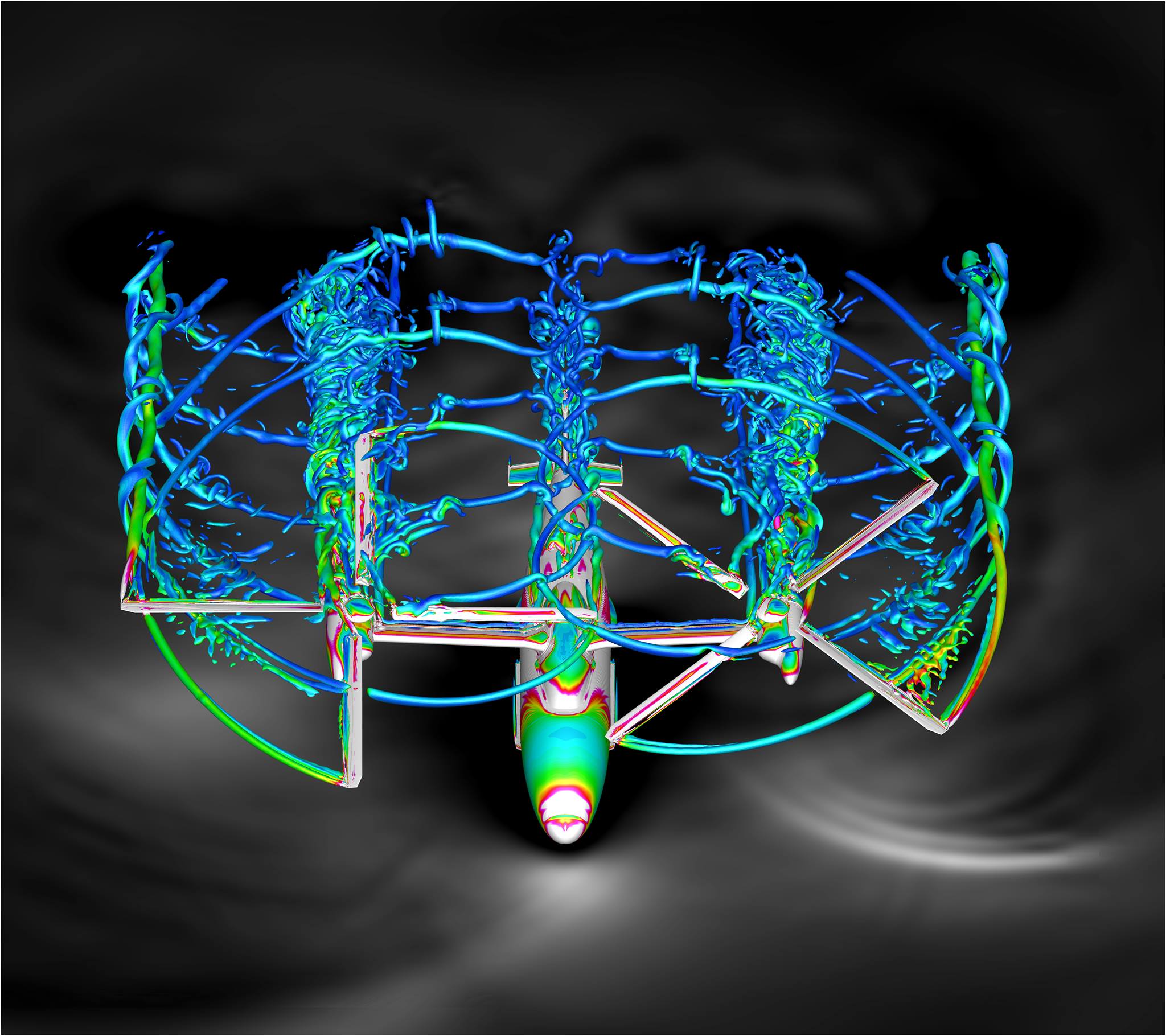

The universe is so structured and orderly that we compare it to the most complicated and exact contraptions of each age. In the 18th and 19th centuries, the universe was compared to a perfectly working clock or watch, and philosophers discussed the Watchmaker. In the 20th and 21st centuries, the most complicated object is the computer, so the universe is compared to a perfectly working supercomputer, and researchers ask how this computer got its programming.

How does one explain all this structure? Why do the laws seem so perfect for producing life, and why are they expressed in such exact mathematical language? Is the universe really as structured as it seems?