Aug 16, 2024

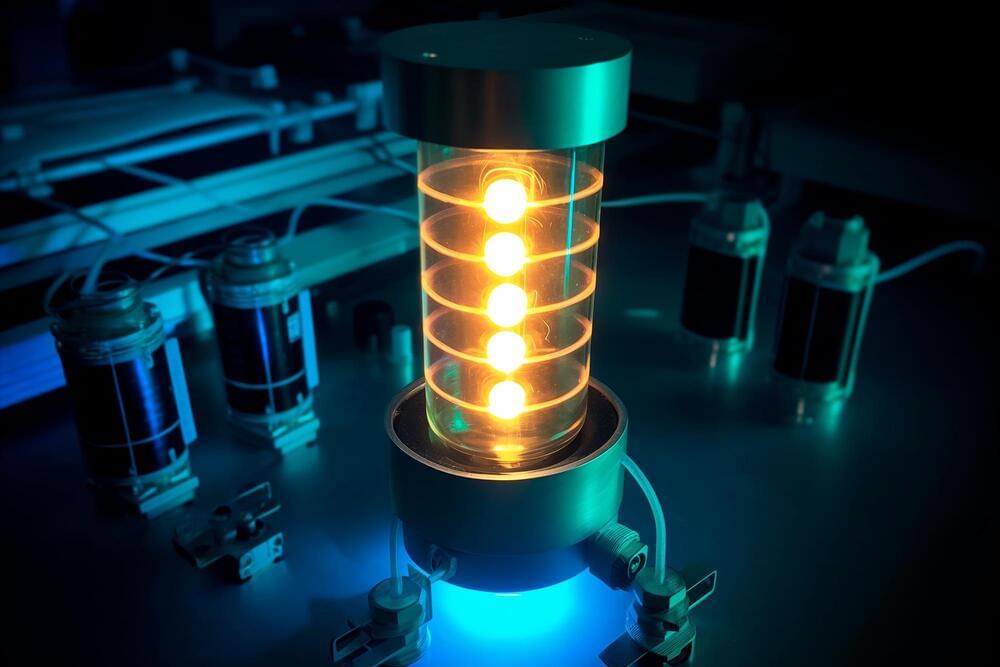

Researchers Develop Innovative Battery Recycling Method

Posted by Natalie Chan in categories: engineering, sustainability, transportation

A research team at Rice University led by James Tour, the T.T. and W.F. Chao Professor of Chemistry and professor of materials science and nanoengineering, is tackling an environmental challenge posed by the growing use of lithium-ion batteries: how to recycle them efficiently.

The team has developed a new method to extract purified active materials from battery waste, as detailed in a study published July 24 in the journal Nature Communications. The findings could enable efficient separation and recycling of valuable battery materials at minimal cost, contributing to greener production of electric vehicles (EVs).

“With the surge in battery use, particularly in EVs, the need for developing sustainable recycling methods is pressing,” Tour said.