Oct 5, 2022

New shape memory alloy discovered through artificial intelligence framework

Posted by Dan Breeden in categories: robotics/AI, transportation

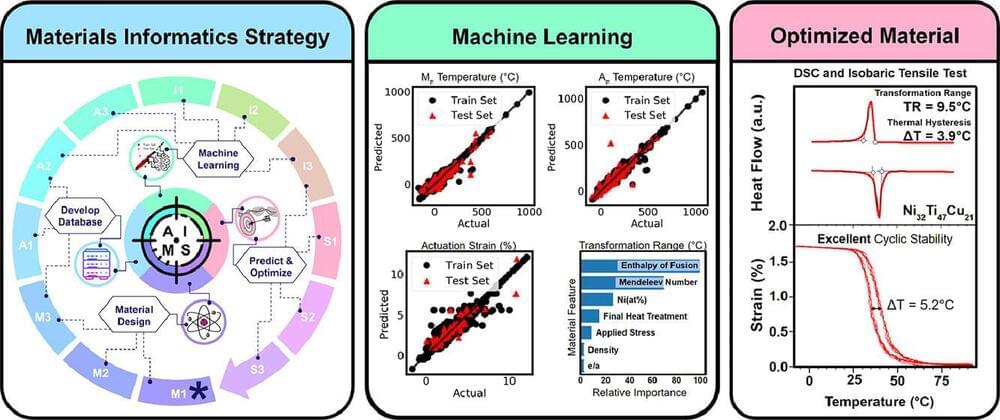

Researchers from the Department of Materials Science and Engineering at Texas A&M University have used an Artificial Intelligence Materials Selection framework (AIMS) to discover a new shape memory alloy. The alloy showed the highest operating efficiency achieved so far by a nickel-titanium-based material. In addition, the team's data-driven framework offers a proof of concept for future materials development.

The study was recently published in the journal Acta Materialia.

Shape memory alloys are used in many fields that need compact, lightweight, solid-state actuation, where they replace hydraulic or pneumatic actuators. They can do this because they deform when cold and return to their original shape when heated. This property is critical for applications such as airplane wings, jet engines and automotive components, which must withstand repeated, recoverable large changes in shape.