Cellular senescence, a permanent state of replicative arrest in otherwise proliferating cells, is a hallmark of ageing and has been linked to ageing-related diseases such as cancer. Senescent cells have been shown to accumulate in the tissues of aged organisms, which in turn can lead to chronic inflammation. Many genes have been associated with cellular senescence, yet a comprehensive understanding of senescence pathways is still lacking. To this end, we created CellAge (http://genomics.senescence.info/cells), a manually curated database of 279 human genes associated with cellular senescence, and performed various integrative and functional analyses. We observed that genes promoting cellular senescence tend to be overexpressed with age in human tissues and are significantly overrepresented in anti-longevity and tumour-suppressor gene databases. By contrast, genes inhibiting cellular senescence overlapped with pro-longevity genes and oncogenes. Furthermore, an evolutionary analysis revealed strong conservation of senescence-associated genes in mammals, but not in invertebrates. Using the CellAge genes as seed nodes, we also built protein-protein interaction and co-expression networks. Clusters in these networks were enriched for cell cycle and immunological processes, and network topological parameters revealed novel potential regulators of senescence. Using siRNAs, we then tested 26 candidates and found that 19 of them induced markers of senescence. Overall, our work provides a new resource for researchers studying cellular senescence, and our systems biology analyses offer new insights into, and novel genes associated with, cellular senescence.

Not only has Su Metcalfe’s treatment succeeded in early trials, but it also involves no drugs and has no side effects, and it could begin human trials as soon as 2020.

Any thoughts?

What the nervous system of the roundworm tells us about freezing brains and reanimating human minds.

Saving Senegal’s mangroves


Haidar el Ali’s reforestation project has planted 152 million mangrove buds in southern Senegal over the past decade!

Oklahoma Attorney General Mike Hunter had claimed that J&J and its pharmaceutical subsidiary Janssen aggressively marketed opioids to doctors and downplayed their risks as early as the 1990s. The state said J&J’s sales practices created an oversupply of the addictive painkillers and “a public nuisance” that upended lives and would cost the state $12.7 billion to $17.5 billion. The state was seeking more than $17 billion from the company.

J&J, which marketed the opioid painkillers Duragesic and Nucynta, has denied any wrongdoing. Lawyers for the company disputed the legal basis of Oklahoma’s suit, which relied on a “public nuisance” claim. They said the state had previously limited its public nuisance law to disputes involving property or public spaces.

Investors were expecting J&J to be fined between $500 million and $5 billion, according to Evercore ISI analyst Elizabeth Anderson.

A polymer that self-destructs? Once a fictional idea, such polymers now exist: they are rugged enough to ferry packages or sensors into hostile territory, yet vaporize immediately once a military mission is complete. The material has been made into a rigid-winged glider and a nylon-like parachute fabric for airborne delivery across distances of a hundred miles or more. It could also be used someday in building materials or environmental sensors.

The researchers will present their results today at the American Chemical Society (ACS) Fall 2019 National Meeting & Exposition.

“This is not the kind of thing that slowly degrades over a year, like the biodegradable plastics that consumers might be familiar with,” says Paul Kohl, Ph.D., whose team developed the material. “This polymer disappears in an instant when you push a button to trigger an internal mechanism or the sun hits it.” The disappearing polymers were developed for the Department of Defense, which is interested in deploying electronic sensors and delivery vehicles that leave no trace of their existence after use, thus avoiding discovery and alleviating the need for device recovery.

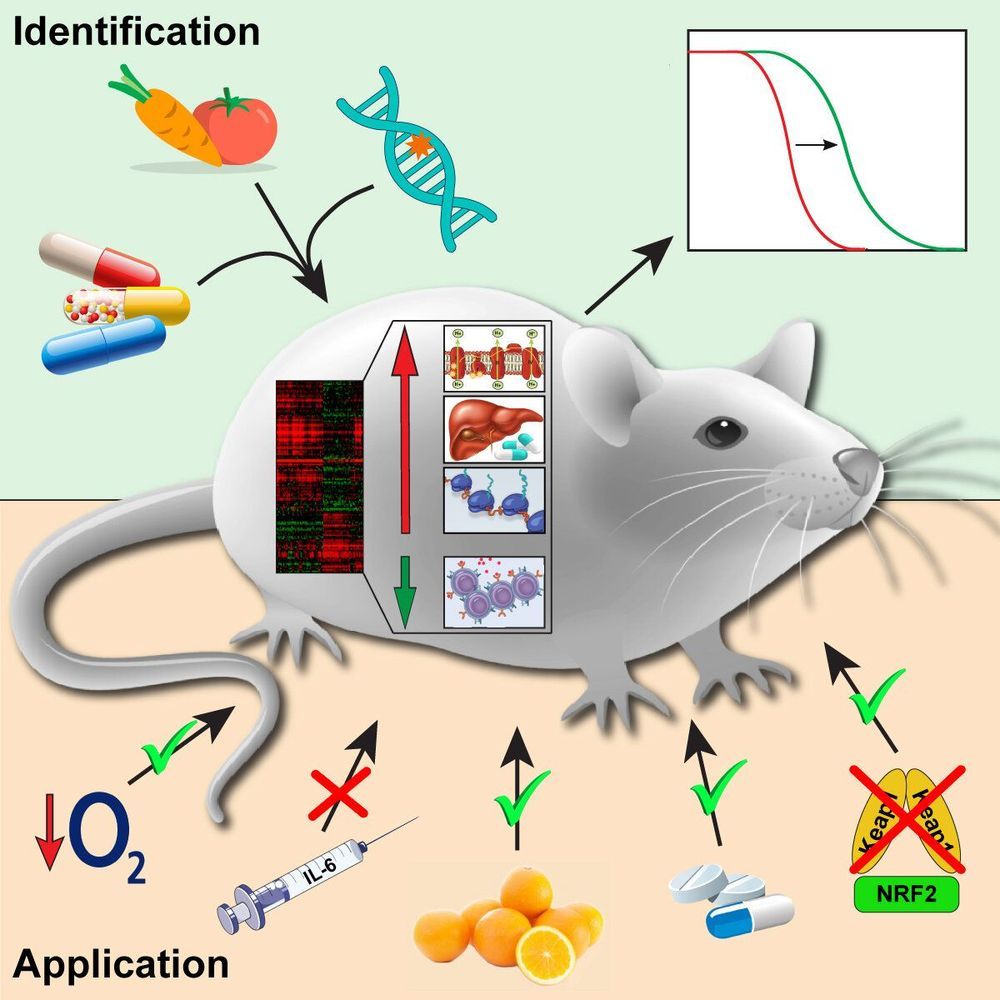

An international group of scientists studied the effects of 17 lifespan-extending interventions on gene activity in mice and identified gene-expression biomarkers of longevity. The results of their study were published in the journal Cell Metabolism.

Dozens of interventions are now known to extend the lifespan of organisms ranging from yeast to mammals. They include chemical compounds (e.g., rapamycin), genetic interventions (e.g., mutations that disrupt growth hormone synthesis), and diets (e.g., caloric restriction). Some targets of these interventions have been identified. However, the systemic molecular mechanisms that lead to lifespan extension are still not clearly understood.

A group of scientists from Skoltech, Moscow State University and Harvard University set out to fill this gap by identifying key molecular processes associated with longevity. To do so, they examined how various lifespan-extending interventions affect gene activity in mice, a commonly used mammalian model organism that is relatively closely related to humans.

In China, scoring citizens’ behavior is official government policy. U.S. companies are increasingly doing something similar, outside the law.


Meet Margaret, the Super Ager — could people like her teach us how to keep our brains younger for longer?

Humans can communicate a range of emotions nonverbally, from terrified shrieks to exasperated groans. Voice inflections and other cues can convey subtle feelings, from ecstasy to agony, arousal and disgust. Even in ordinary speech, the human voice is packed with meaning, which carries a lot of potential value if you’re a company collecting personal data.

Now, researchers at Imperial College London have used AI to mask the emotional cues in users’ voices when they speak to internet-connected voice assistants. The idea is to put a “layer” between the user and the cloud their data is uploaded to, automatically converting emotional speech into “normal” speech. They recently published their paper, “Emotionless: Privacy-Preserving Speech Analysis for Voice Assistants,” on the arXiv preprint server.

Our voices can reveal our confidence and stress levels, physical condition, age, gender, and personal traits. This isn’t lost on smart speaker makers: companies such as Amazon are continually working to improve the emotion-detecting abilities of their AI.