What may not be obvious is that the sleeping giant, Google, has woken up, and they are iterating at a pace that will smash GPT-4's total pre-training FLOPs by 5x before the end of the year. The path to 100x by the end of next year is clear given their current infrastructure buildout. Whether Google has the stomach to put these models out publicly without neutering their creativity or their existing business model is a different discussion.

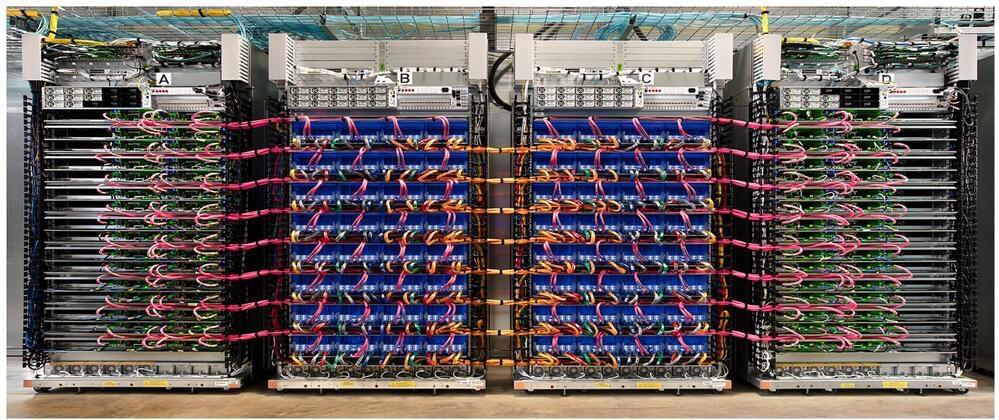

Today we want to discuss Google’s training systems for Gemini, the iteration velocity for Gemini models, Google’s Viperfish (TPUv5) ramp, Google’s competitiveness going forward versus the other frontier labs, and a crowd we are dubbing the GPU-Poor.

Access to compute is a bimodal distribution. There are a handful of firms with 20k+ A100/H100 GPUs, where individual researchers can access hundreds or thousands of GPUs for pet projects. Chief among these are researchers at OpenAI, Google, Anthropic, Inflection, X, and Meta, who will have the highest ratios of compute resources to researchers. A few of the firms above, as well as multiple Chinese firms, will have 100k+ GPUs by the end of next year, although for China we are sure only of the GPU volumes, not of the ratio of compute to researchers.
