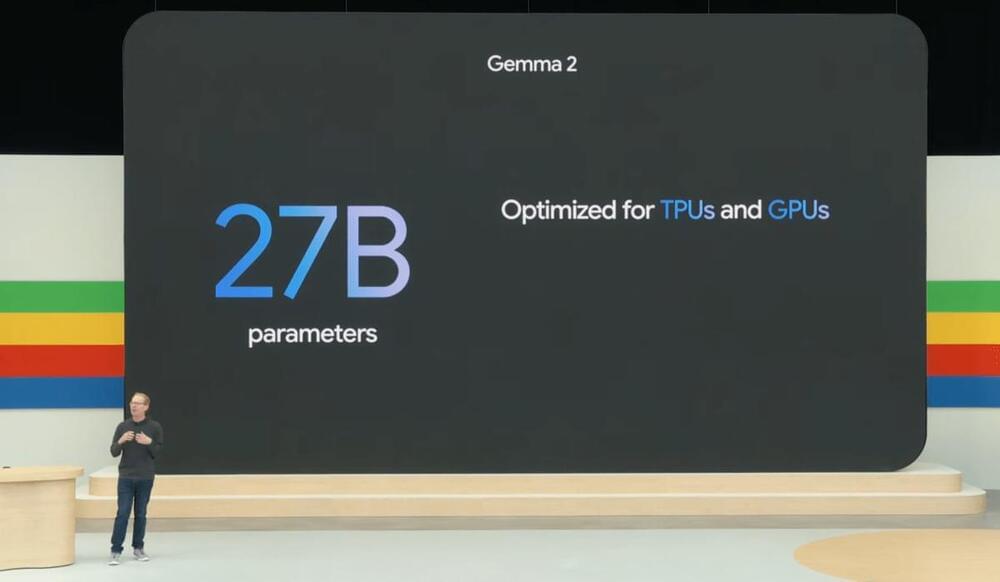

The headline-grabbing release here is Gemma 2, the next generation of Google’s open-weights Gemma models, which will launch with a 27-billion-parameter model in June.

Already available is PaliGemma, a pre-trained Gemma variant that Google describes as “the first vision language model in the Gemma family” for image captioning, image labeling and visual Q&A use cases.

Until now, the standard Gemma models, which launched earlier this year, were available only in 2-billion- and 7-billion-parameter versions. That makes the new 27-billion-parameter model a substantial step up, nearly four times the size of the largest existing variant.
