A team of Apple researchers has found that advanced AI models’ alleged ability to “reason” isn’t all it’s cracked up to be.

“Reasoning” is a word that’s thrown around a lot in the AI industry these days, especially when it comes to marketing the advancements of frontier AI language models. OpenAI, for example, recently dropped its “Strawberry” model, which the company billed as its next-level large language model (LLM) capable of advanced reasoning. (That model has since been renamed just “o1.”)

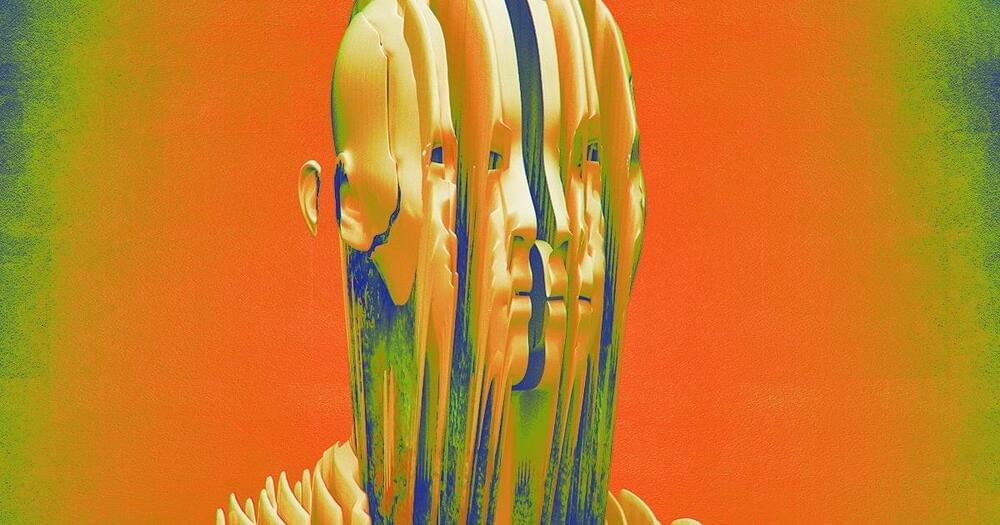

But marketing aside, there’s no agreed-upon, industrywide definition of what exactly reasoning means. Like other AI industry terms such as “consciousness” or “intelligence,” reasoning is a slippery, ephemeral concept; as it stands, AI reasoning can be chalked up to an LLM’s ability to “think” its way through queries and complex problems in a way that resembles human problem-solving patterns.
