Jan 7, 2015

CROSS-FUNCTIONAL AWAKEN, YET CONDITIONALIZED CONSCIOUSNESS AS PER NON-GIRLIE U.S. HARD ROCKET SCIENTISTS! By Mr. Andres Agostini

Posted by Andres Agostini in categories: business, complex systems, defense, disruptive technology, economics, education, engineering, ethics, existential risks, finance, futurism, innovation, physics, science, security, strategy

(Excerpted from the White Swan Book)

Sequential and Progressive Tidbits as Follows:

Quoted: “Tony Williams, the founder of the British-based legal consulting firm, said that law firms will see nearly all their process work handled by artificial intelligence robots. The robotic undertaking will revolutionize the industry, ‘completely upending the traditional associate leverage model.’” And: “The

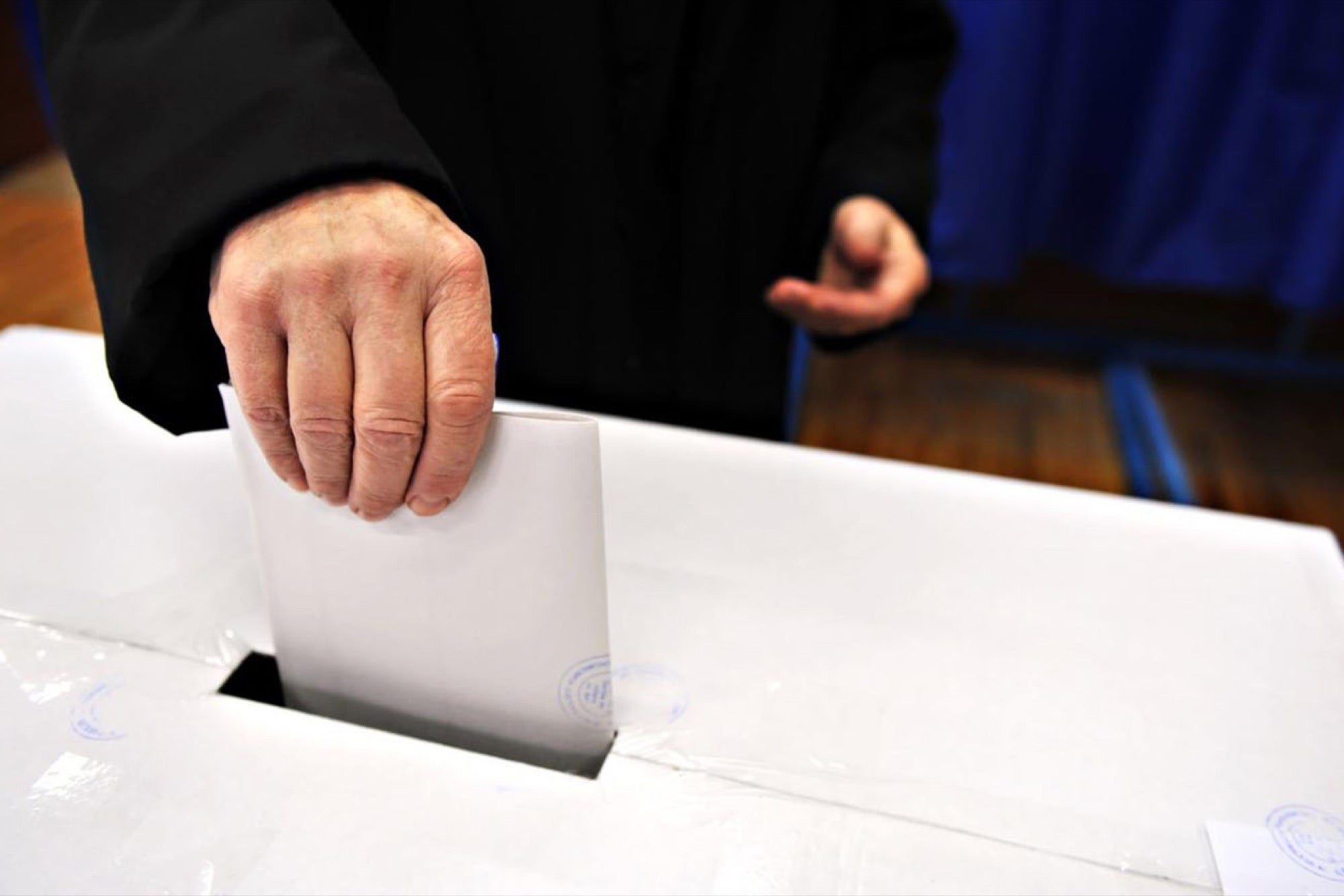

Quoted: “Bitcoin technology offers a fundamentally different approach to vote collection with its decentralized and automated secure protocol. It solves the problems of both paper ballot and electronic voting machines, enabling a cost effective, efficient, open system that is easily audited by both individual voters and the entire community. Bitcoin technology can enable a system where every voter can verify that their vote was counted, see votes for different candidates/issues cast in real time, and be sure that there is no fraud or manipulation by election workers.”
