Feb 17, 2023

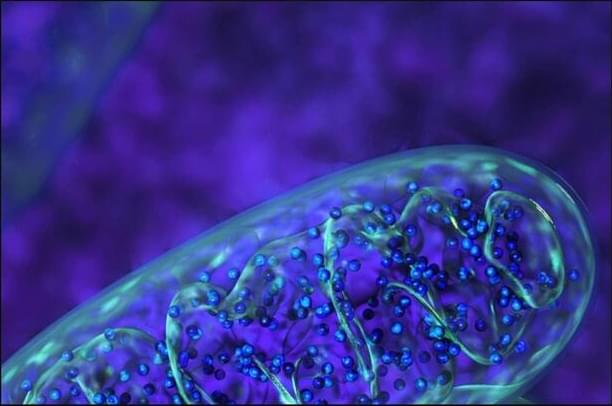

Sakuu Announces 3D-Printed Solid-State Battery Success

Posted by Omuterema Akhahenda in categories: innovation, sustainability

Over the past year or so, CleanTechnica has published several stories about Sakuu, the innovative battery company located in Silicon Valley (where else?) that is working to bring 3D-printed solid-state batteries to market.

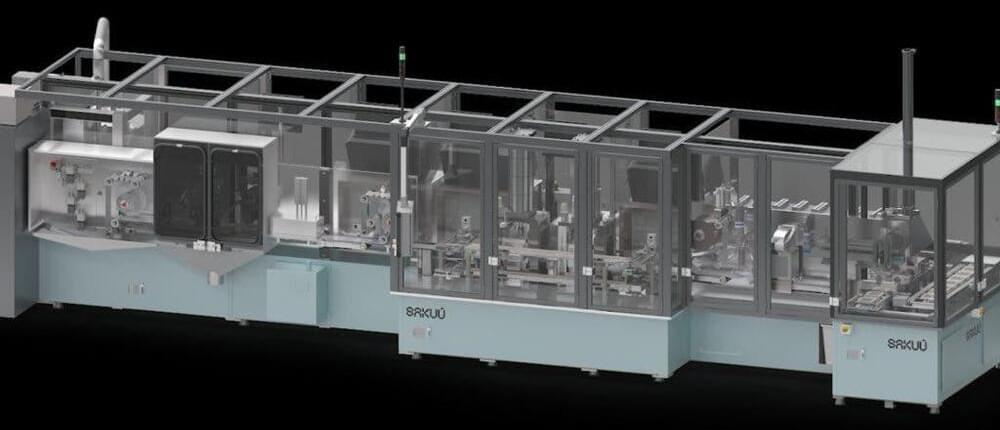

Last June, Robert Bagheri, founder and CEO of Sakuu, said in a press release, “As far as our solid state battery development, we are preparing to unveil a new category of rapid printed batteries manufactured at scale using our additive manufacturing platform. The sustainability and supply chain implications of this pioneering development will be transformational.” Based on the company’s Kavian platform, the rapid 3D-printed batteries will enable customizable, mass-scale, and cost-effective manufacturing of solid-state batteries while solving fundamental challenges confronting battery manufacturers today, the company said at the time.