From UPenn, Google DeepMind, and NVIDIA: Introducing 🎓, our latest effort pushing the frontier of robot learning using LLMs!

Elon Musk confirms Tesla’s humanoid robot Optimus will soon have hands with 22 degrees of freedom, enhancing its dexterity for complex tasks.

Microsoft is said to be building an OpenAI competitor despite its multi-billion-dollar partnership with the firm — and according to at least one insider, it’s using GPT-4 data to do so.

First reported by The Information, the new large language model (LLM) is apparently called MAI-1, and an inside source told the website that Microsoft is using GPT-4 and public information from the web to train it.

MAI-1 may also be trained on datasets from Inflection, the startup previously run by Google DeepMind cofounder Mustafa Suleyman before he joined Microsoft as the CEO of its AI department earlier this year. When it hired Suleyman, Microsoft also brought over most of Inflection’s staff and folded them into Microsoft AI.

In this talk at Mindfest 2024, Hartmut Neven proposes that conscious moments are generated by the formation of quantum superpositions, challenging traditional views on the origins of consciousness.

Danko Nikolic: Practopoiesis tells us machine learning isn’t enough!

Danko Nikolic’s theory of Practopoiesis can help us not only understand the mind but also create AI. Check out his interview for more!

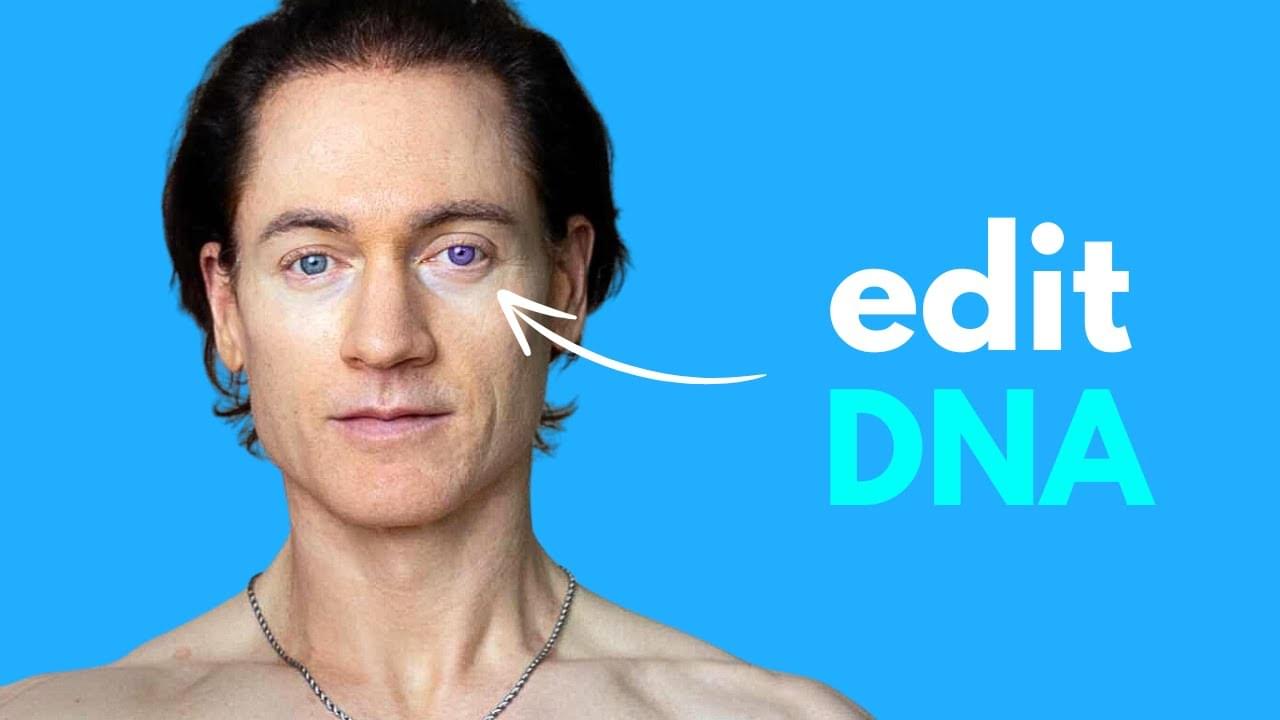

What if AI was trained to create & edit DNA? Newsletter: https://aisearch.substack.c…

Driving at night might be a scary challenge for a new driver, but with hours of practice it soon becomes second nature. For self-driving cars, however, practice may not be enough because the lidar sensors that often act as these vehicles’ “eyes” have difficulty detecting dark-colored objects. Research published in ACS Applied Materials & Interfaces describes a highly reflective black paint that could help these cars see dark objects and make autonomous driving safer.

Lidar, short for light detection and ranging, is a system used in a variety of applications, including geologic mapping and self-driving vehicles. The system works like echolocation, but instead of emitting sound waves, lidar emits tiny pulses of near-infrared light. The light pulses bounce off objects and back to the sensor, allowing the system to map the 3D environment it’s in. But lidar falls short when objects absorb more of that near-infrared light than they reflect, which can occur on black-painted surfaces. Lidar can’t detect these dark objects on its own, so one common solution is to have the system rely on other sensors or software to fill in the information gaps. However, this solution could still lead to accidents in some situations. Rather than reinventing the lidar sensors, though, Chang-Min Yoon and colleagues wanted to make dark objects easier to detect with existing technology by developing a specially formulated, highly reflective black paint.
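The ranging principle described above can be sketched in a few lines. This is a minimal illustration, not the method from the paper: it assumes a simple time-of-flight sensor, where distance follows from the round-trip time of a pulse, and it models the dark-object problem with a hypothetical reflectance threshold (`min_reflectance`, an invented parameter with an arbitrary default) below which the return is treated as too weak to register.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the distance in meters to a target, given the pulse round-trip time.

    The pulse travels to the object and back, so the one-way distance
    is half of (speed of light * elapsed time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def is_detected(reflectance: float, min_reflectance: float = 0.1) -> bool:
    """Toy detection model (assumption): a return registers only if the
    surface reflects at least `min_reflectance` of the near-infrared pulse."""
    if not 0.0 <= reflectance <= 1.0:
        raise ValueError("reflectance must be between 0 and 1")
    return reflectance >= min_reflectance


if __name__ == "__main__":
    # A pulse that returns after one microsecond hit something about 150 m away.
    print(f"{range_from_round_trip(1e-6):.1f} m")
    # Illustrative values only: a highly absorbing black surface vs. a reflective one.
    print(is_detected(0.03), is_detected(0.6))
```

In this toy model, a more reflective black paint would simply raise a surface's `reflectance` above the threshold; real sensors depend on range, angle, and noise as well, which the sketch ignores.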

Microsoft is developing a new generative AI model that will take lots of data and energy to train, according to a new report from The Information published Monday.

Two Microsoft employees tell the outlet that the model has been dubbed MAI-1 internally and is being developed by a team led by Mustafa Suleyman. The ex-Google AI executive worked at AI firm Inflection before Microsoft bought Inflection’s IP and poached most of its staff, including Suleyman, who joined the tech giant in March. The employees say that MAI-1 is separate from Inflection’s Pi models.

Microsoft is reportedly reserving lots of servers with Nvidia graphics cards to train MAI-1, which is expected to be bigger than Microsoft’s previous open-source AI models. This means it will consume tons of electricity during its training phase—a broader issue researchers flag as harmful to the environment. Microsoft declined to comment on MAI-1, but linked to a Monday post from CTO Kevin Scott which states that Microsoft is and will continue to build AI models, some of which “have names like Turing, and MAI.”

String theory could provide a theory of everything for our universe, but it entails 10^500 (more than a centillion) possible solutions. AI models could help to find the right one.

Tiny little threads whizzing through spacetime and vibrating incessantly: this is roughly how you can imagine the universe, according to string theory. The various vibrations of the threads generate the elementary particles, such as electrons and quarks, and the forces acting among them.

See how the LinkedIn co-founder explores the limits of AI with a digital clone that looks, sounds, and even breathes like him.