May 31, 2022

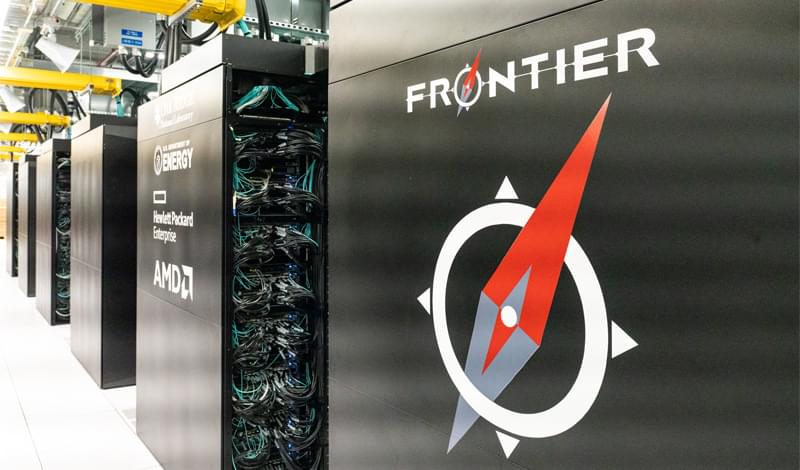

World’s first exascale supercomputer Frontier smashes speed records

Posted by Jose Ruben Rodriguez Fuentes in categories: biotech/medical, supercomputing

The world’s first exascale computer, capable of performing a billion billion (10^18) floating-point operations per second, has been built at Oak Ridge National Laboratory (ORNL) in the US.

A typical laptop manages only a few teraflops. One teraflop is a trillion operations per second, about a million times less than one exaflop. The exascale machine, called Frontier, could help solve a range of complex scientific problems, such as accurate climate modelling, nuclear fusion simulation and drug discovery.

“Frontier will offer modelling and simulation capabilities at the highest level of computing performance,” says Thomas Zacharia at ORNL.